Apache Kafka: A Streaming Data Platform and #javapuzzlersng

@gamussa @sfjava @confluentinc

Slide 1

Slide 2


Slide 3


Slide 4

Who am I?

Slide 5

Who am I?

Solutions Architect

Slide 6

Who am I?

Solutions Architect

Developer Advocate

Slide 7

Who am I?

Solutions Architect

Developer Advocate

@gamussa in internetz

Slide 8

Who am I?

Solutions Architect

Developer Advocate

@gamussa in internetz

Hey you, yes, you, go follow me on Twitter

Slide 9

Kafka & Confluent

Slide 10

We are hiring! https://www.confluent.io/careers/

Slide 11


Slide 12

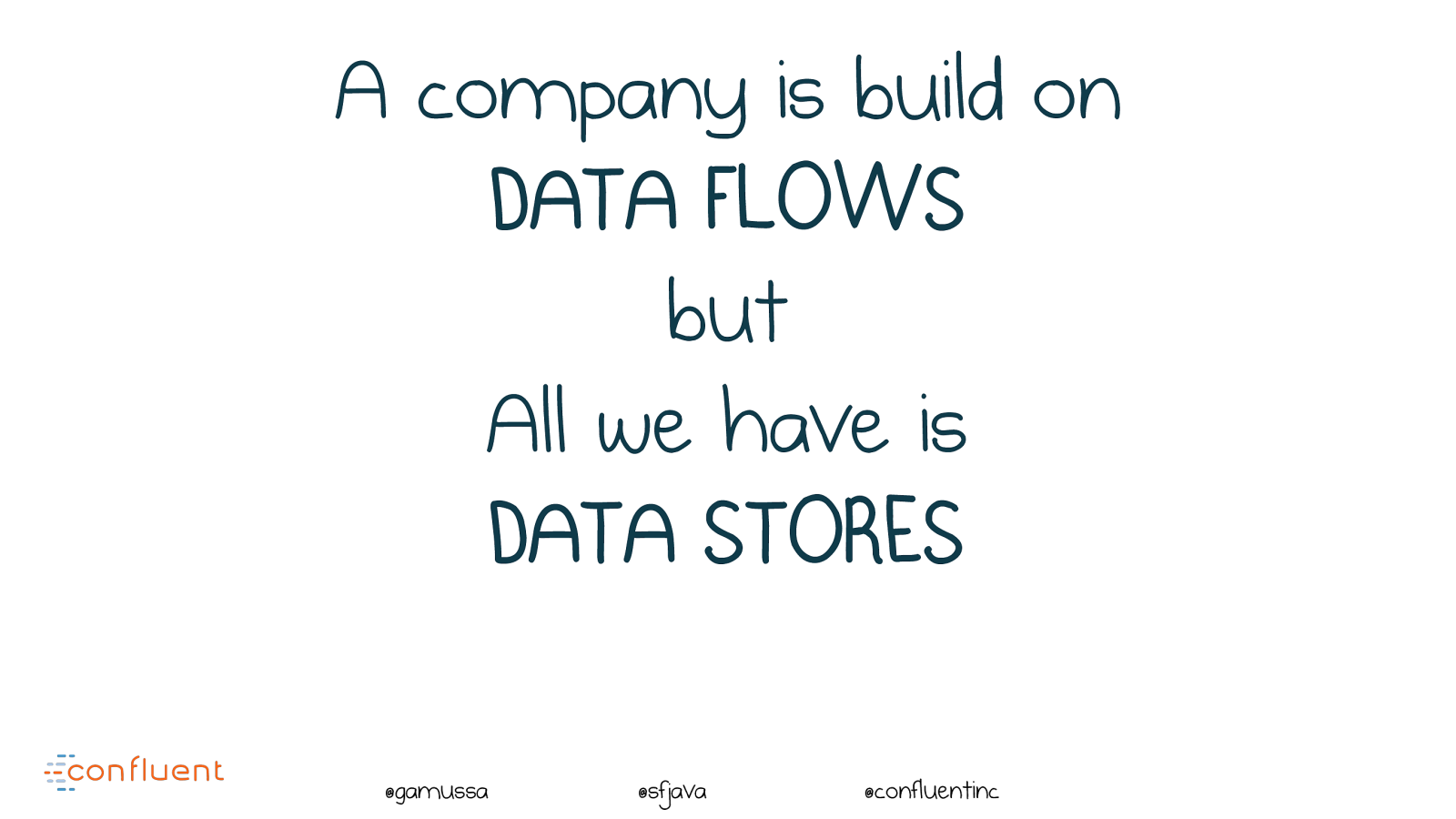

A company is built on

Slide 13

A company is built on DATA FLOWS, but all we have is DATA STORES

Slide 14

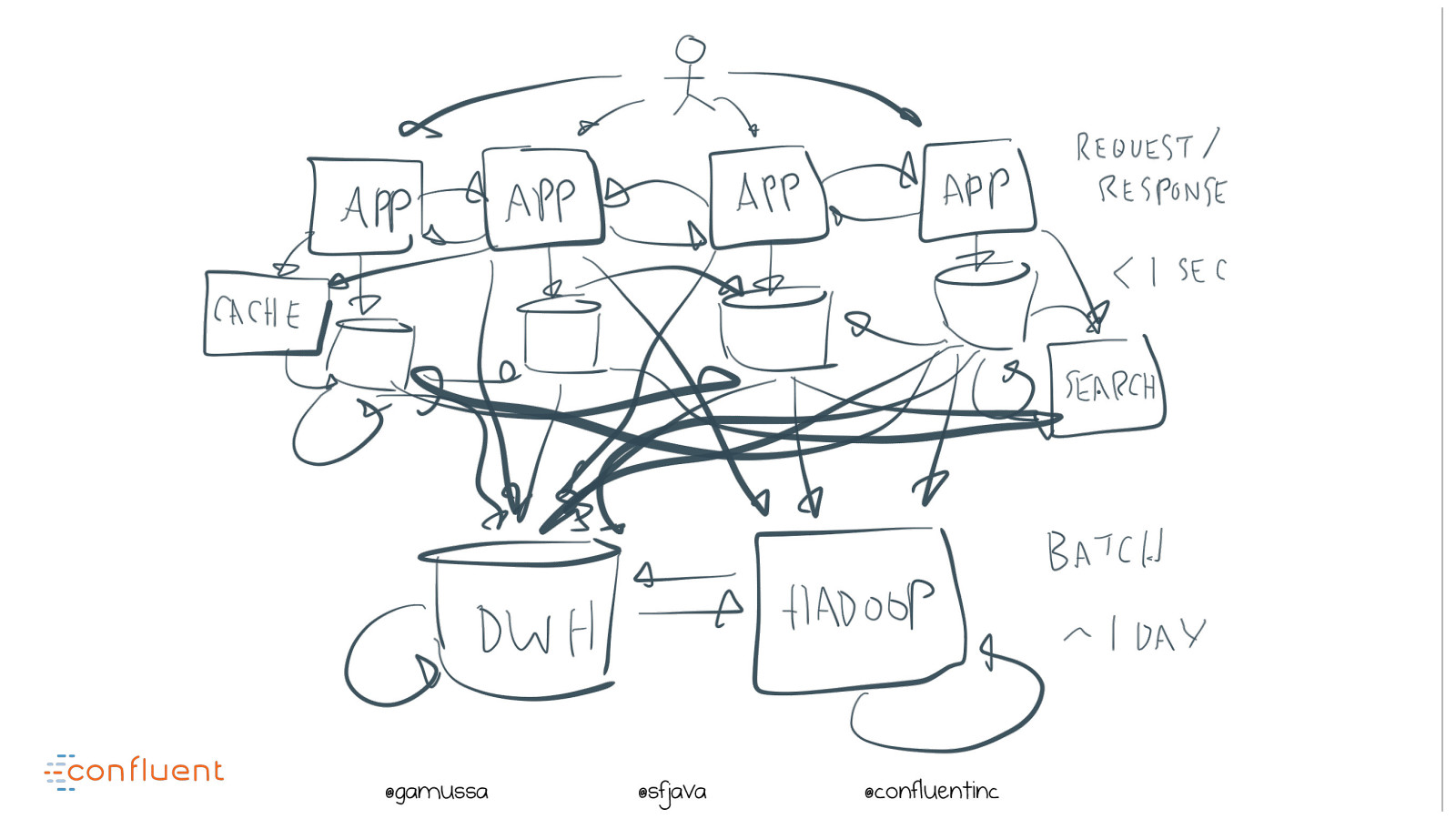


Slide 15


Slide 16

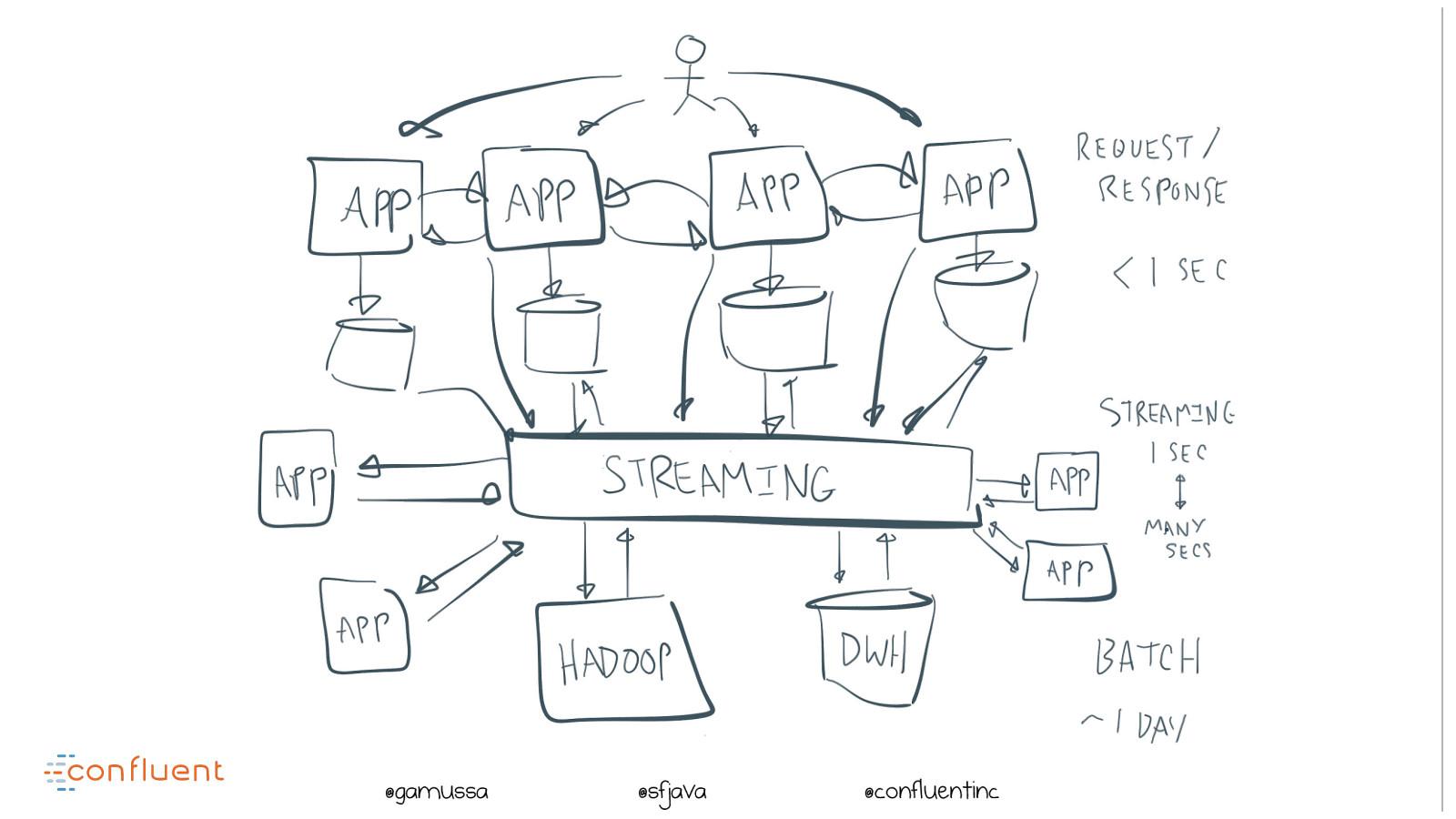


Slide 17

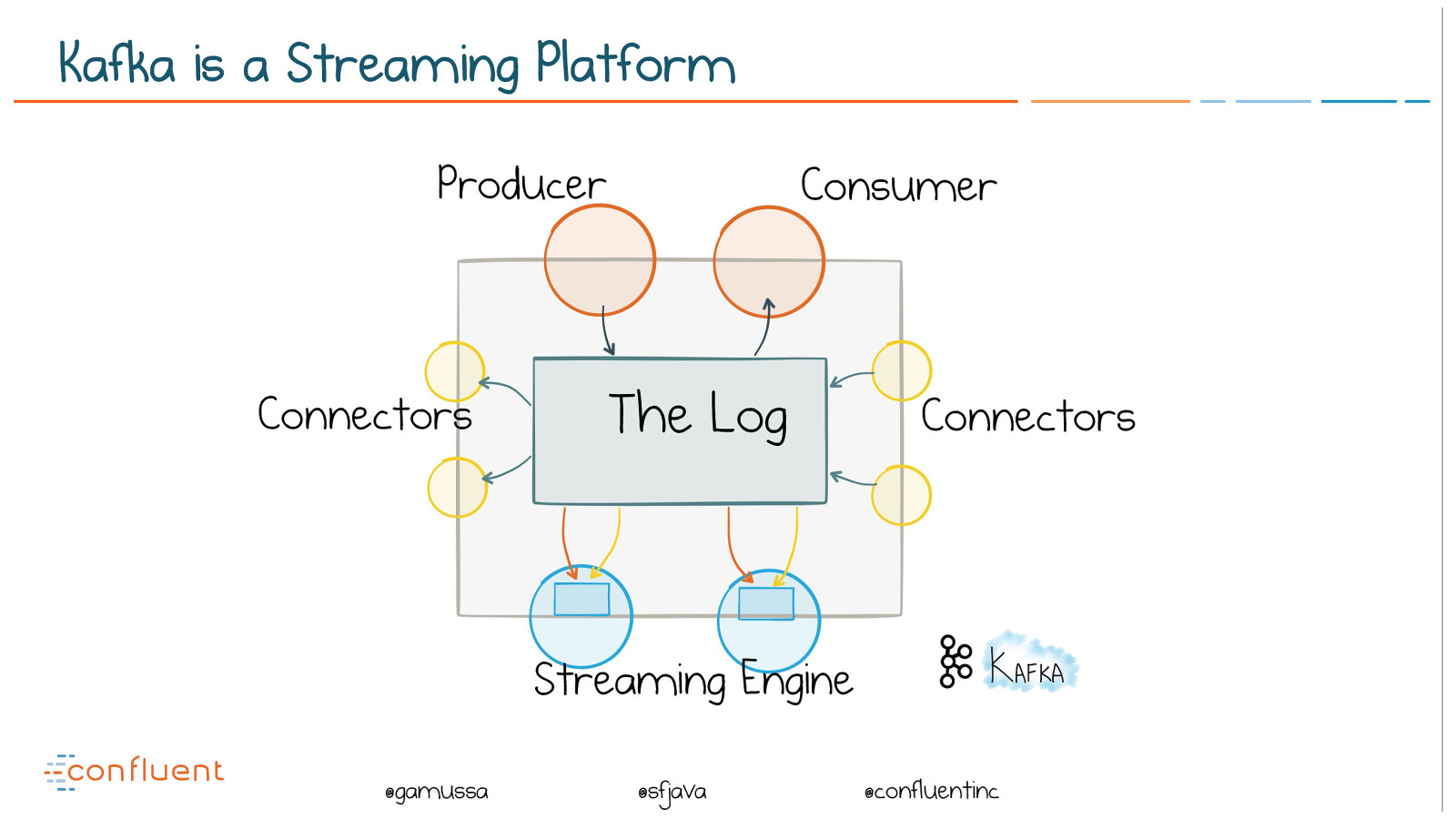

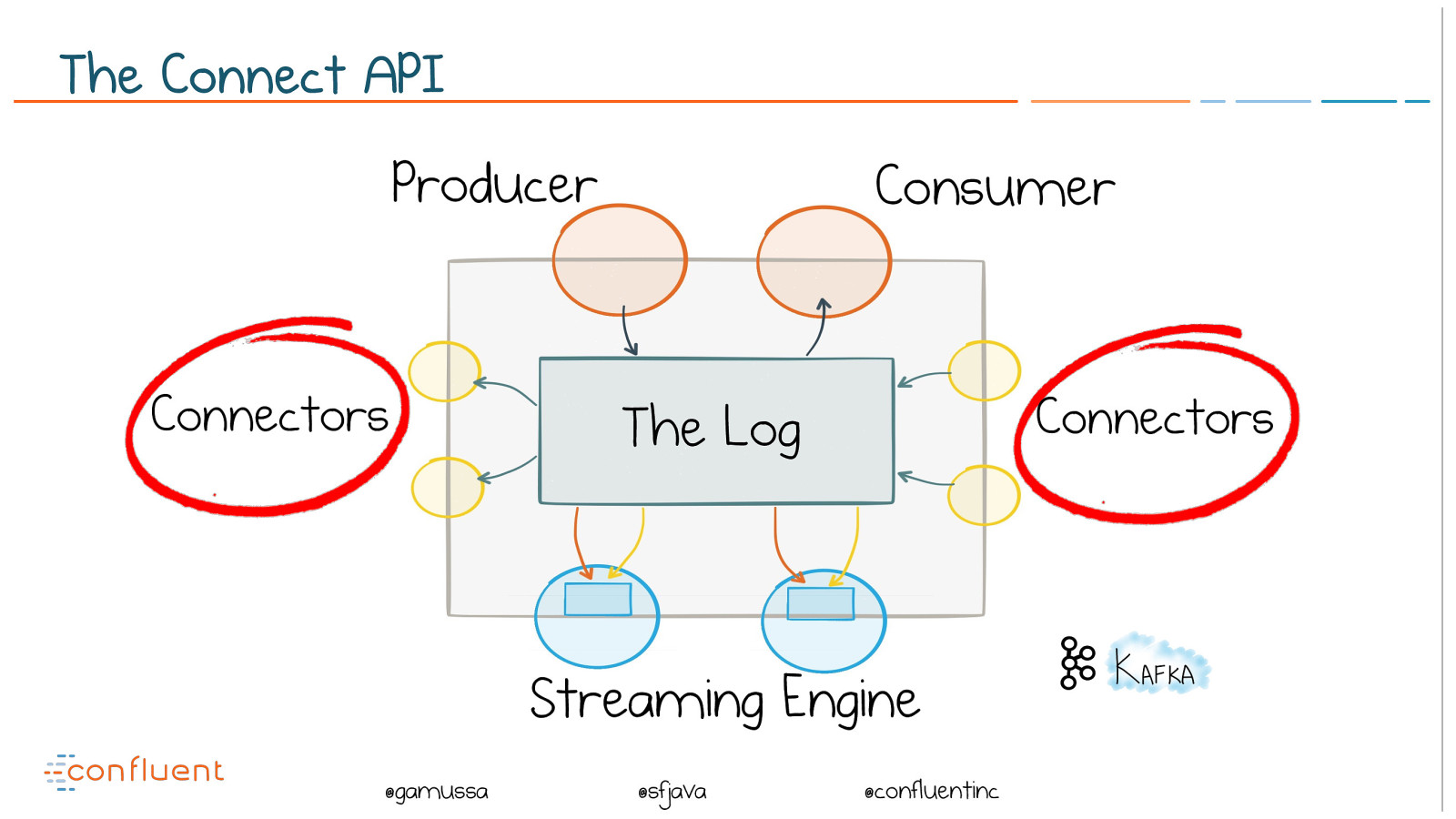

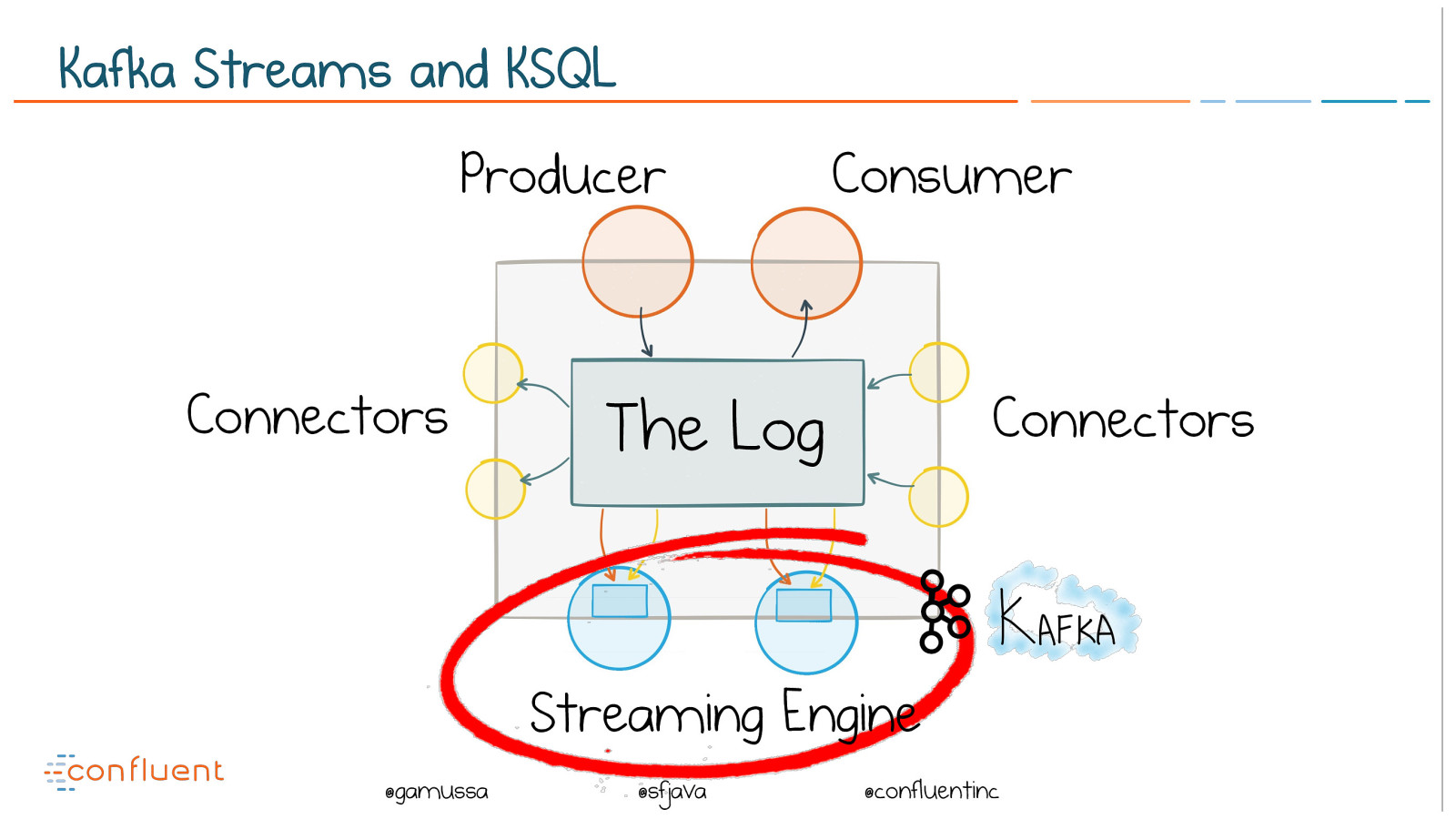

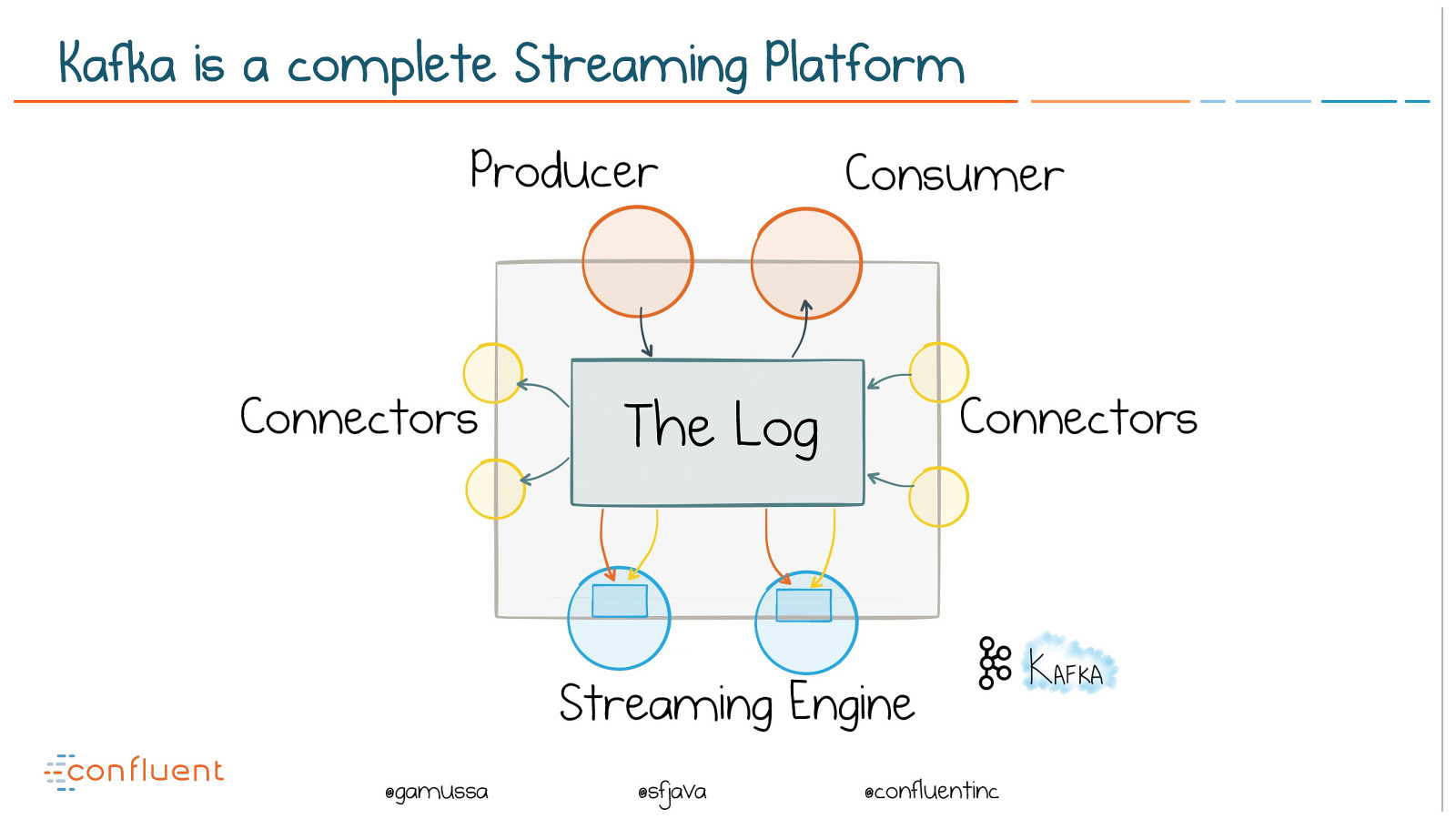

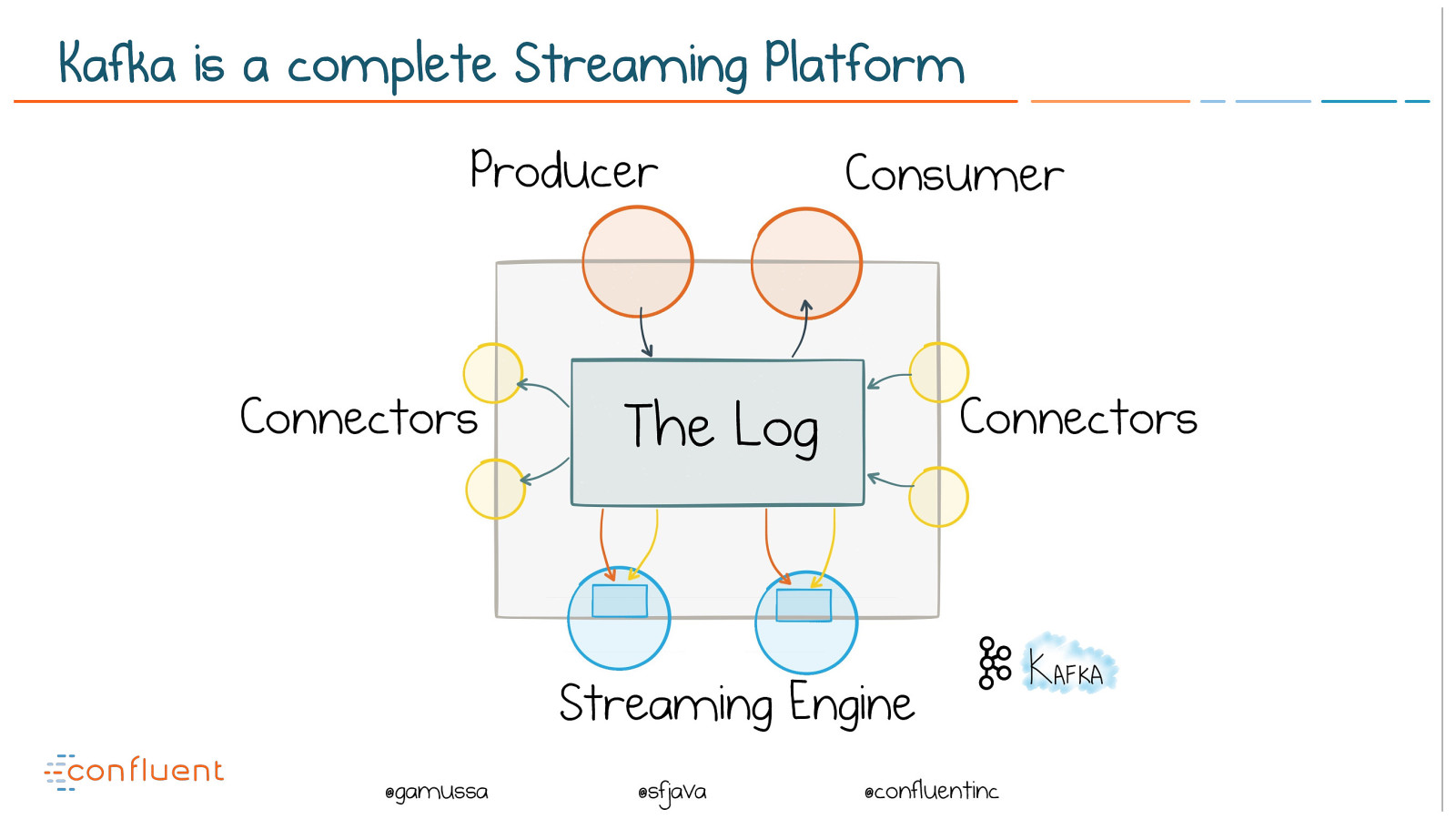

Kafka is a Streaming Platform

Diagram labels: The Log, Connectors, Producer, Consumer, Streaming Engine

Slide 18

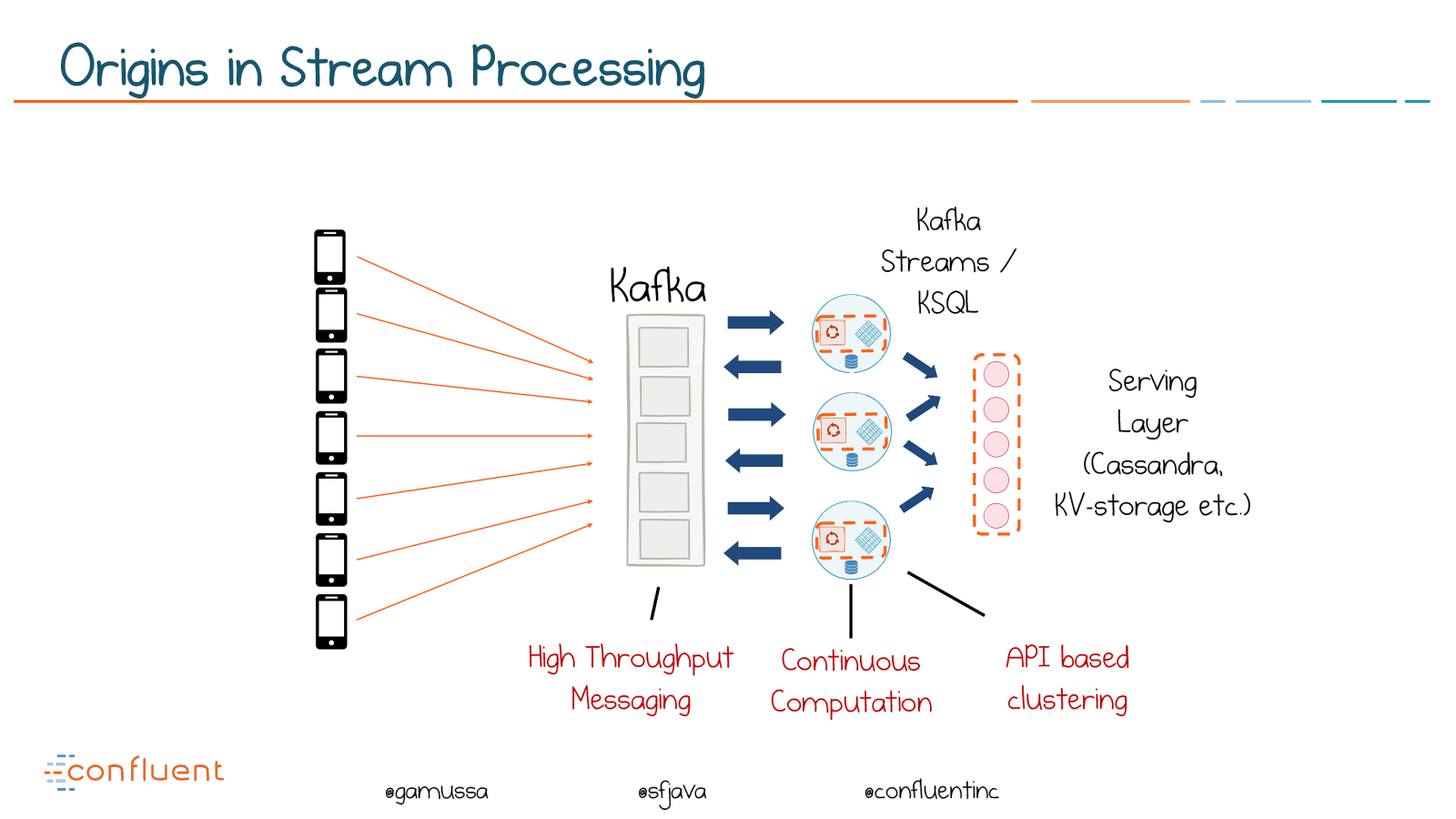

Origins in Stream Processing

Diagram labels: Kafka; Serving Layer (Cassandra, KV-storage etc.); Kafka Streams / KSQL; Continuous Computation; High Throughput Messaging; API based clustering

Slide 19

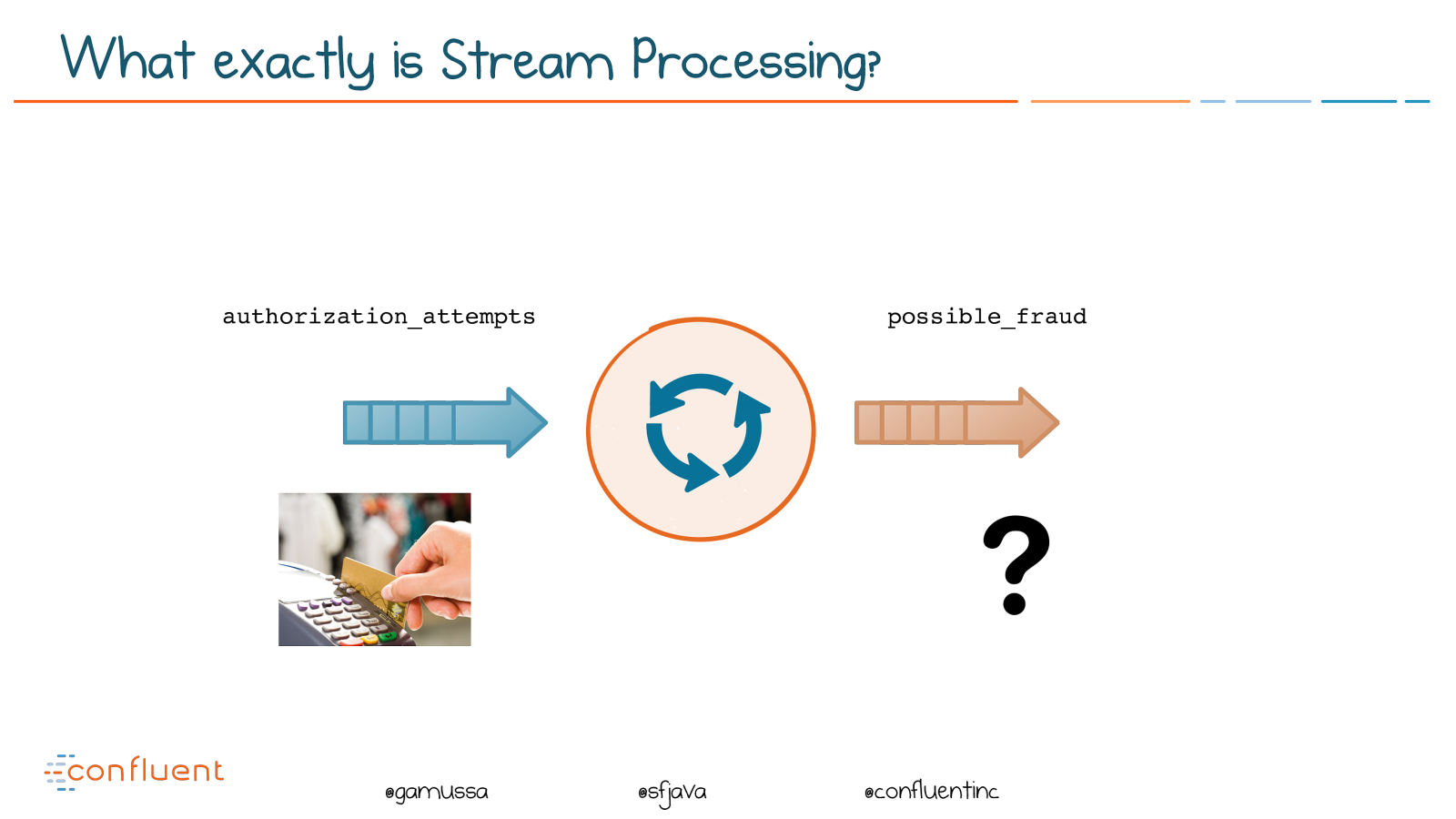

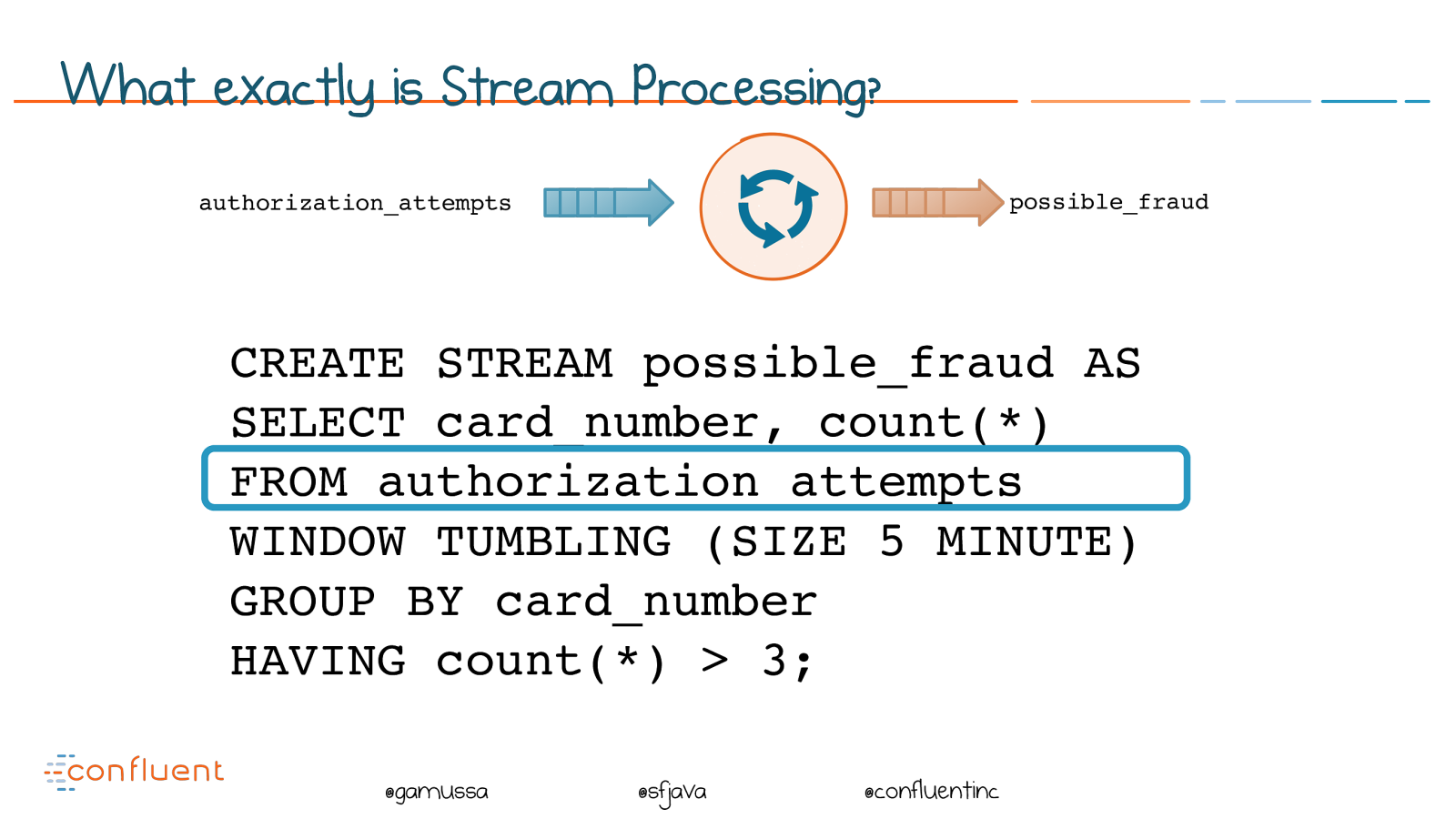

What exactly is Stream Processing?

Diagram labels: authorization_attempts, possible_fraud

Slide 20

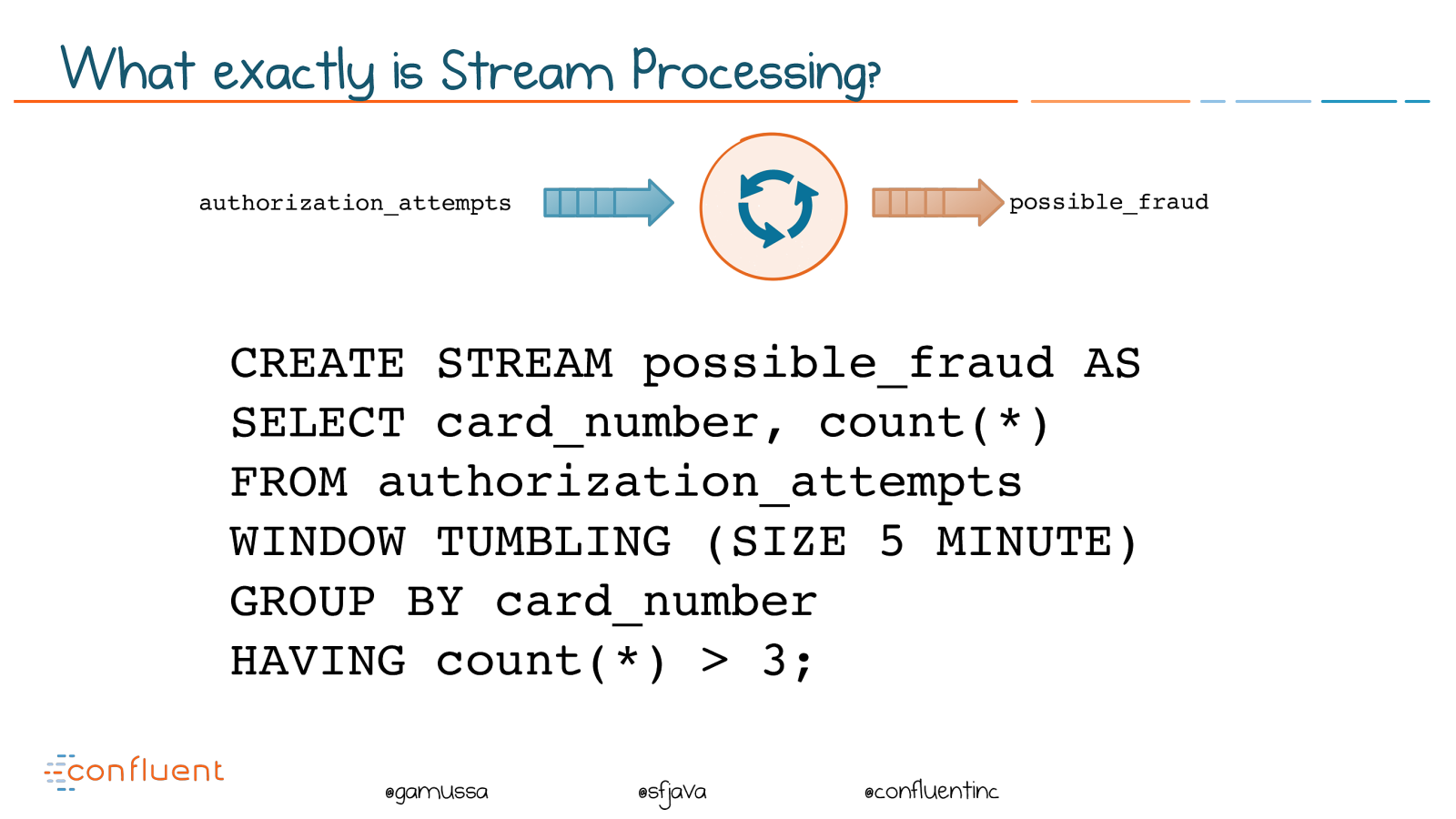

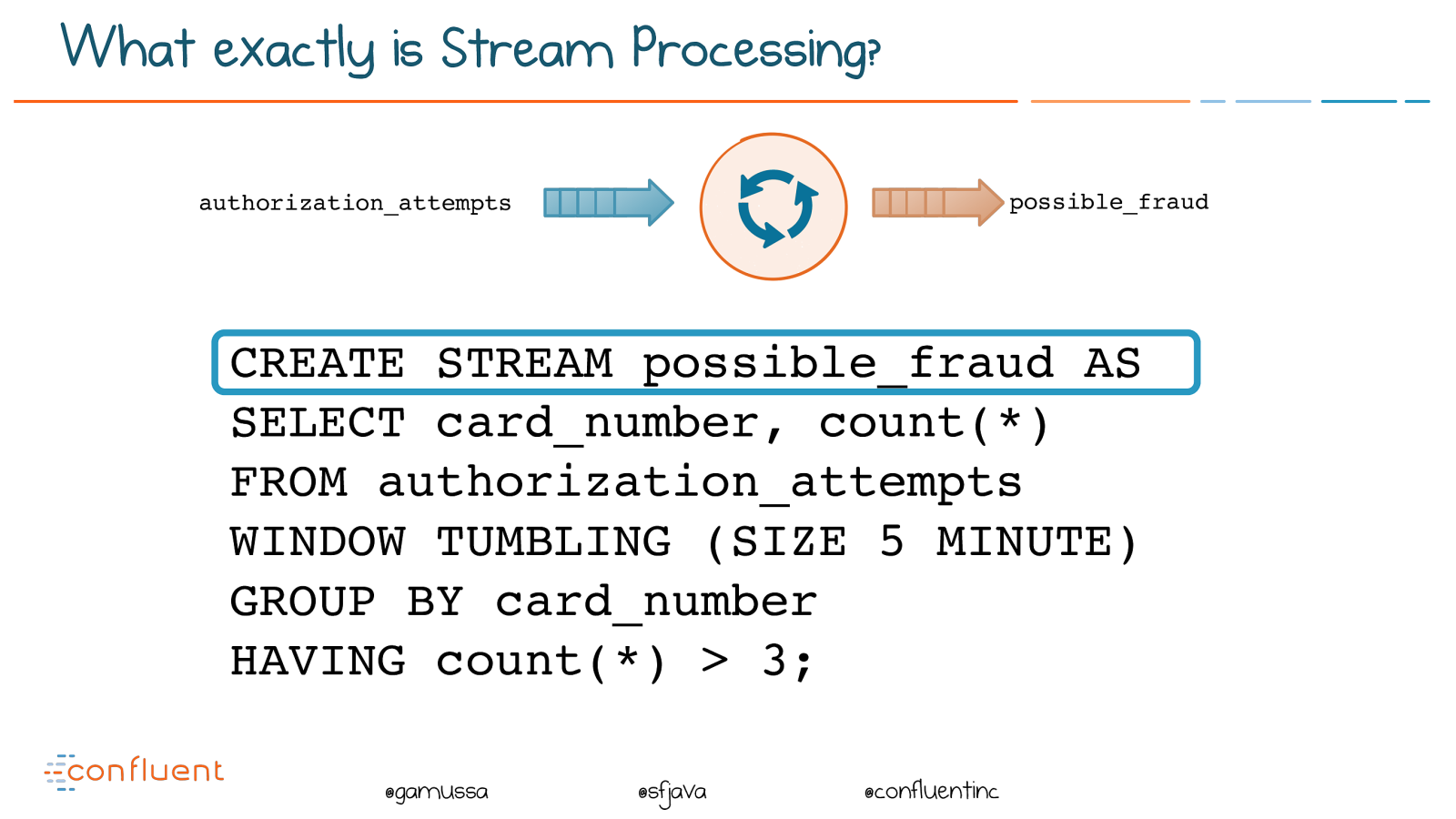

What exactly is Stream Processing?

    CREATE STREAM possible_fraud AS
    SELECT card_number, count(*)
    FROM authorization_attempts
    WINDOW TUMBLING (SIZE 5 MINUTE)
    GROUP BY card_number
    HAVING count(*) > 3;

Diagram labels: authorization_attempts, possible_fraud
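To make the windowing in the query above concrete, here is a minimal plain-Java sketch of the same counting logic. It is an illustration under assumptions, not how KSQL runs the query: the `TumblingWindowSketch` class, the `Attempt` type, and the `possibleFraud` method are hypothetical names, and the sketch processes a finite list in one pass, whereas a streaming engine updates its results continuously as events arrive.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Plain-Java illustration only; this is not how KSQL executes the query.
class TumblingWindowSketch {
    // Mirrors WINDOW TUMBLING (SIZE 5 MINUTE), expressed in milliseconds.
    static final long WINDOW_MS = 5 * 60 * 1000L;

    // Hypothetical event type: one row of authorization_attempts.
    static final class Attempt {
        final String cardNumber;
        final long timestampMs;

        Attempt(String cardNumber, long timestampMs) {
            this.cardNumber = cardNumber;
            this.timestampMs = timestampMs;
        }
    }

    // Counts attempts per (card, window) and keeps only groups with count > 3.
    // Result keys have the form "cardNumber@windowStartMs".
    static Map<String, Integer> possibleFraud(List<Attempt> attempts) {
        Map<String, Integer> counts = new TreeMap<>();
        for (Attempt a : attempts) {
            // Each timestamp falls into exactly one fixed, non-overlapping window.
            long windowStart = Math.floorDiv(a.timestampMs, WINDOW_MS) * WINDOW_MS;
            counts.merge(a.cardNumber + "@" + windowStart, 1, Integer::sum);
        }
        Map<String, Integer> suspicious = new TreeMap<>();
        for (Map.Entry<String, Integer> e : counts.entrySet()) {
            if (e.getValue() > 3) { // HAVING count(*) > 3
                suspicious.put(e.getKey(), e.getValue());
            }
        }
        return suspicious;
    }

    public static void main(String[] args) {
        List<Attempt> attempts = new ArrayList<>();
        for (int i = 0; i < 4; i++) {
            attempts.add(new Attempt("1111", i * 1000L)); // four attempts in one window
        }
        attempts.add(new Attempt("2222", 0L)); // a single attempt, not suspicious
        System.out.println(possibleFraud(attempts));
    }
}
```

Because the windows are tumbling, an attempt that lands exactly on a window boundary starts a new count instead of adding to the previous window.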

Slide 21

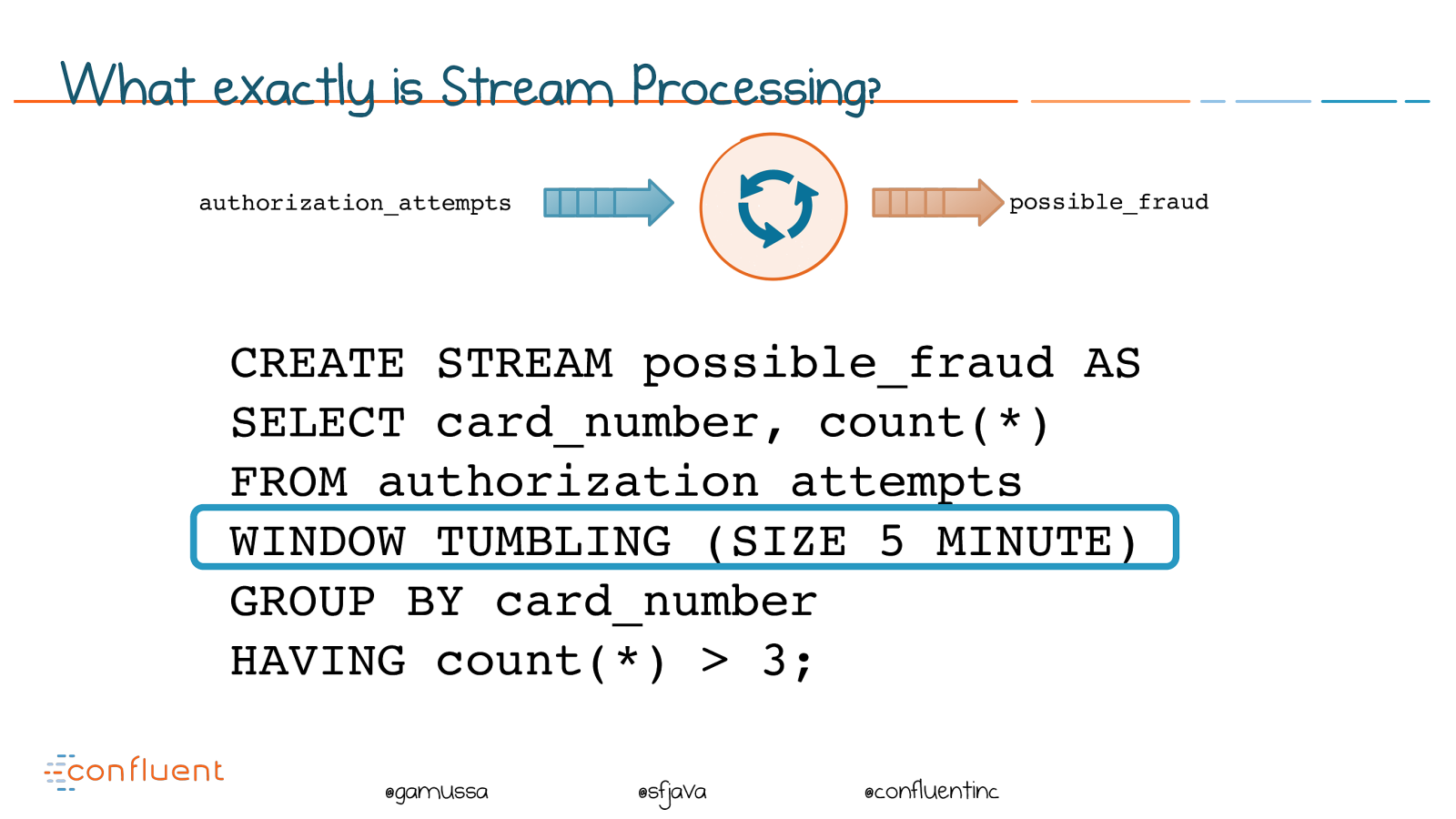


Slide 22


Slide 23

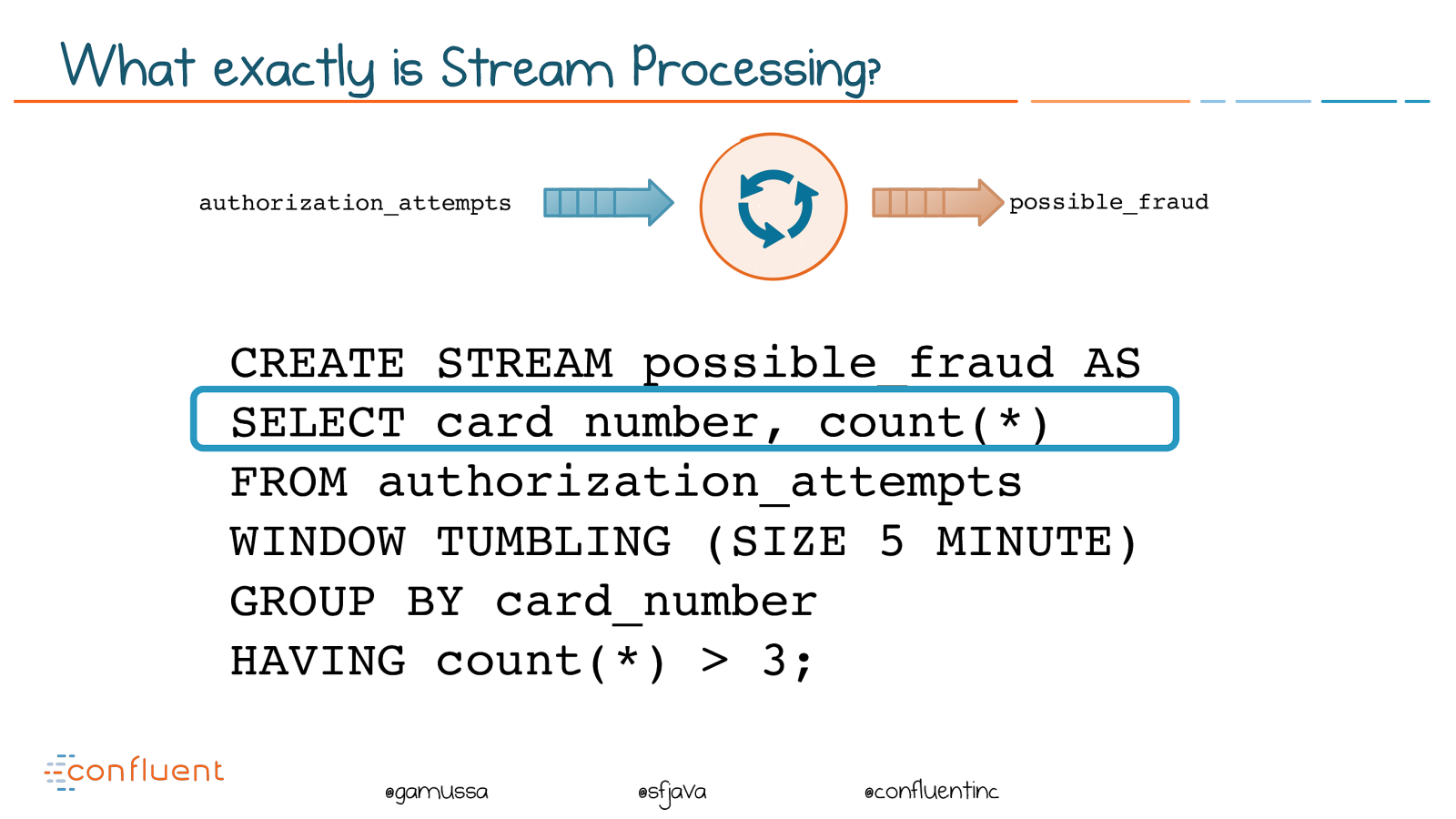


Slide 24


Slide 25

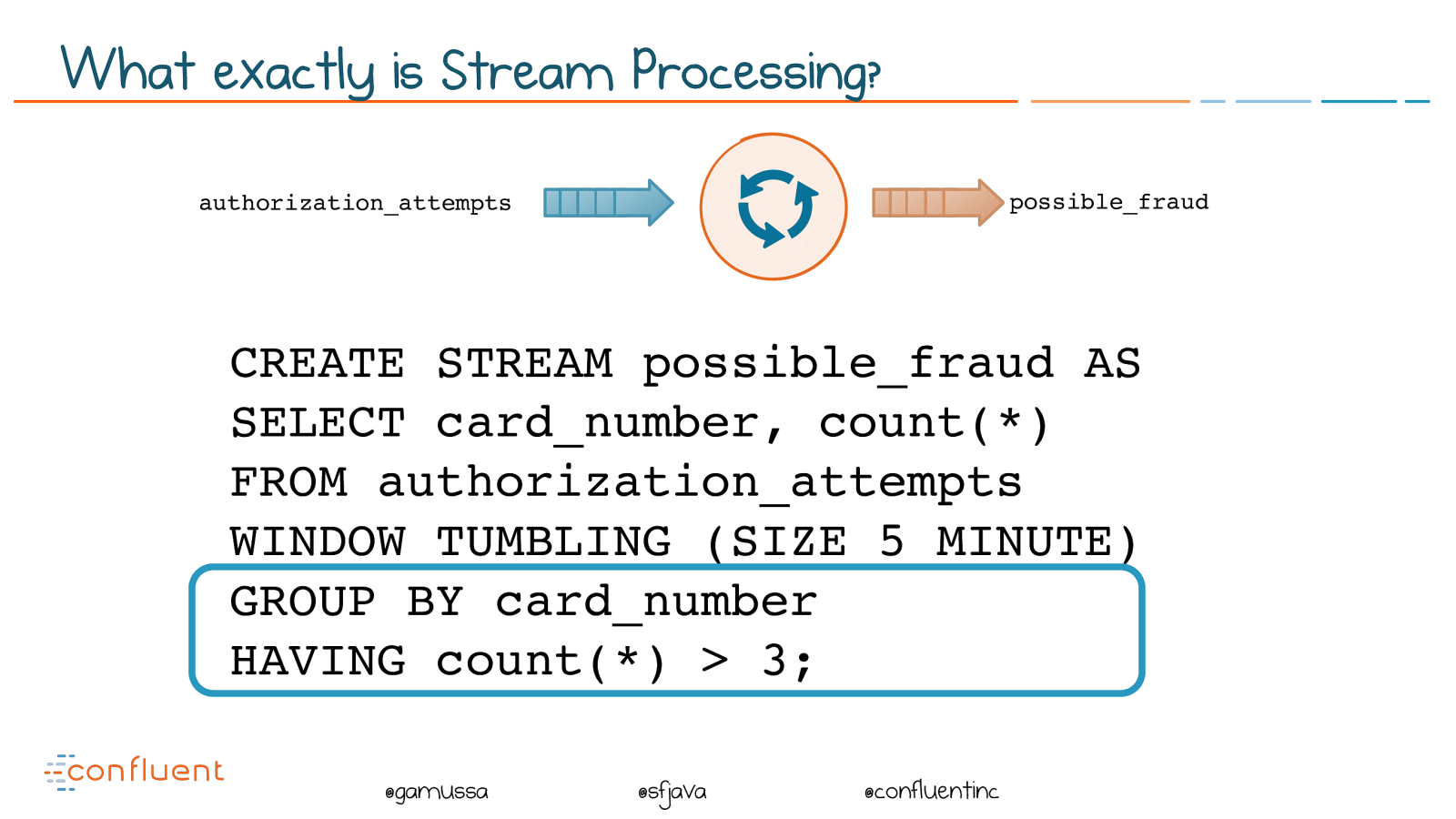


Slide 26

Streaming is the toolset for dealing with events as they move!

Slide 27

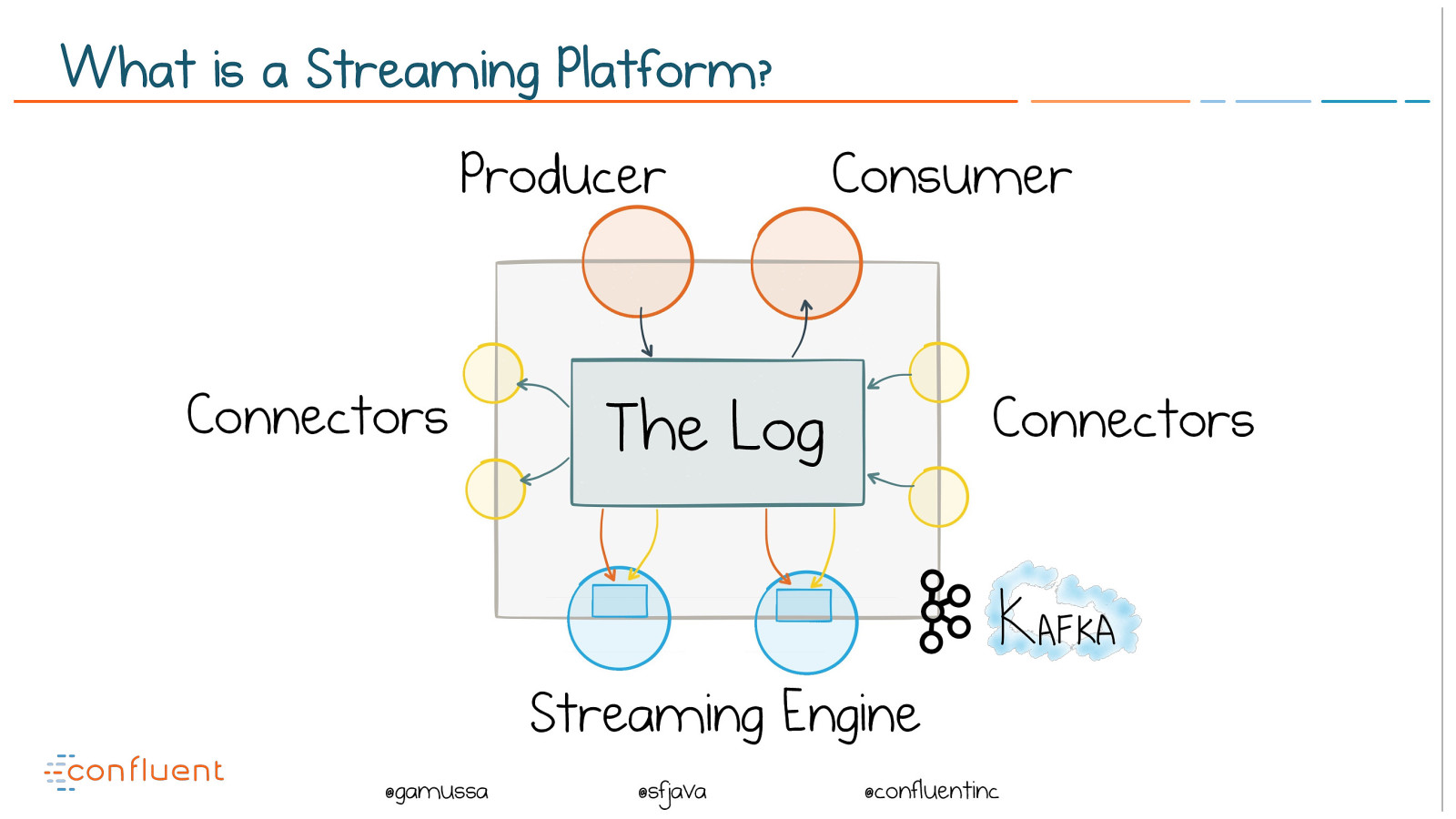

What is a Streaming Platform?

Slide 28

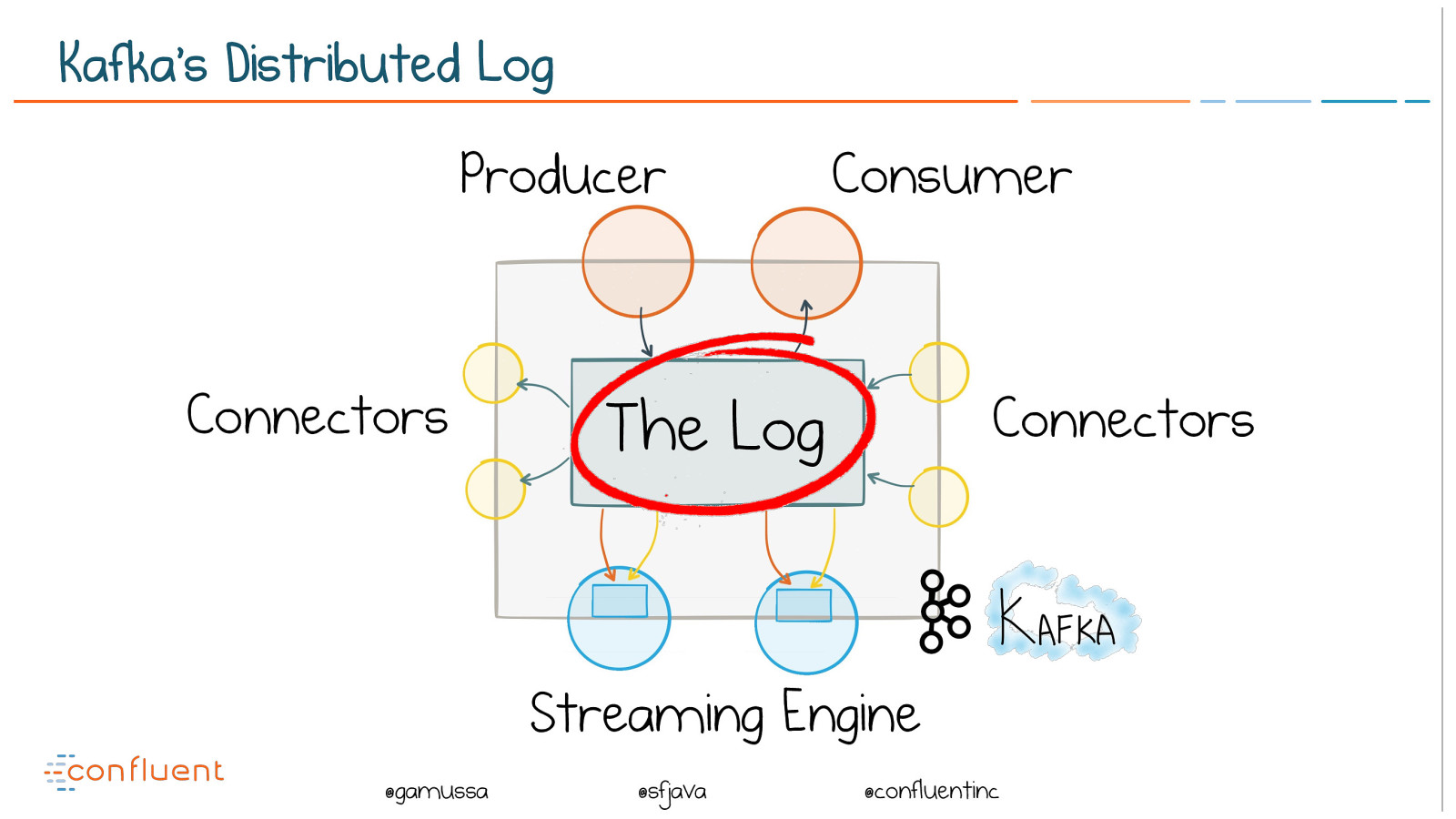

Kafka’s Distributed Log

Slide 29

The log is a type of durable messaging system

Slide 30

The log is a type of durable messaging system

Similar to a traditional messaging system (ActiveMQ, RabbitMQ etc.) but with:

(a) Far better scalability

(b) Built-in fault tolerance / HA

(c) Storage

Slide 31

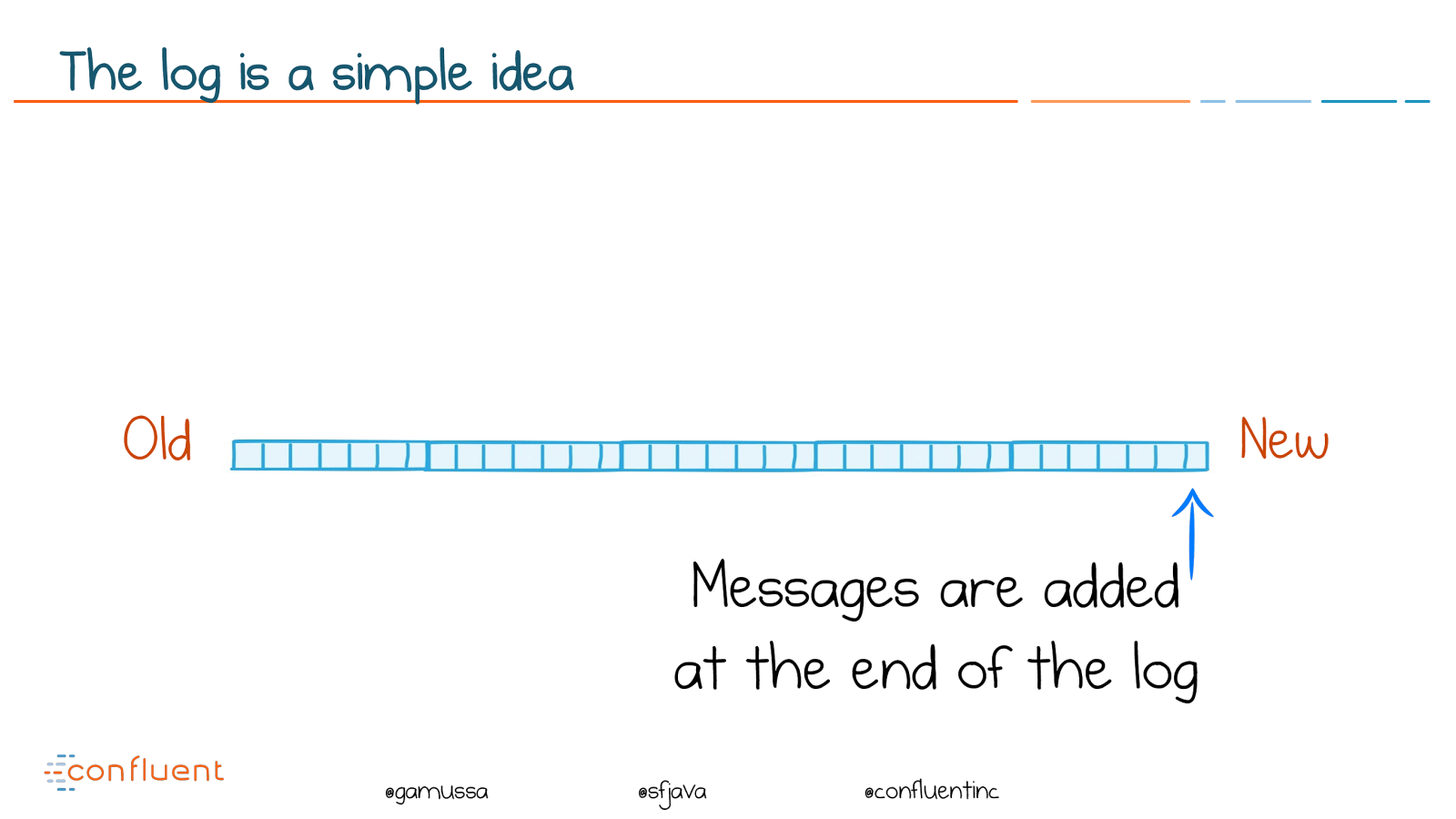

The log is a simple idea

Messages are added at the end of the log

Diagram labels: Old, New

Slide 32

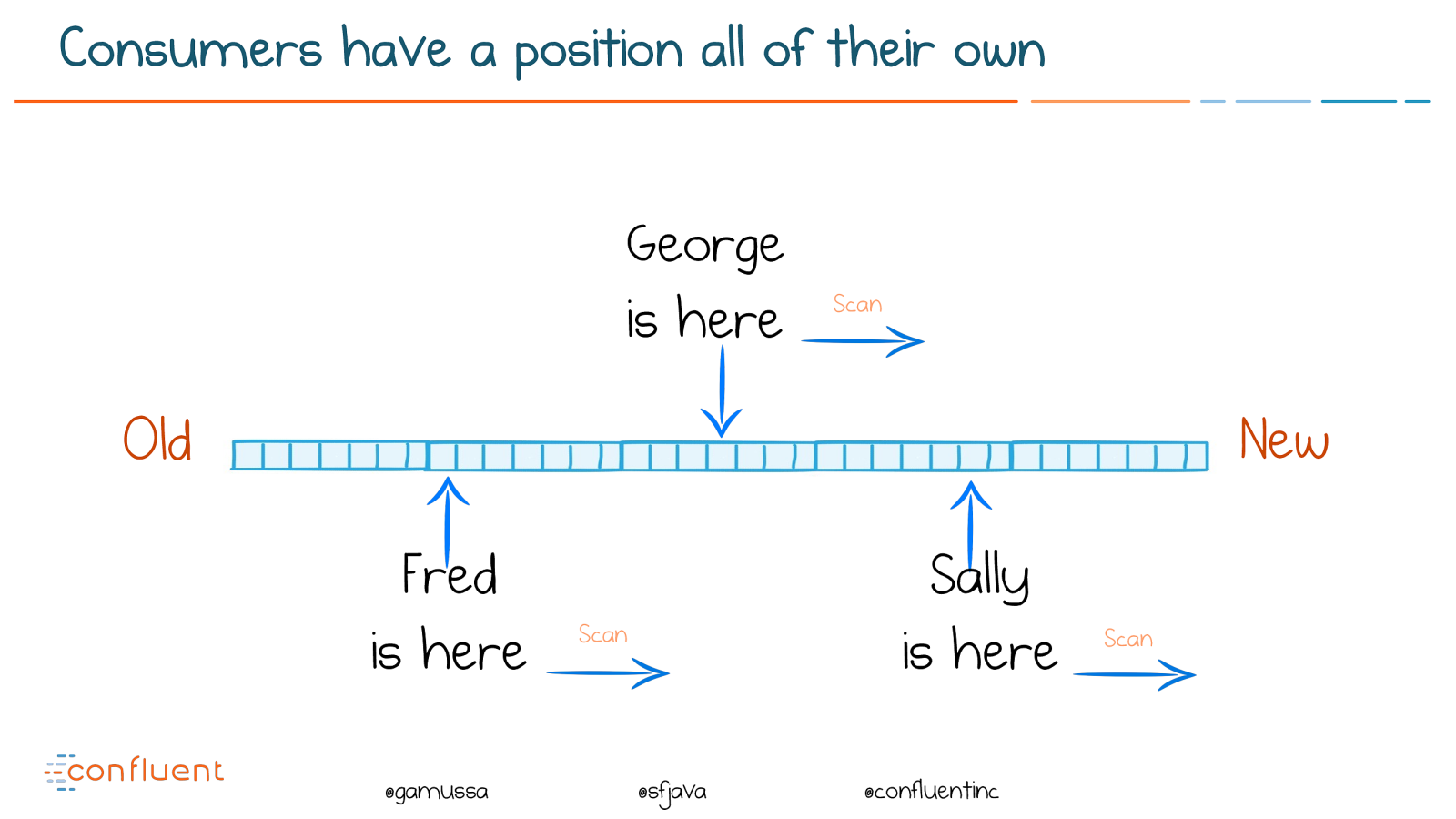

Consumers have a position all of their own

Diagram labels: Sally is here, George is here, Fred is here; Old, New; Scan

Slide 33

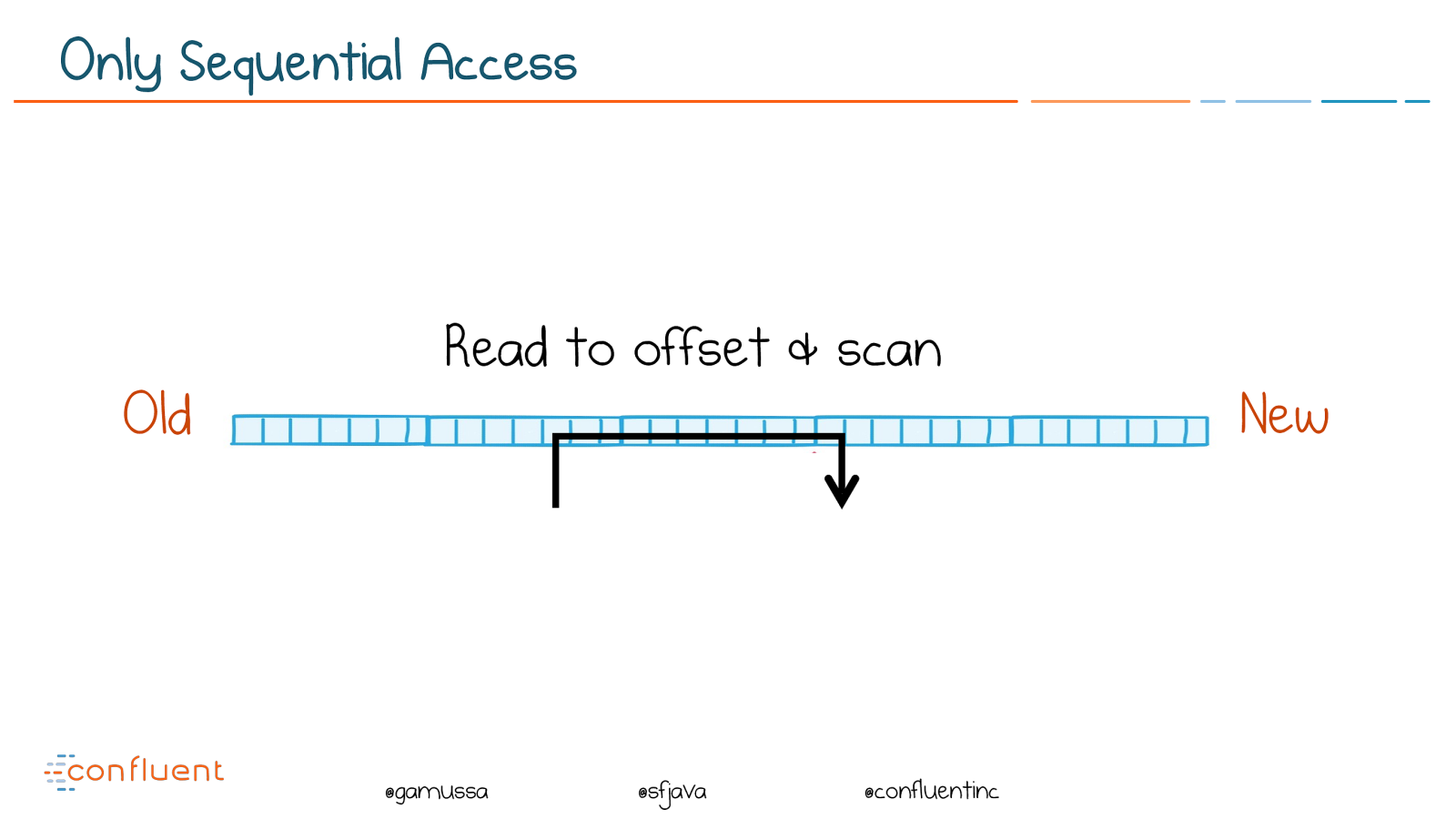

Only Sequential Access

Diagram labels: Old, New; Read to offset & scan
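The log idea from the last few slides (append at the end, every consumer keeps its own position, reads scan forward from an offset) can be sketched in a few lines of plain Java. This is a single-process illustration under assumptions, not Kafka's implementation: `LogSketch`, `append`, `poll`, and `seek` here are hypothetical names, and the consumer names are borrowed from the diagram.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// In-memory illustration of an append-only log with per-consumer positions.
class LogSketch {
    private final List<String> messages = new ArrayList<>();
    private final Map<String, Integer> positions = new HashMap<>();

    // New messages always go at the end; the returned offset is their index.
    int append(String message) {
        messages.add(message);
        return messages.size() - 1;
    }

    // Scans forward from the consumer's own position, returns at most
    // max messages, and advances only that consumer's position.
    List<String> poll(String consumer, int max) {
        int from = positions.getOrDefault(consumer, 0);
        int to = Math.min(messages.size(), from + max);
        List<String> batch = new ArrayList<>(messages.subList(from, to));
        positions.put(consumer, to);
        return batch;
    }

    // Moves a consumer to an arbitrary offset, for example to re-read old data.
    void seek(String consumer, int offset) {
        if (offset < 0 || offset > messages.size()) {
            throw new IllegalArgumentException("offset out of range: " + offset);
        }
        positions.put(consumer, offset);
    }

    public static void main(String[] args) {
        LogSketch log = new LogSketch();
        log.append("m0");
        log.append("m1");
        log.append("m2");
        System.out.println("Sally: " + log.poll("Sally", 2));
        System.out.println("Fred: " + log.poll("Fred", 1));
        System.out.println("Sally: " + log.poll("Sally", 2));
    }
}
```

Reading never removes anything from the log, so one consumer's progress has no effect on what another consumer sees.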

Slide 34

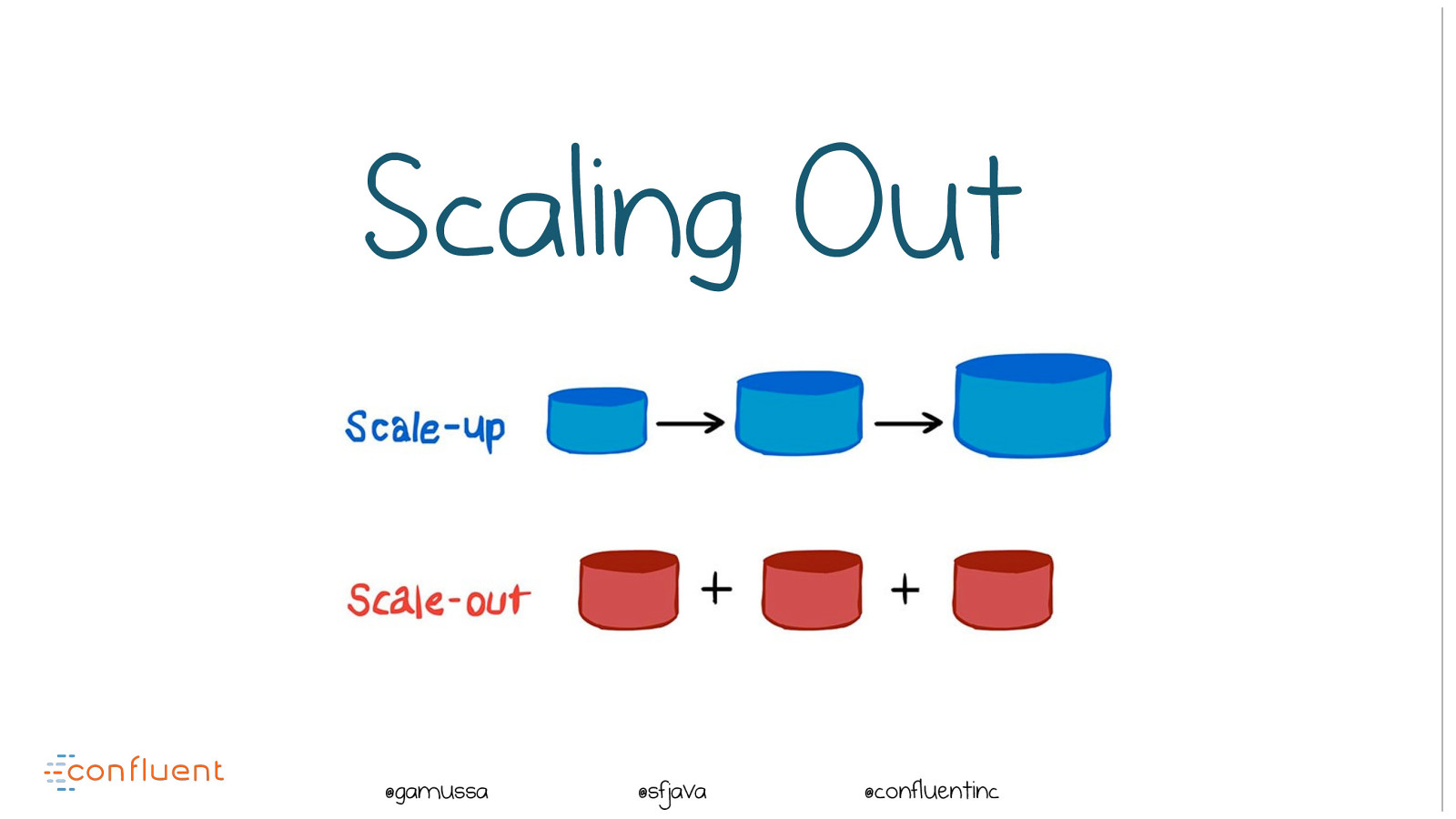

Scaling Out

Slide 35

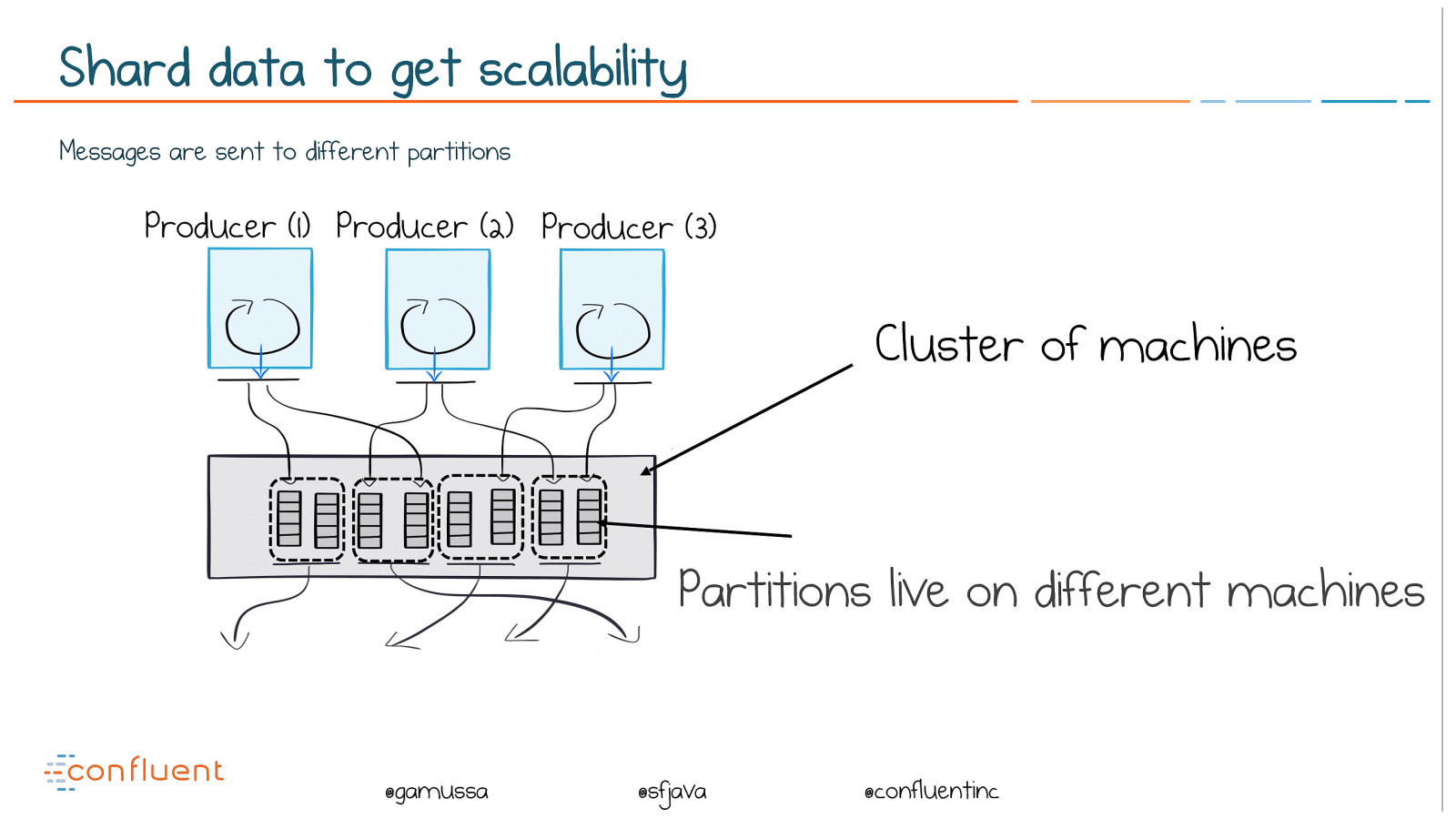

Shard data to get scalability

Messages are sent to different partitions

Partitions live on different machines

Diagram labels: Producer (1), Producer (2), Producer (3), Cluster of machines
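As a rough illustration of sharding by key, the sketch below routes each message to a partition chosen from a hash of its key, so that messages with the same key stay together and in order. It is a simplification under assumptions: `PartitionSketch` and its methods are hypothetical names, and `String.hashCode()` is used only for brevity; it is not the hash function Kafka's default partitioner uses.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// In-memory illustration of key-based partitioning of one topic.
class PartitionSketch {
    private final List<List<String>> partitions = new ArrayList<>();

    PartitionSketch(int numPartitions) {
        for (int i = 0; i < numPartitions; i++) {
            partitions.add(new ArrayList<String>());
        }
    }

    // Same key -> same partition. floorMod keeps the result non-negative
    // even when hashCode() is negative.
    int partitionFor(String key) {
        return Math.floorMod(key.hashCode(), partitions.size());
    }

    // Appends the value to the partition chosen for the key and returns
    // that partition's index.
    int send(String key, String value) {
        int p = partitionFor(key);
        partitions.get(p).add(value);
        return p;
    }

    // Read-only view of one partition's messages, in arrival order.
    List<String> read(int partition) {
        return Collections.unmodifiableList(partitions.get(partition));
    }

    public static void main(String[] args) {
        PartitionSketch topic = new PartitionSketch(3);
        int p = topic.send("card-1", "attempt-1");
        topic.send("card-1", "attempt-2");
        System.out.println("partition " + p + ": " + topic.read(p));
    }
}
```

Ordering is only preserved within a partition; messages with different keys may land on different machines and be read in any relative order.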

Slide 36

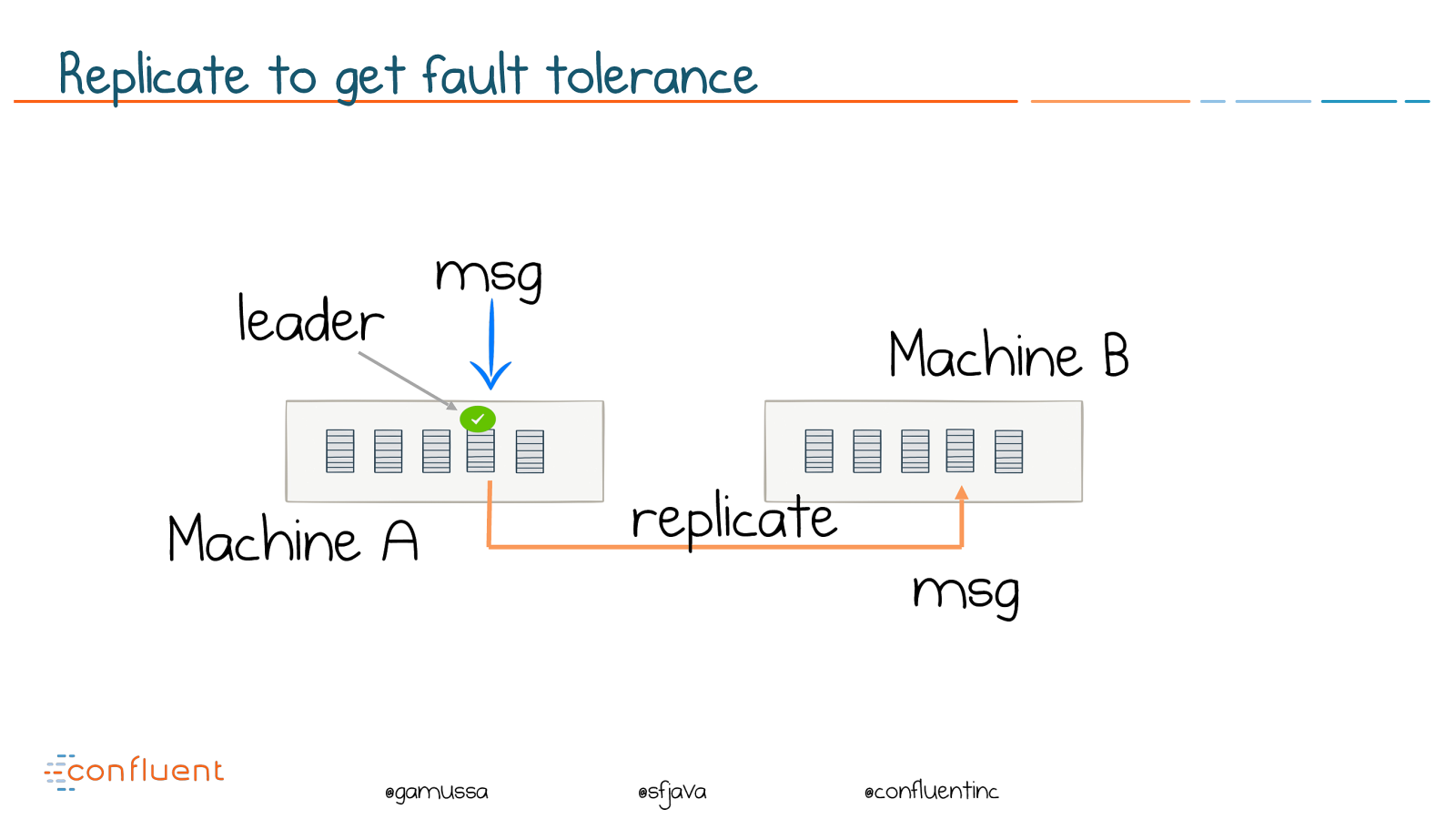

Replicate to get fault tolerance

Diagram labels: msg, replicate, leader, Machine A, Machine B

Slide 37

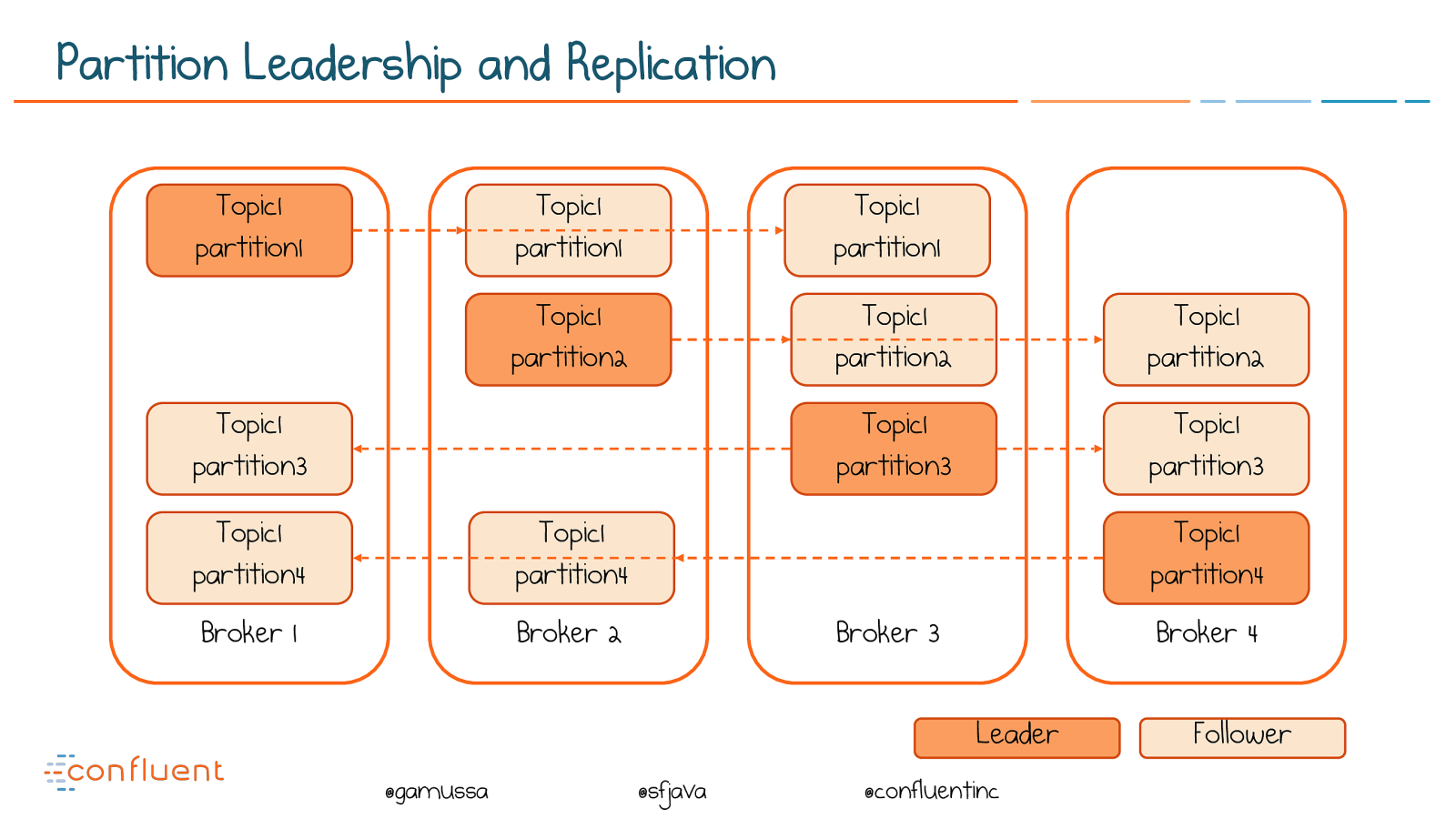

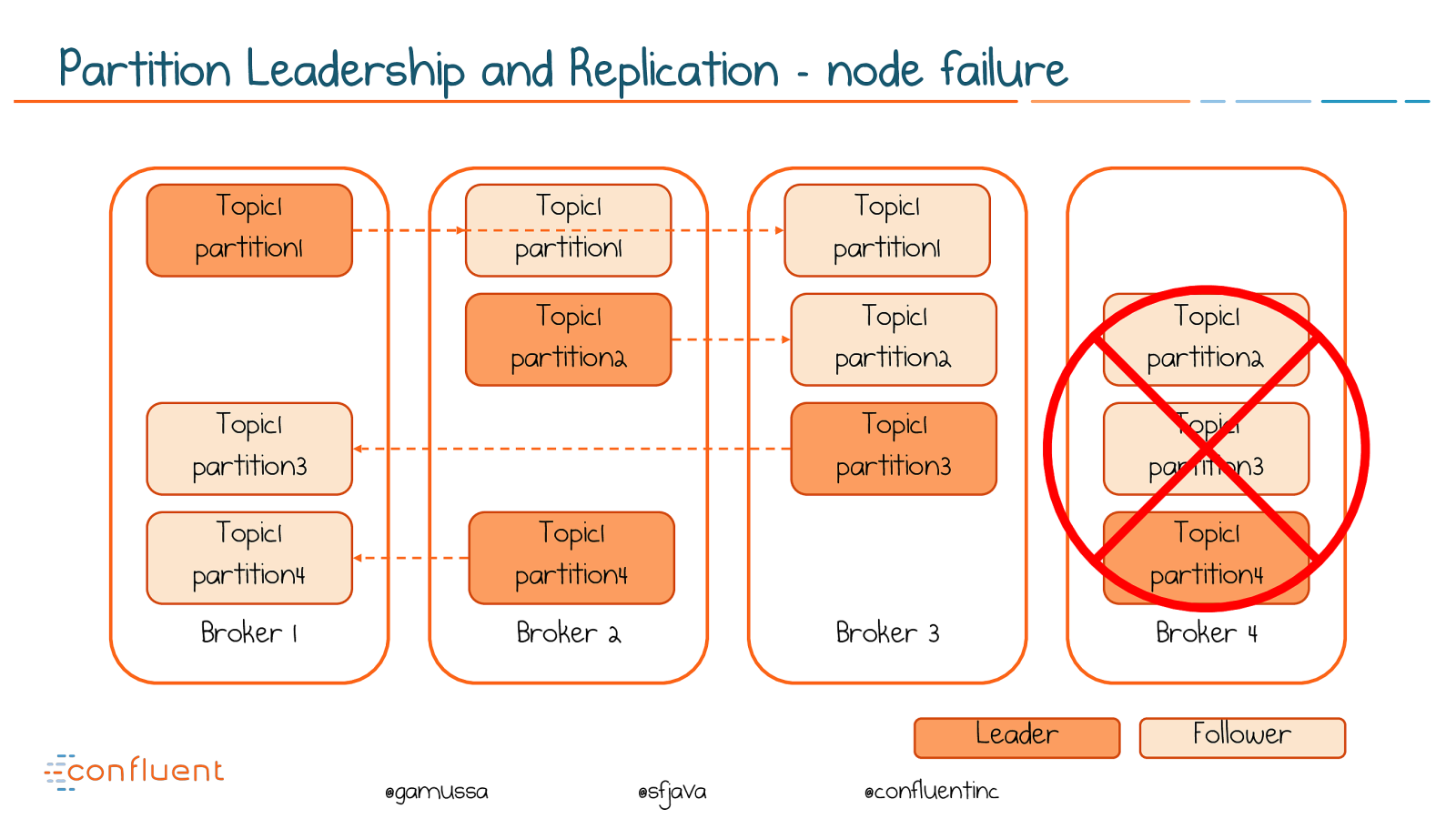

Partition Leadership and Replication

Diagram labels: Broker 1, Broker 2, Broker 3, Broker 4; Topic1 partition1 through partition4, each shown three times; Leader, Follower

Slide 38

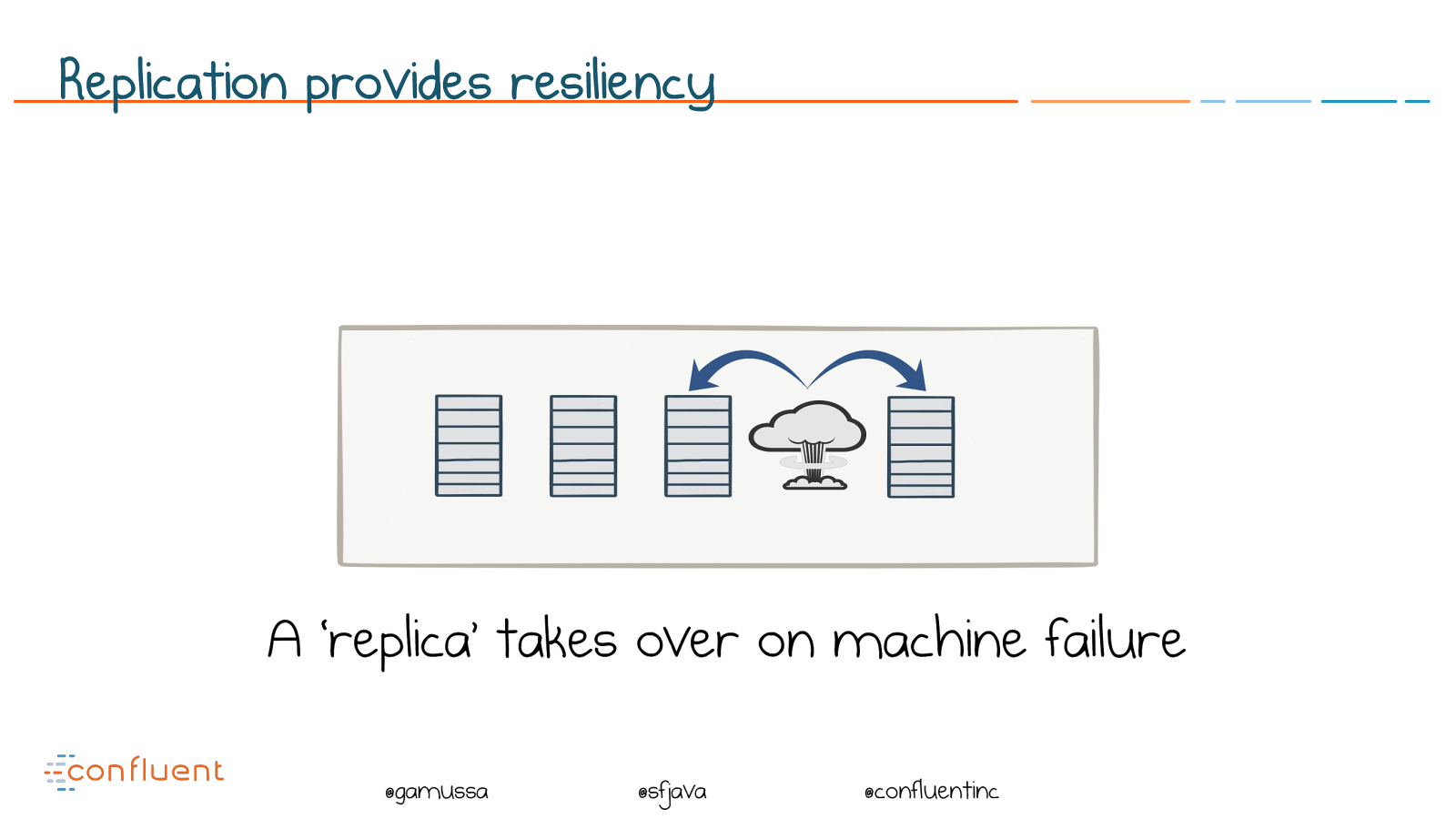

Replication provides resiliency

A ‘replica’ takes over on machine failure

Slide 39

Partition Leadership and Replication - node failure

Diagram labels: Broker 1, Broker 2, Broker 3, Broker 4; Topic1 partition1 through partition4, each shown three times; Leader, Follower
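The failover idea on this slide can be sketched as a toy model of one partition: it has an ordered list of replicas, and leadership falls to the first replica whose broker is still alive. This is a deliberately simplified sketch under assumptions, not Kafka's actual election protocol; `FailoverSketch` and its methods are hypothetical names, and the "first live replica wins" rule is chosen only for illustration.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Toy model of leadership for one partition; not Kafka's election protocol.
class FailoverSketch {
    private final List<Integer> replicas;   // broker ids, in preference order
    private final Set<Integer> liveBrokers; // brokers currently considered up

    FailoverSketch(List<Integer> replicas) {
        this.replicas = new ArrayList<>(replicas);
        this.liveBrokers = new HashSet<>(replicas);
    }

    // The first replica on a live broker leads; -1 means the partition
    // currently has no live replica at all.
    int leader() {
        for (Integer broker : replicas) {
            if (liveBrokers.contains(broker)) {
                return broker;
            }
        }
        return -1;
    }

    void fail(int broker) {
        liveBrokers.remove(Integer.valueOf(broker));
    }

    void recover(int broker) {
        if (replicas.contains(Integer.valueOf(broker))) {
            liveBrokers.add(broker);
        }
    }

    public static void main(String[] args) {
        List<Integer> replicaBrokers = new ArrayList<>();
        replicaBrokers.add(1);
        replicaBrokers.add(2);
        replicaBrokers.add(3);
        FailoverSketch partition1 = new FailoverSketch(replicaBrokers);
        System.out.println("leader: " + partition1.leader());
        partition1.fail(1);
        System.out.println("leader after broker 1 fails: " + partition1.leader());
    }
}
```

With three replicas, the partition in this model stays available until all three brokers that host it are down.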

Slide 40

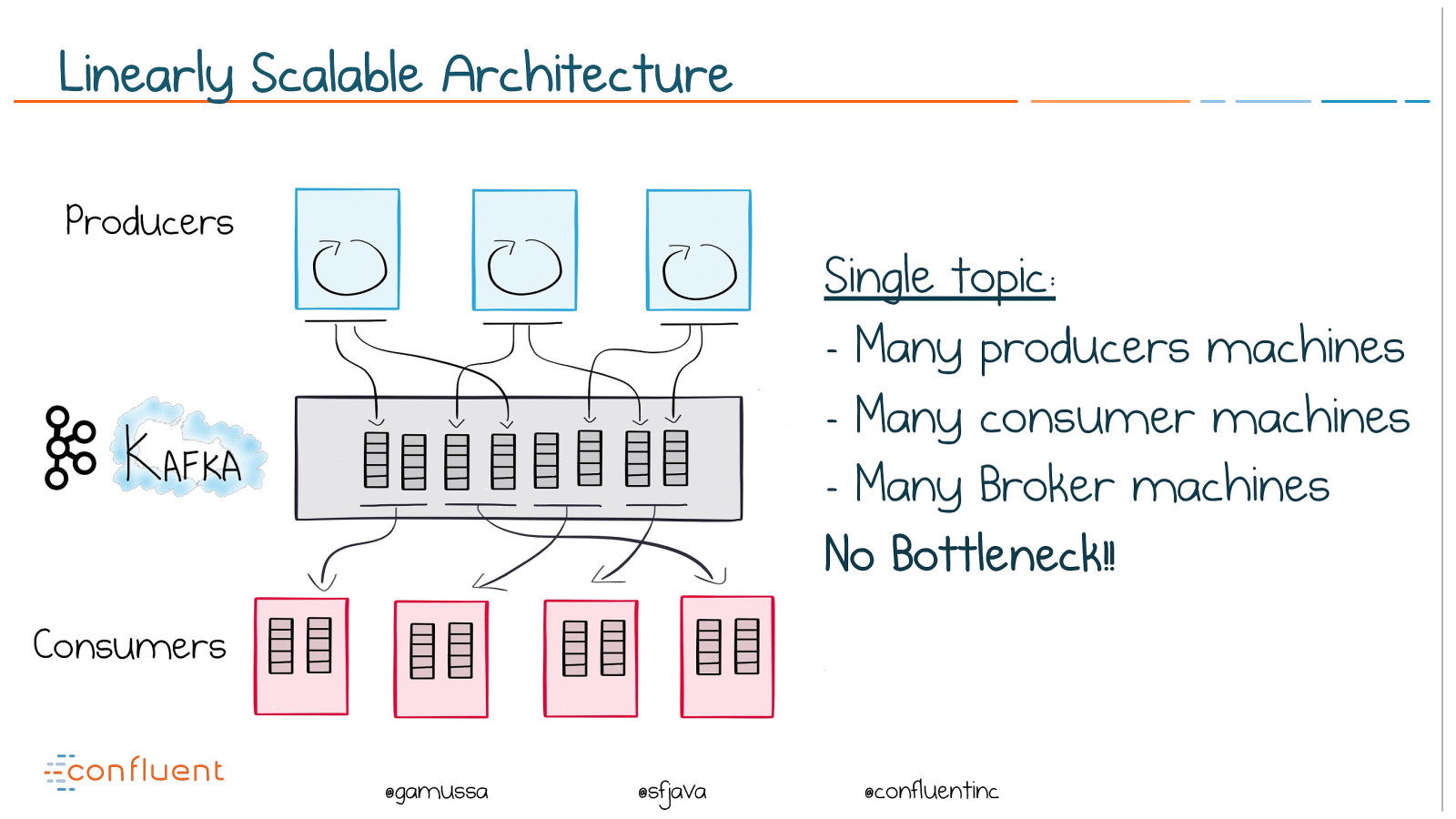

Linearly Scalable Architecture

Single topic:

- Many producer machines

- Many consumer machines

- Many broker machines

No Bottleneck!!

Diagram labels: Consumers, Producers

Slide 41

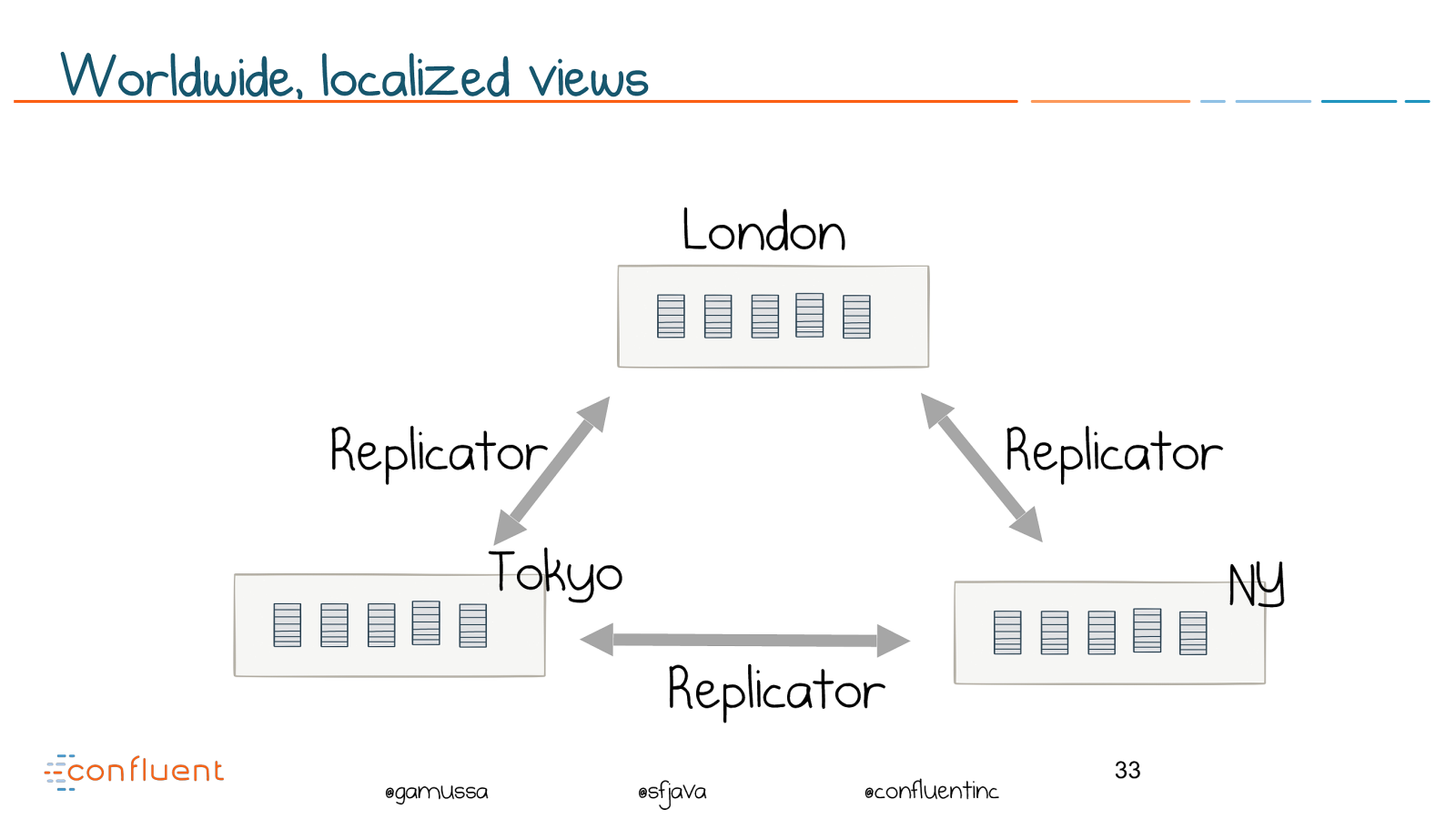

Worldwide, localized views

Diagram labels: NY, London, Tokyo; Replicator between sites

Slide 42

The Connect API

Slide 43

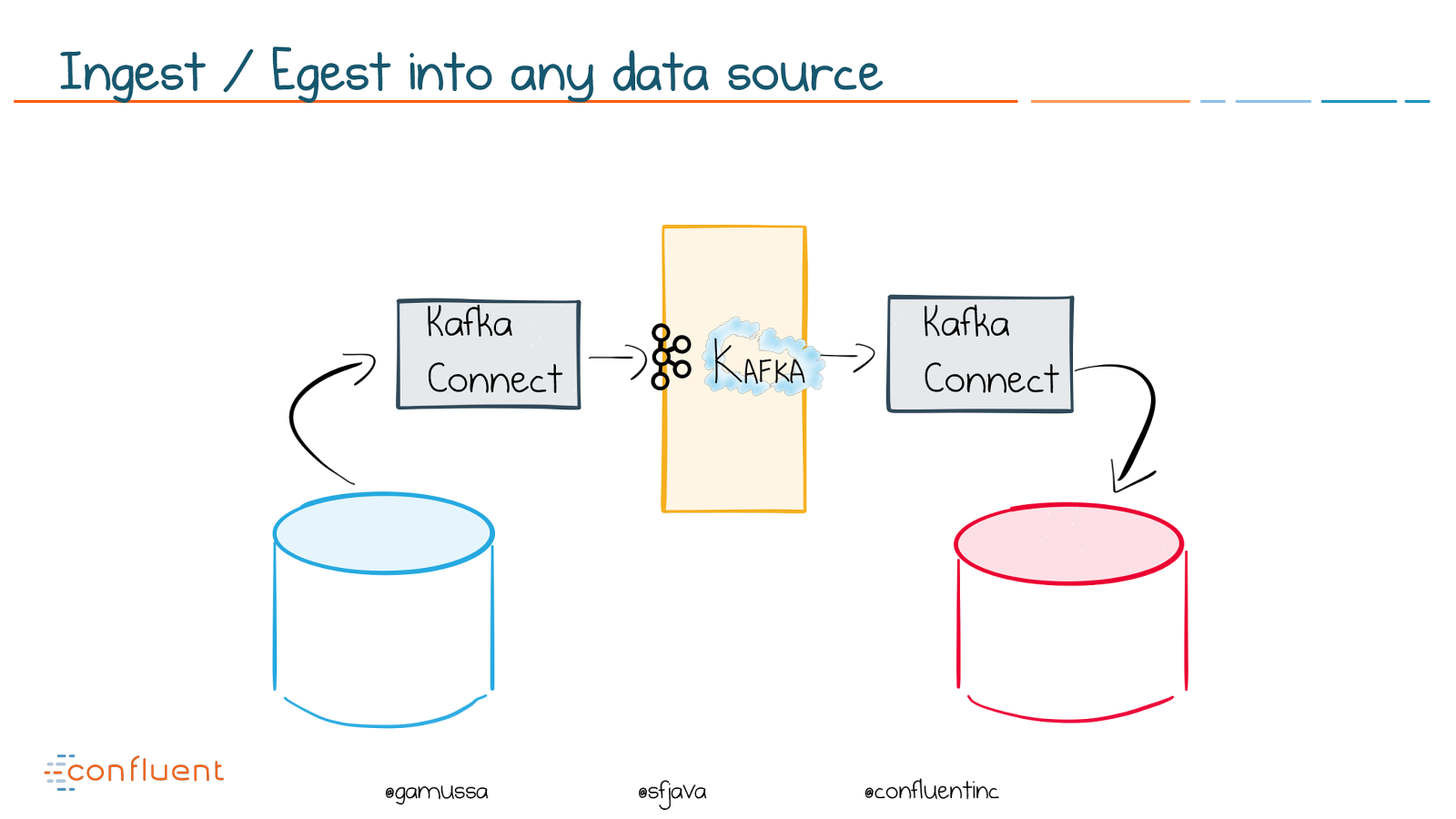

Ingest / Egest into any data source

Diagram labels: Kafka Connect (twice)

Slide 44

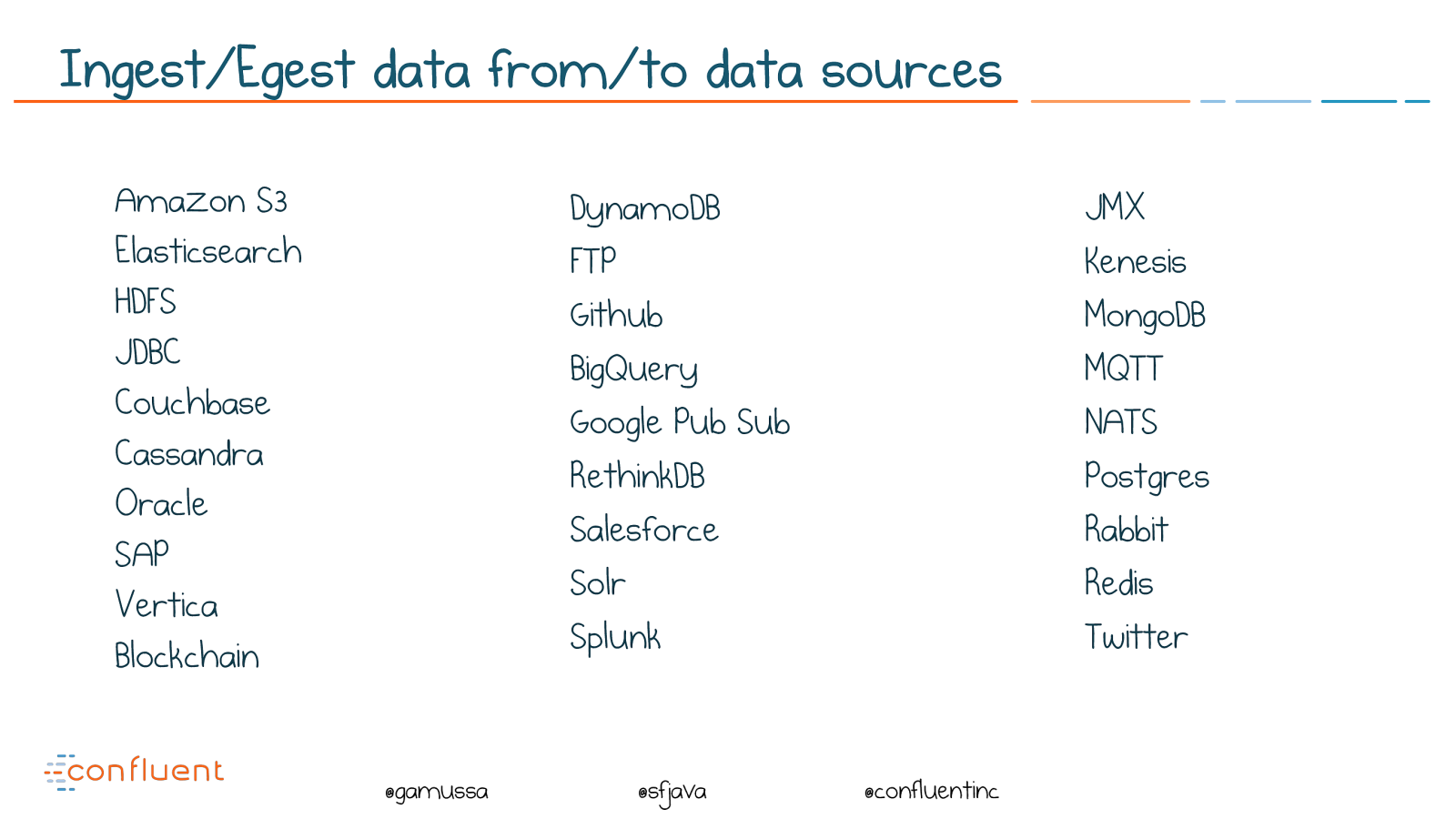

Ingest/Egest data from/to data sources

Amazon S3, Elasticsearch, HDFS, JDBC, Couchbase, Cassandra, Oracle, SAP, Vertica, Blockchain, JMX, Kinesis, MongoDB, MQTT, NATS, Postgres, RabbitMQ, Redis, Twitter, DynamoDB, FTP, GitHub, BigQuery, Google Pub Sub, RethinkDB, Salesforce, Solr, Splunk

Slide 45

Kafka Streams and KSQL

Slide 46

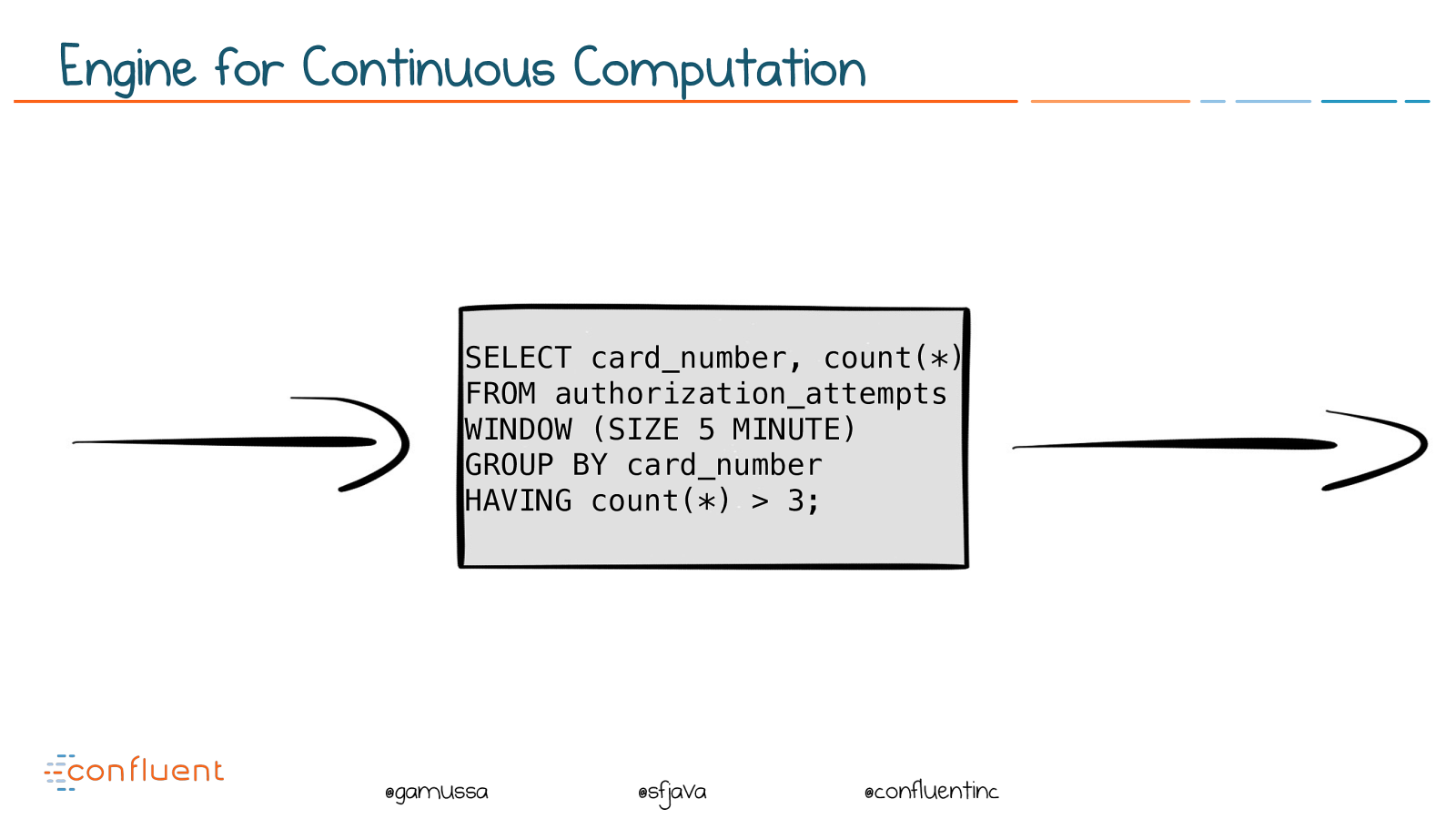

Engine for Continuous Computation

    SELECT card_number, count(*)
    FROM authorization_attempts
    WINDOW TUMBLING (SIZE 5 MINUTE)
    GROUP BY card_number
    HAVING count(*) > 3;

Slide 47

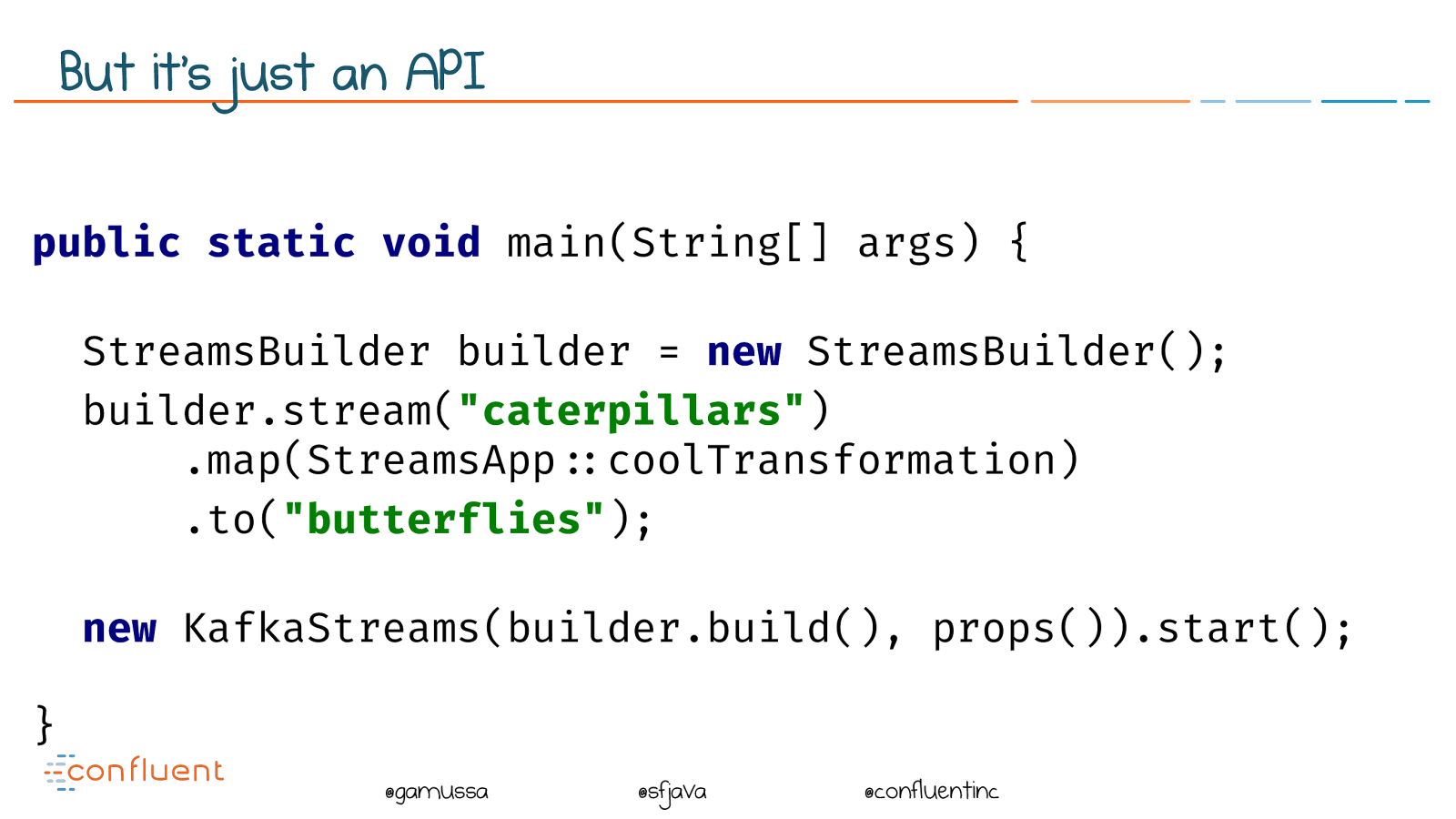

But it’s just an API

    public static void main(String[] args) {
        StreamsBuilder builder = new StreamsBuilder();
        builder.stream("caterpillars")
               .map(StreamsApp::coolTransformation)
               .to("butterflies");

        new KafkaStreams(builder.build(), props()).start();
    }

Slide 48

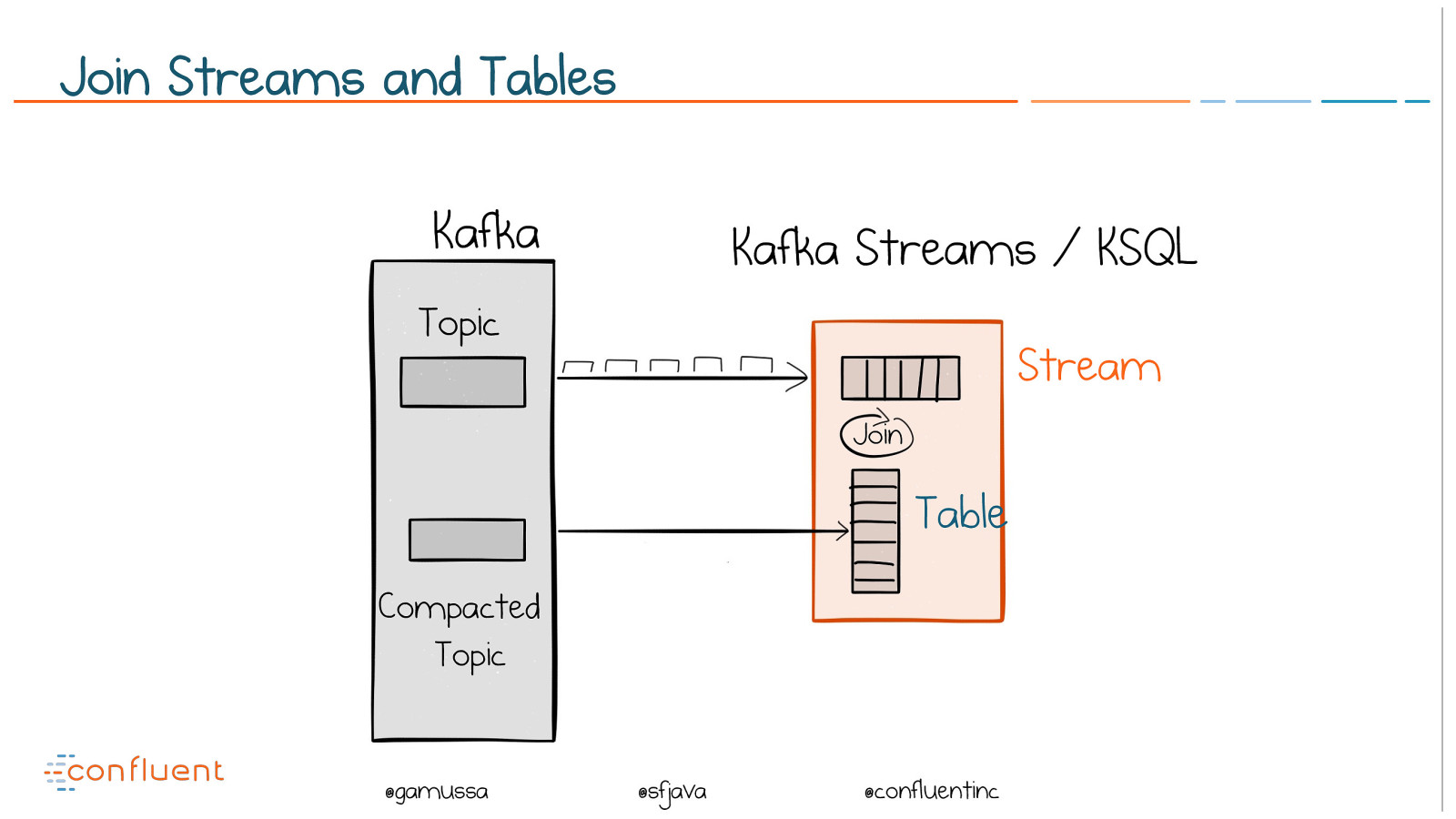

Join Streams and Tables

Diagram labels: Kafka, Topic, Compacted Topic; Kafka Streams / KSQL, Stream, Table, Join

Slide 49

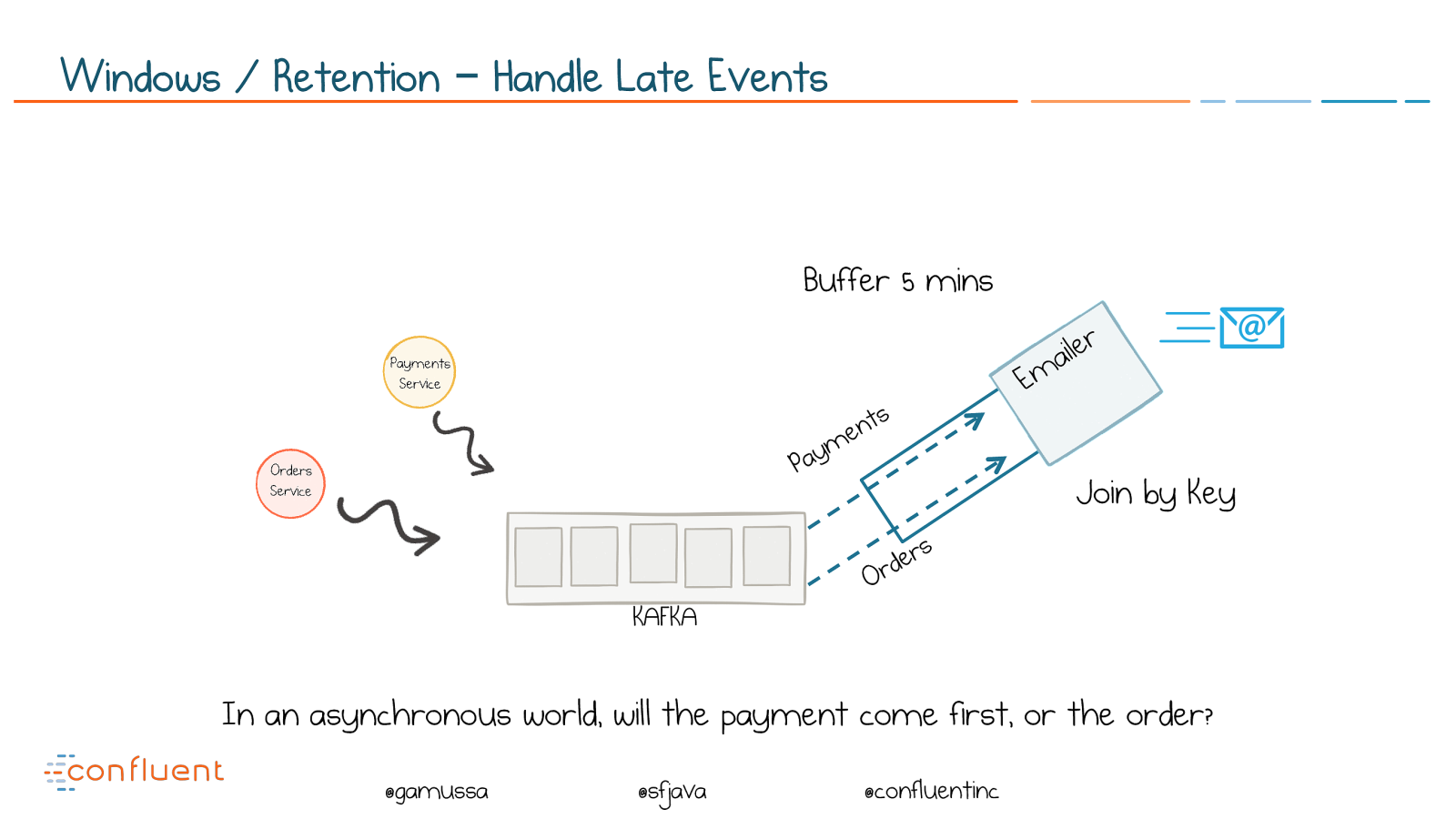

Windows / Retention – Handle Late Events

In an asynchronous world, will the payment come first, or the order?

Diagram labels: KAFKA, Payments, Orders, Buffer 5 mins, Join by Key, Emailer

Slide 50

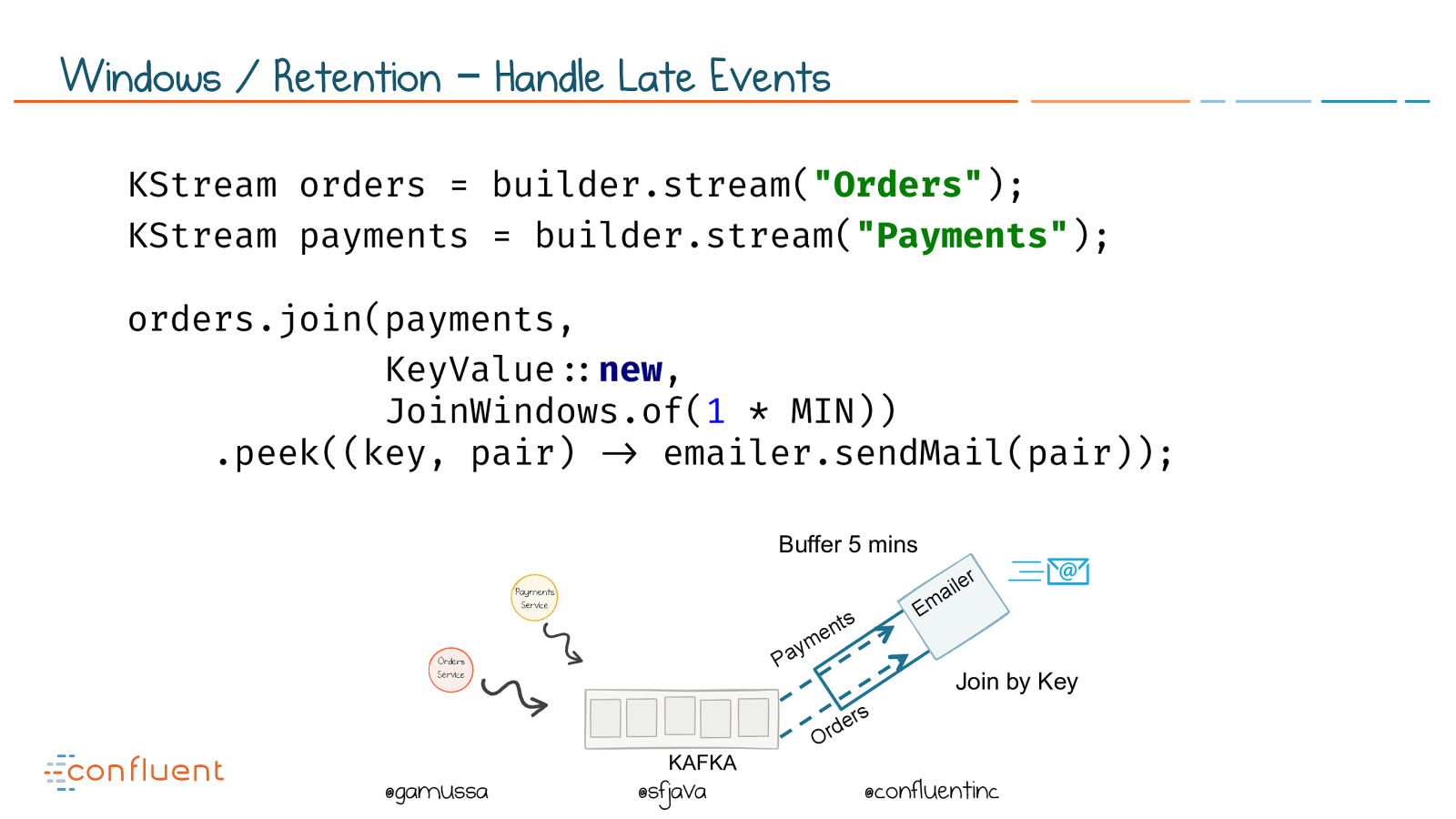

Windows / Retention – Handle Late Events

Diagram labels: KAFKA, Payments, Orders, Buffer 5 mins, Join by Key, Emailer

    KStream orders = builder.stream("Orders");
    KStream payments = builder.stream("Payments");
    orders.join(payments, KeyValue::new, JoinWindows.of(1 * MIN))
          .peek((key, pair) -> emailer.sendMail(pair));
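To show why the buffer makes arrival order irrelevant, here is a plain-Java sketch of a windowed join by key. It is an illustration under assumptions and not the Kafka Streams implementation: `WindowedJoinSketch`, `onOrder`, and `onPayment` are hypothetical names, values are plain strings, and the buffers are never evicted, whereas a real engine drops events once they fall outside its retention period.

```java
import java.util.ArrayList;
import java.util.List;

// In-memory illustration of joining two event streams by key within a time window.
class WindowedJoinSketch {
    private static final class Event {
        final String key;
        final String value;
        final long timestampMs;

        Event(String key, String value, long timestampMs) {
            this.key = key;
            this.value = value;
            this.timestampMs = timestampMs;
        }
    }

    private final long windowMs;
    private final List<Event> orders = new ArrayList<>();
    private final List<Event> payments = new ArrayList<>();

    WindowedJoinSketch(long windowMs) {
        this.windowMs = windowMs;
    }

    // Buffers the order and returns "order+payment" pairs for every
    // already-buffered payment with the same key inside the window.
    List<String> onOrder(String key, String value, long timestampMs) {
        Event order = new Event(key, value, timestampMs);
        orders.add(order);
        List<String> joined = new ArrayList<>();
        for (Event payment : payments) {
            if (matches(order, payment)) {
                joined.add(order.value + "+" + payment.value);
            }
        }
        return joined;
    }

    // Symmetric case: the payment arrives and is matched against buffered orders.
    List<String> onPayment(String key, String value, long timestampMs) {
        Event payment = new Event(key, value, timestampMs);
        payments.add(payment);
        List<String> joined = new ArrayList<>();
        for (Event order : orders) {
            if (matches(order, payment)) {
                joined.add(order.value + "+" + payment.value);
            }
        }
        return joined;
    }

    private boolean matches(Event a, Event b) {
        return a.key.equals(b.key)
                && Math.abs(a.timestampMs - b.timestampMs) <= windowMs;
    }

    public static void main(String[] args) {
        WindowedJoinSketch join = new WindowedJoinSketch(60_000L);
        // The payment arrives first; the pair is emitted once the order shows up.
        System.out.println(join.onPayment("order-1", "payment", 1_000L));
        System.out.println(join.onOrder("order-1", "order", 2_000L));
    }
}
```

Whichever side arrives second triggers the output, so the emailer in the diagram would receive the pair regardless of whether the payment or the order came first.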

Slide 51

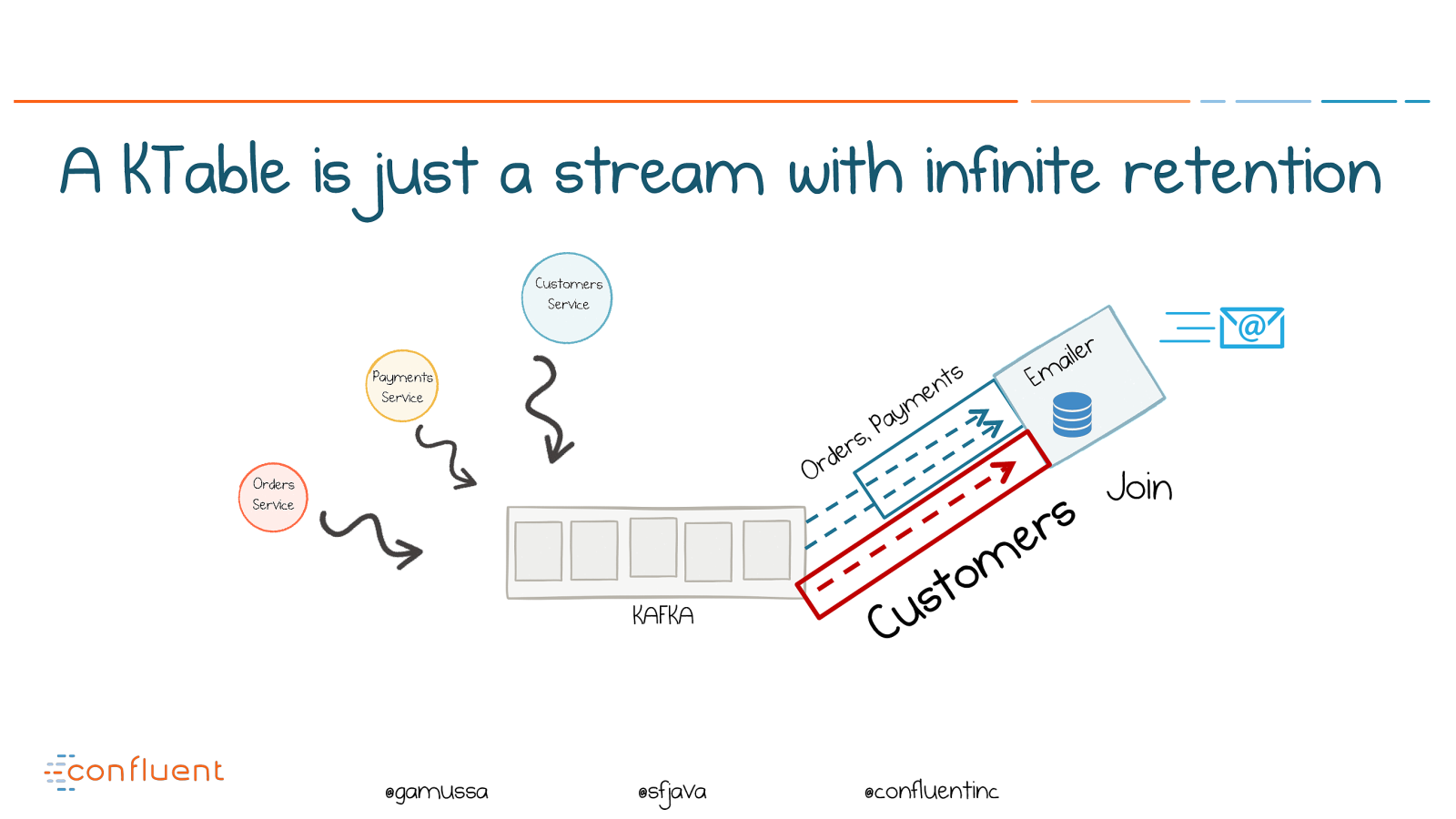

A KTable is just a stream with infinite retention

Diagram labels: KAFKA, Orders, Payments, Customers, Join, Emailer

Slide 52

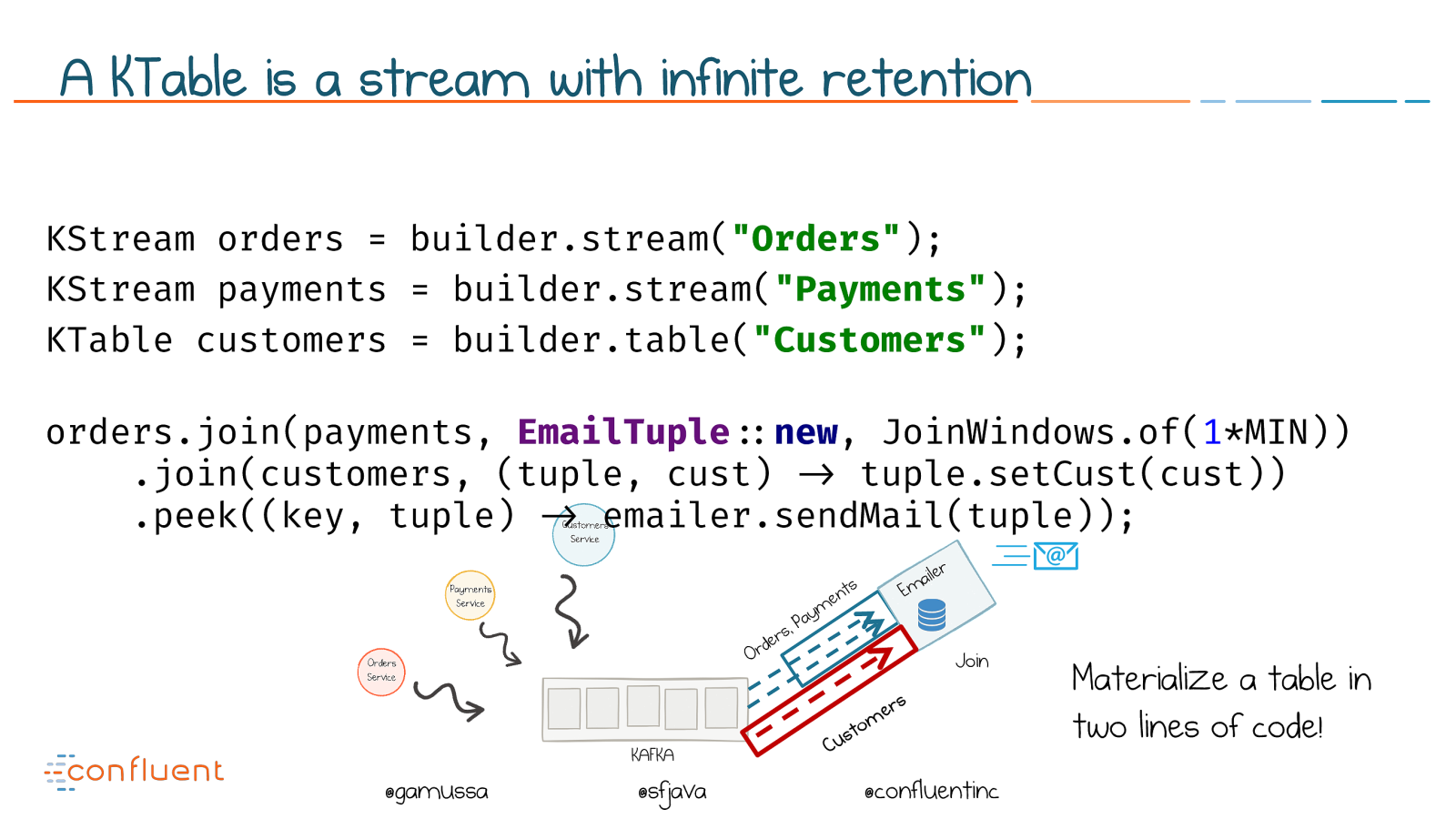

A KTable is a stream with infinite retention

Materialize a table in two lines of code!

Diagram labels: KAFKA, Orders, Payments, Customers, Join, Emailer

    KStream orders = builder.stream("Orders");
    KStream payments = builder.stream("Payments");
    KTable customers = builder.table("Customers");
    orders.join(payments, EmailTuple::new, JoinWindows.of(1 * MIN))
          .join(customers, (tuple, cust) -> tuple.setCust(cust))
          .peek((key, tuple) -> emailer.sendMail(tuple));
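One way to picture a table as a stream with infinite retention is a map that keeps the latest value for every key ever seen. The sketch below is a plain-Java illustration under assumptions, not the KTable implementation: `TableSketch`, `apply`, `lookup`, and `enrich` are hypothetical names, and treating a null value as a delete is modeled on the tombstone convention used by compacted topics.

```java
import java.util.HashMap;
import java.util.Map;

// In-memory illustration of materializing a table from a stream of updates.
class TableSketch {
    private final Map<String, String> state = new HashMap<>();

    // Applies one update: the newest value per key wins, and a null
    // value deletes the key.
    void apply(String key, String value) {
        if (value == null) {
            state.remove(key);
        } else {
            state.put(key, value);
        }
    }

    // Current value for the key, or null if the key is absent.
    String lookup(String key) {
        return state.get(key);
    }

    // Stream-table join for one stream event: enrich the order with the
    // current customer value, or return null when no customer is known.
    String enrich(String customerId, String order) {
        String customer = state.get(customerId);
        return customer == null ? null : order + " for " + customer;
    }

    public static void main(String[] args) {
        TableSketch customers = new TableSketch();
        customers.apply("c1", "Ann");
        customers.apply("c1", "Ann B."); // a later update replaces the earlier one
        System.out.println(customers.enrich("c1", "order-7"));
    }
}
```

Unlike the windowed join, a lookup against the table has no time bound: a customer record written long ago is still available when a new order arrives.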

Slide 53

Kafka is a complete Streaming Platform

Slide 54


Slide 55

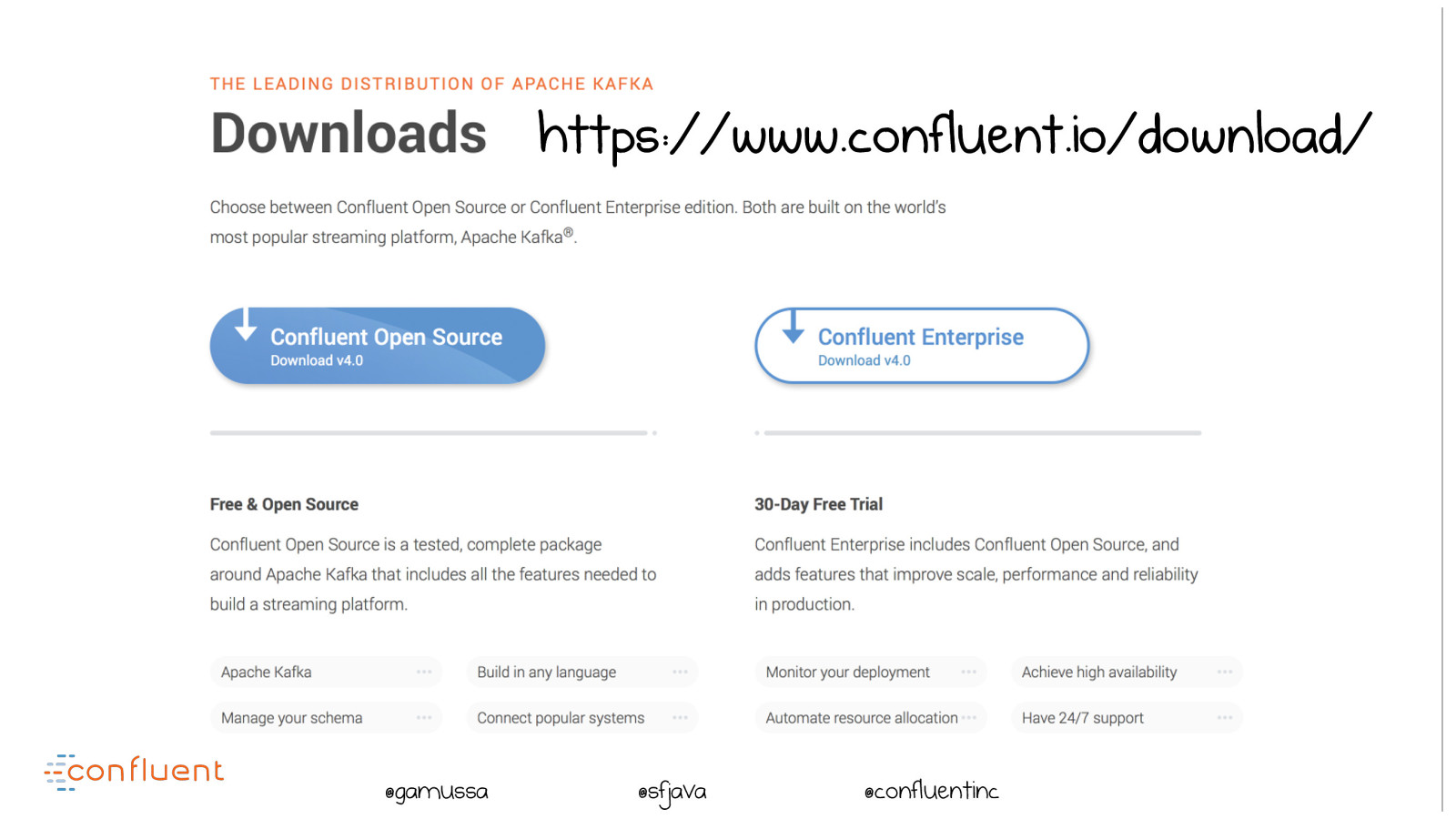

https://www.confluent.io/download/

Slide 56

We are hiring! https://www.confluent.io/careers/

Slide 57

One more thing …

Slide 58


Slide 59


Slide 60


Slide 61


Slide 62


Slide 63

A Major New Paradigm

Slide 64

Thanks! Stay for #javapuzzlersng!!!

@gamussa

viktor@confluent.io

We are hiring! https://www.confluent.io/careers/