Apache Kafka and KSQL in Action: Let’s Build a Streaming Data Pipeline!

A presentation at Jfokus in Stockholm, Sweden by Viktor Gamov

Apache Kafka and KSQL in Action: Let’s Build a Streaming Data Pipeline! @gamussa #Jfokus @confluentinc

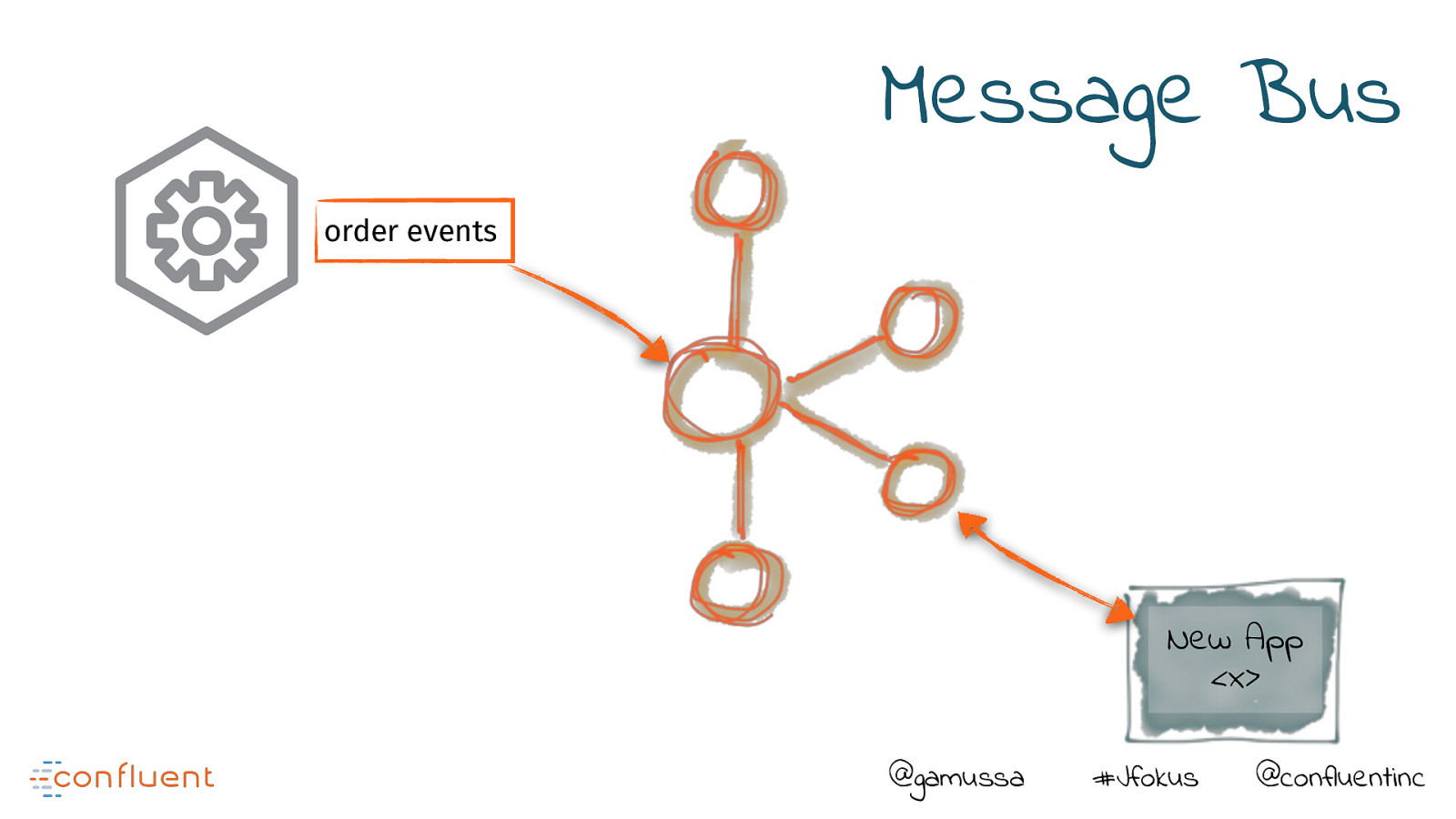

Message Bus order events New App <x> @gamussa #Jfokus @confluentinc

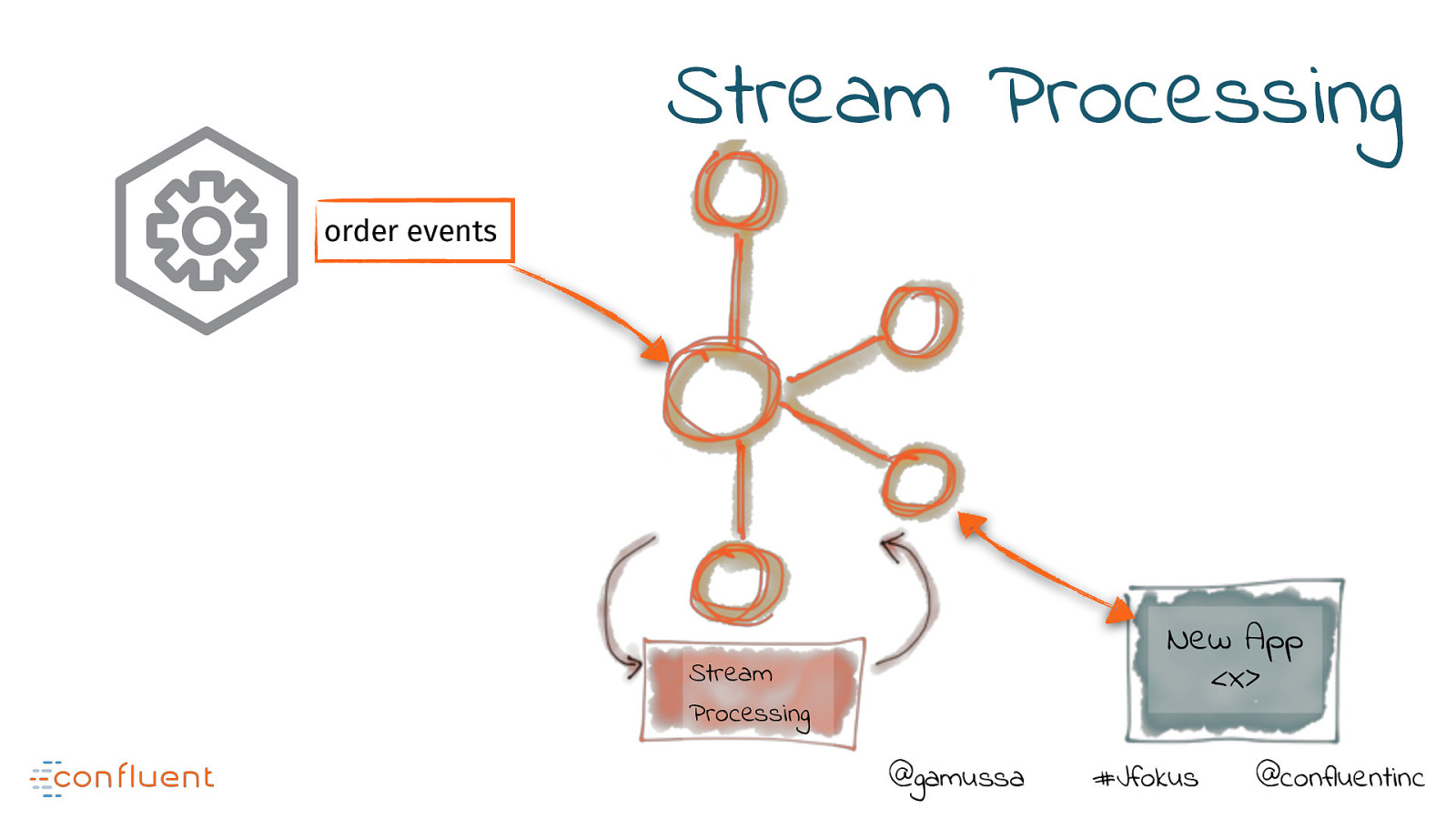

Stream Processing order events New App <x> Stream Processing @gamussa #Jfokus @confluentinc

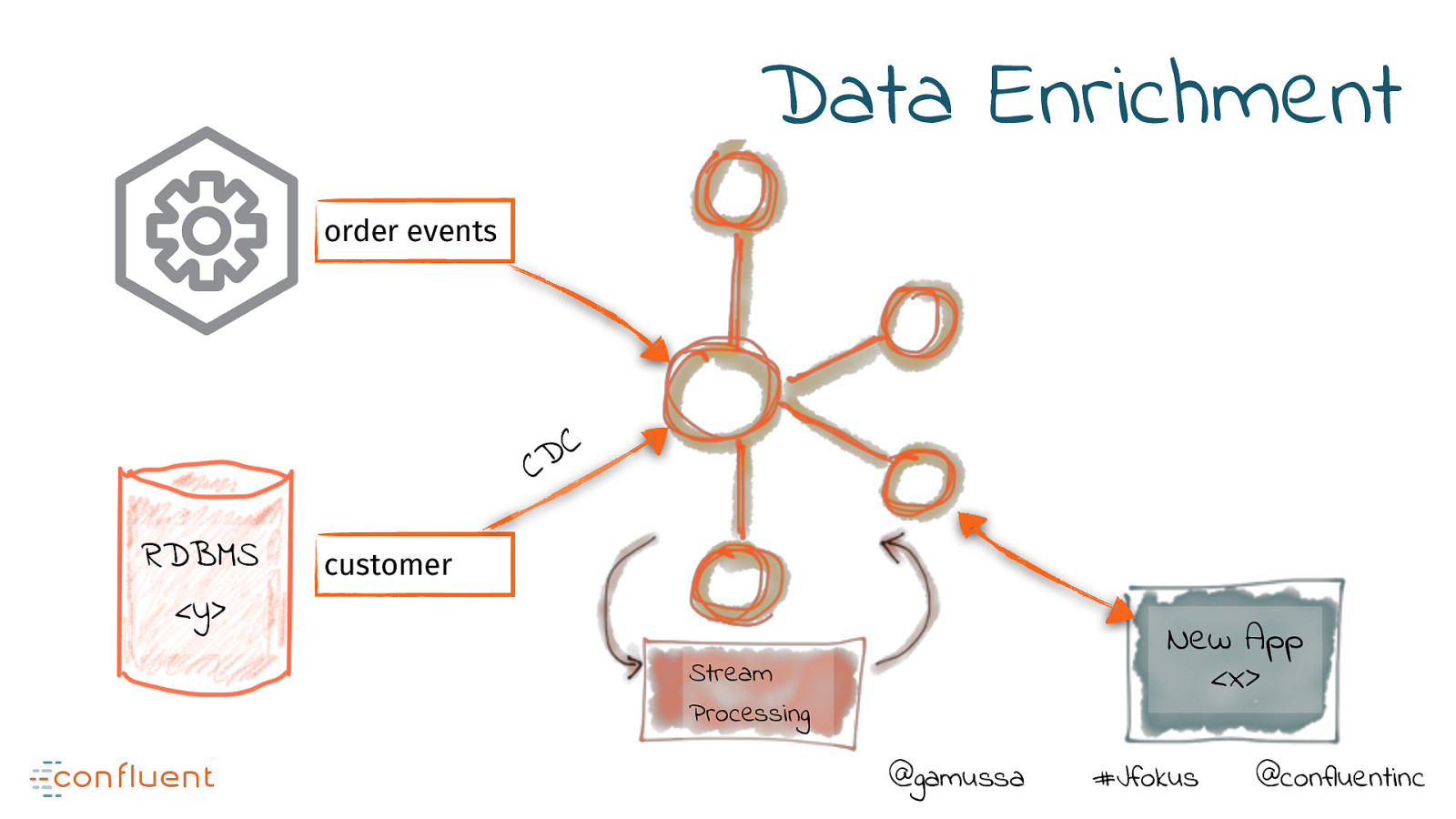

Data Enrichment order events C D C RDBMS <y> customer New App <x> Stream Processing @gamussa #Jfokus @confluentinc

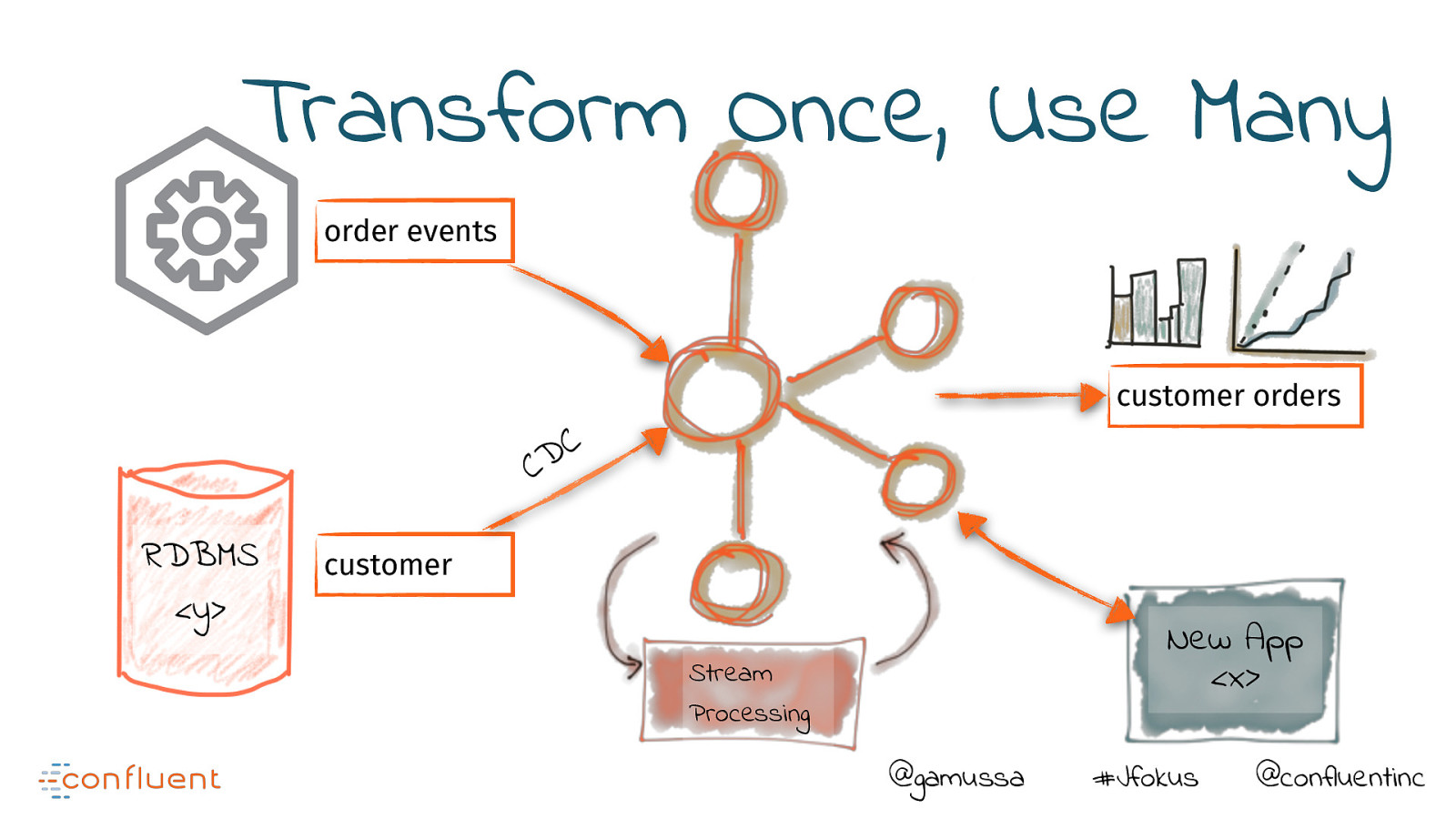

Transform Once, Use Many order events customer orders C D C RDBMS <y> customer New App <x> Stream Processing @gamussa #Jfokus @confluentinc

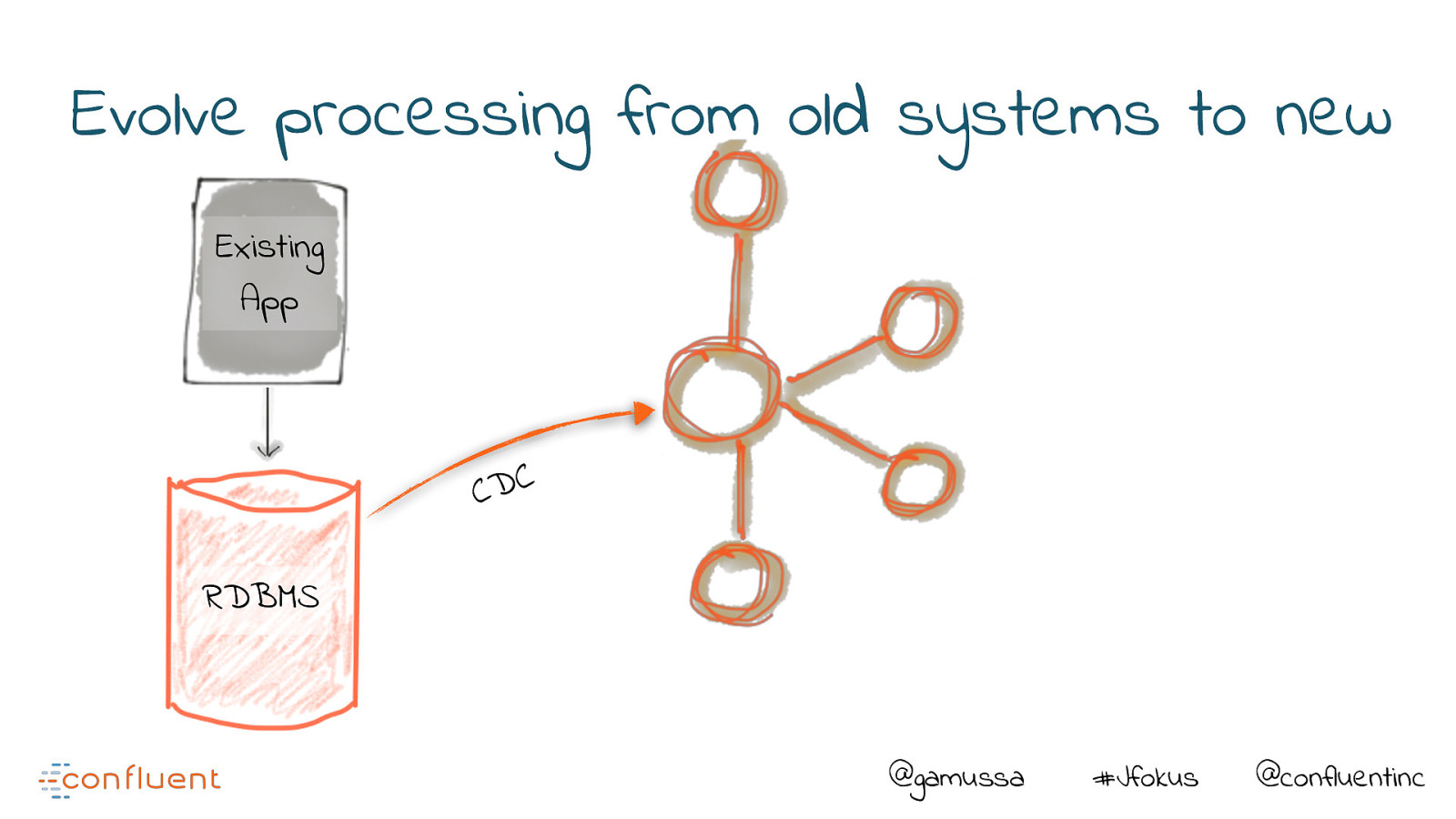

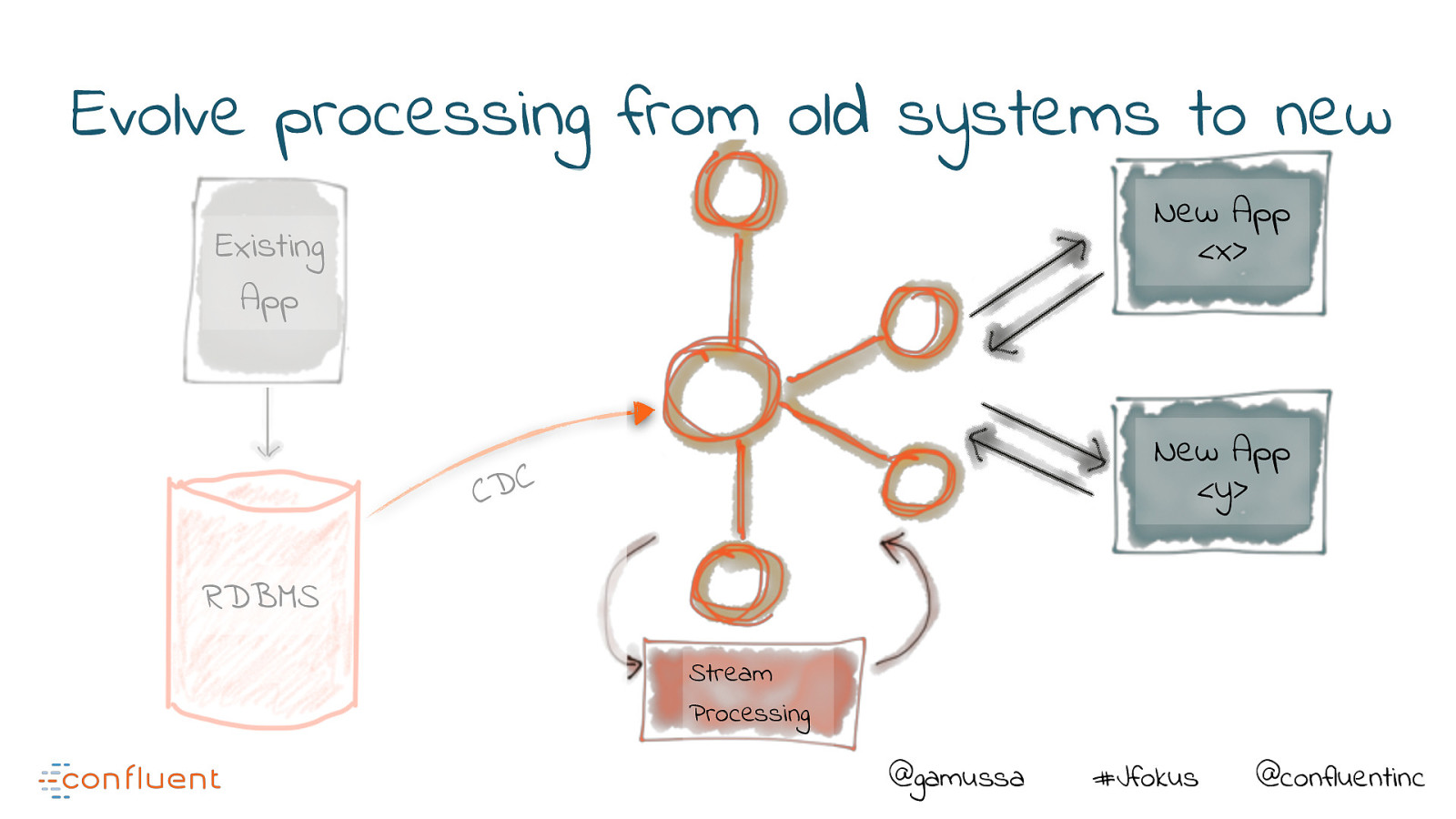

Evolve processing from old systems to new Existing App C D C RDBMS @gamussa #Jfokus @confluentinc

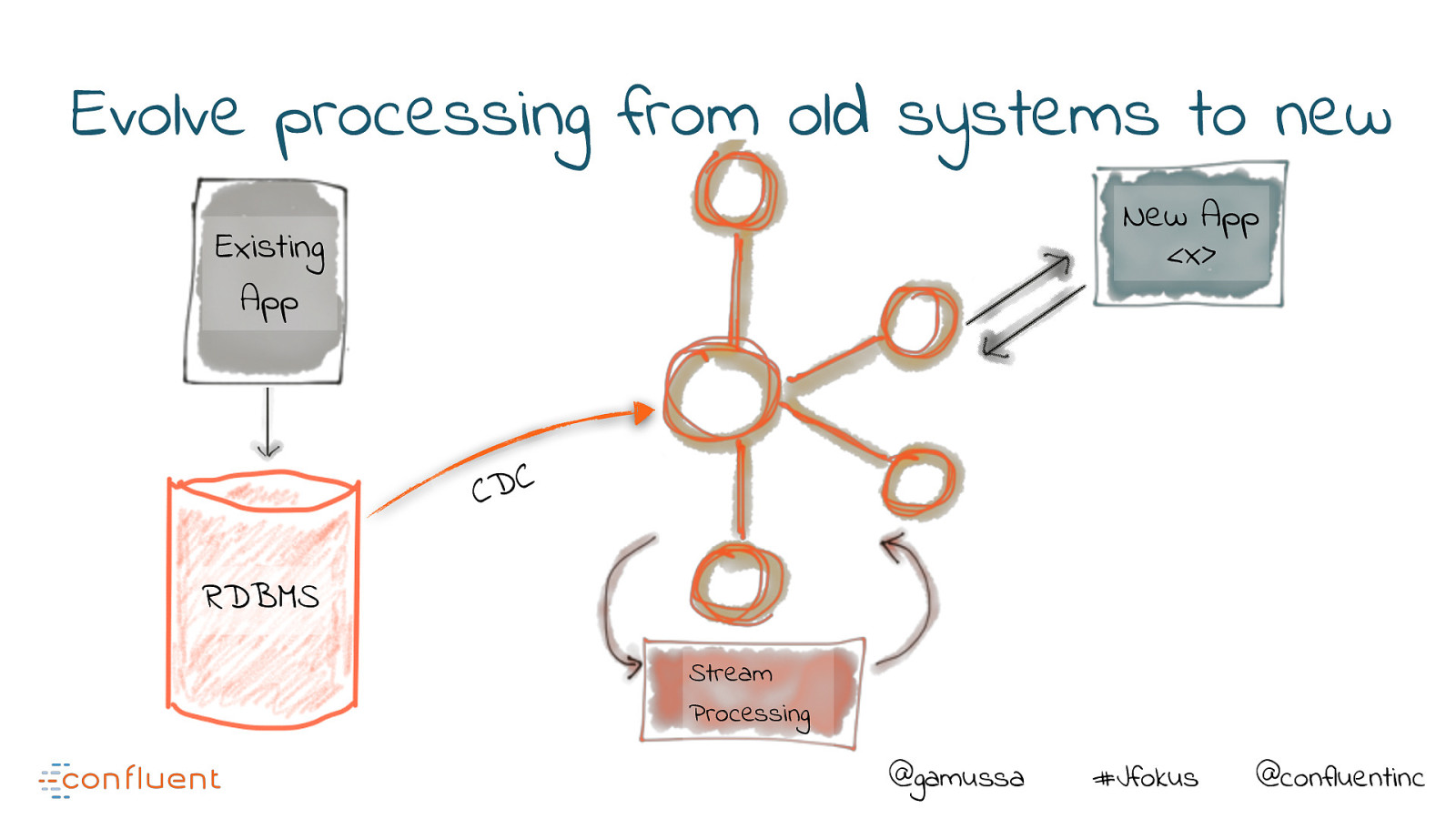

Evolve processing from old systems to new New App <x> Existing App C D C RDBMS Stream Processing @gamussa #Jfokus @confluentinc

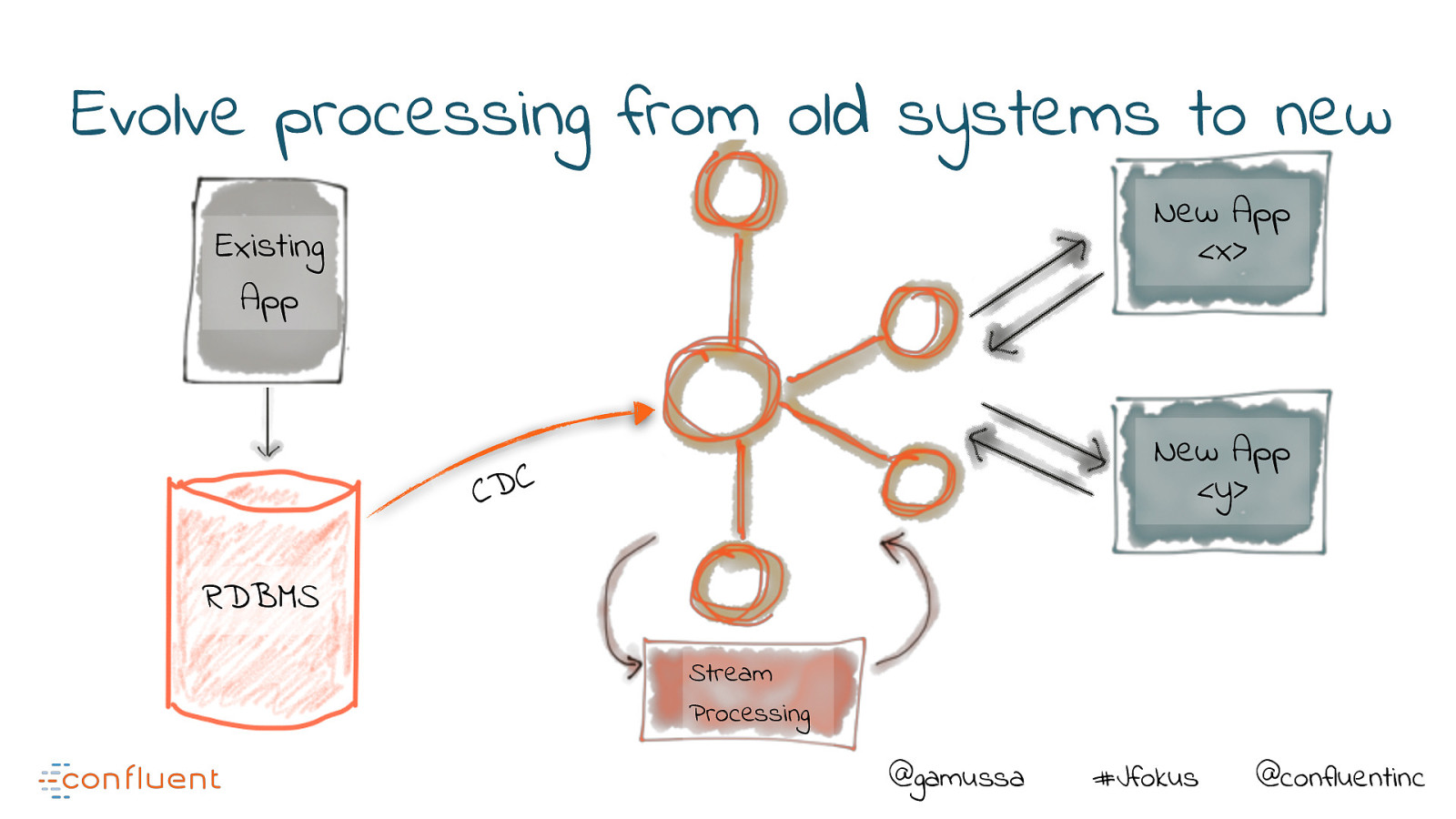

Evolve processing from old systems to new New App <x> Existing App New App <y> C D C RDBMS Stream Processing @gamussa #Jfokus @confluentinc

Evolve processing from old systems to new New App <x> Existing App New App <y> C D C RDBMS Stream Processing @gamussa #Jfokus @confluentinc

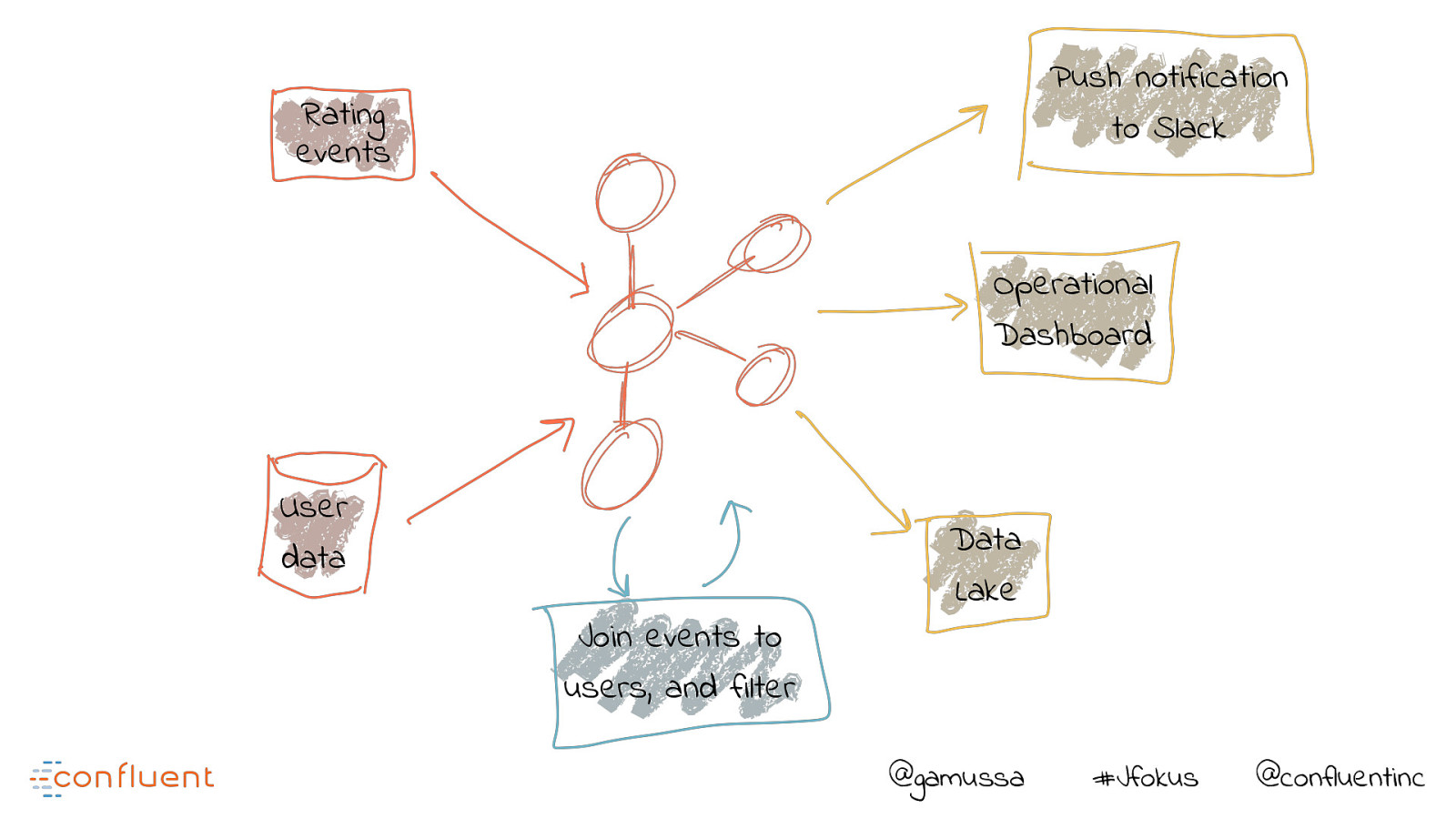

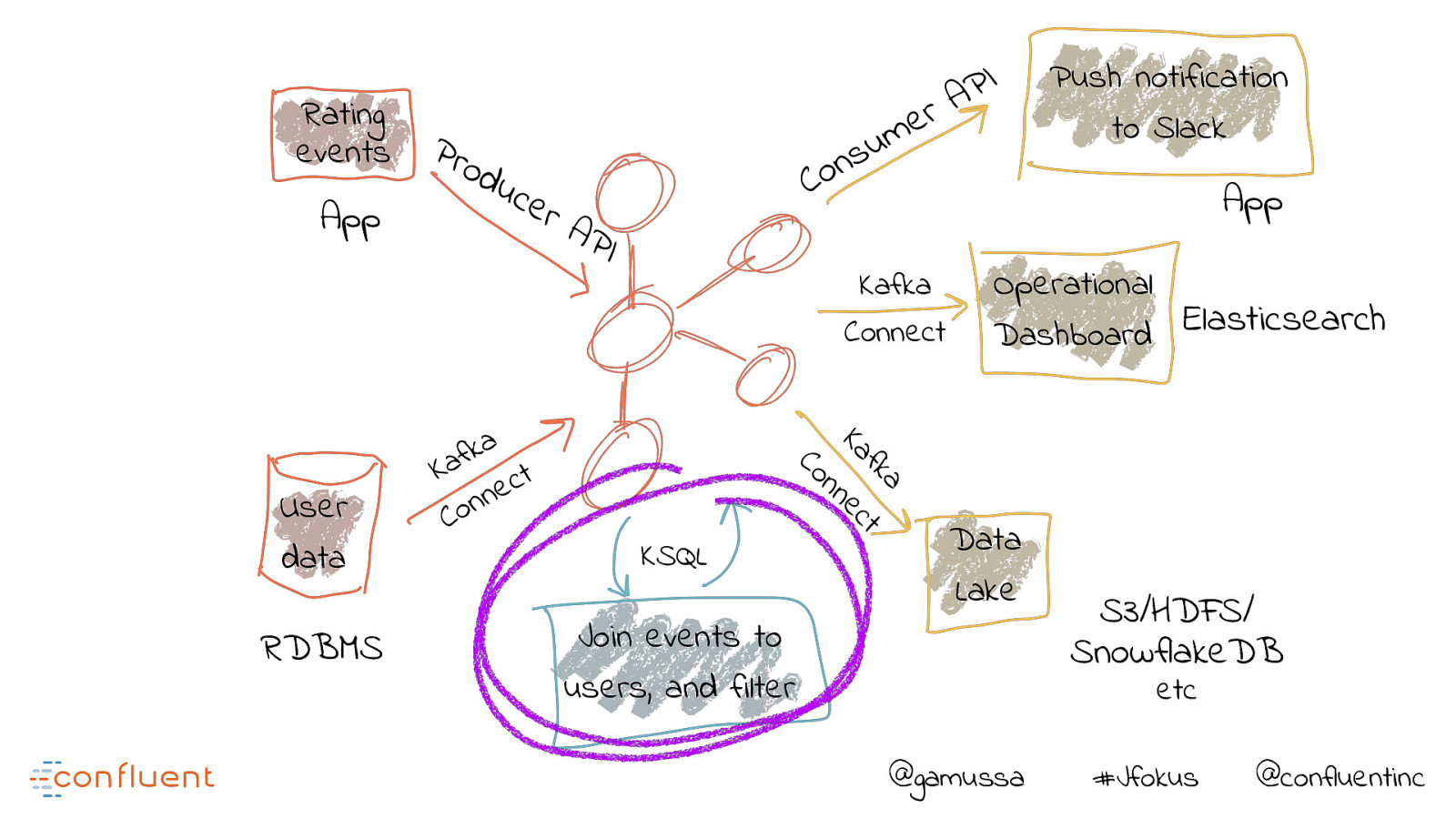

Push notification to Slack Rating events Operational Dashboard User data Join events to users, and filter Data Lake @gamussa #Jfokus @confluentinc

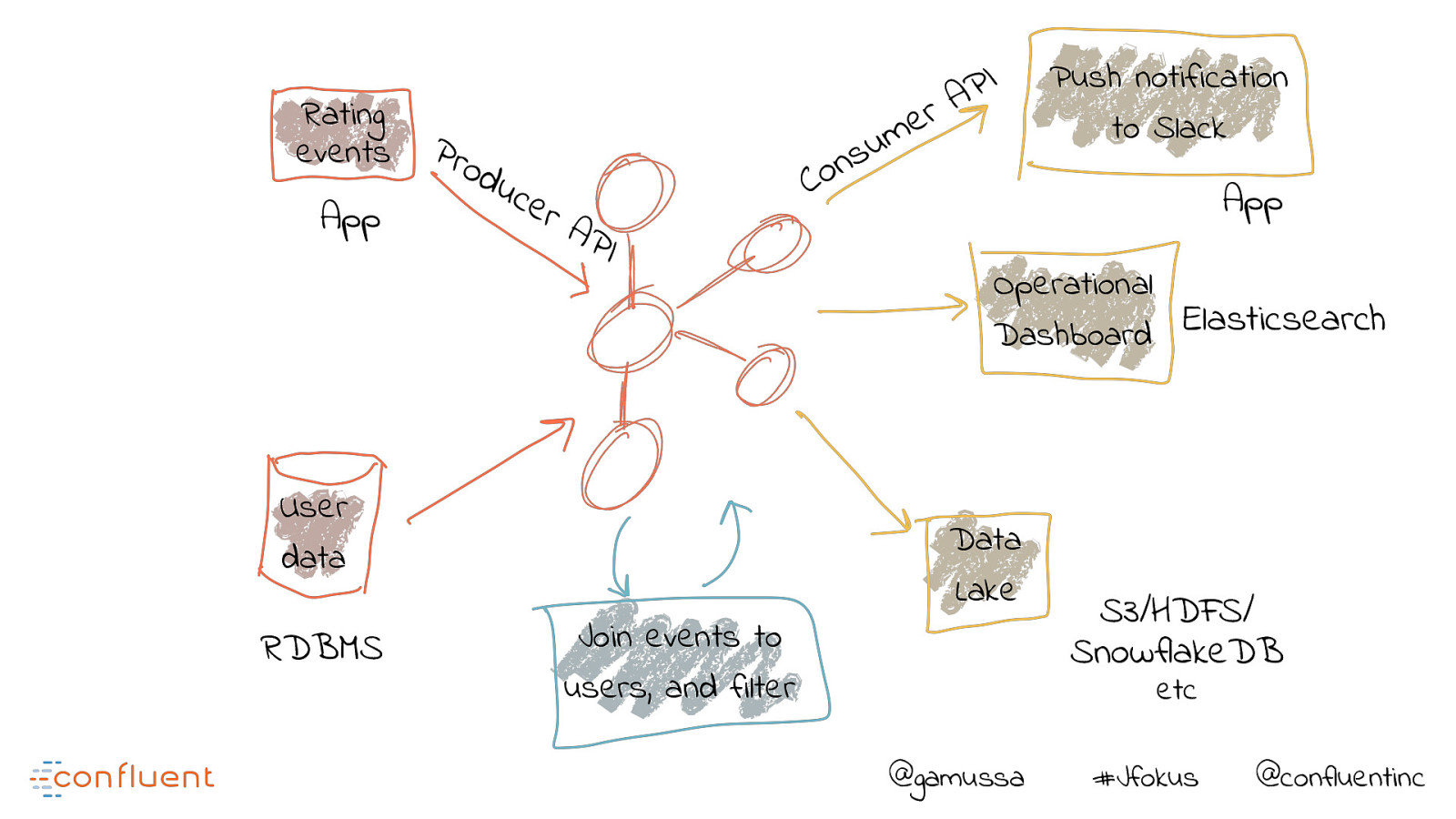

Rating events App Pro d uc e rA PI User data RDBMS Join events to users, and filter I P A r e m u s n o C Push notification to Slack App Operational Elasticsearch Dashboard Data Lake S3/HDFS/ SnowflakeDB etc @gamussa #Jfokus @confluentinc

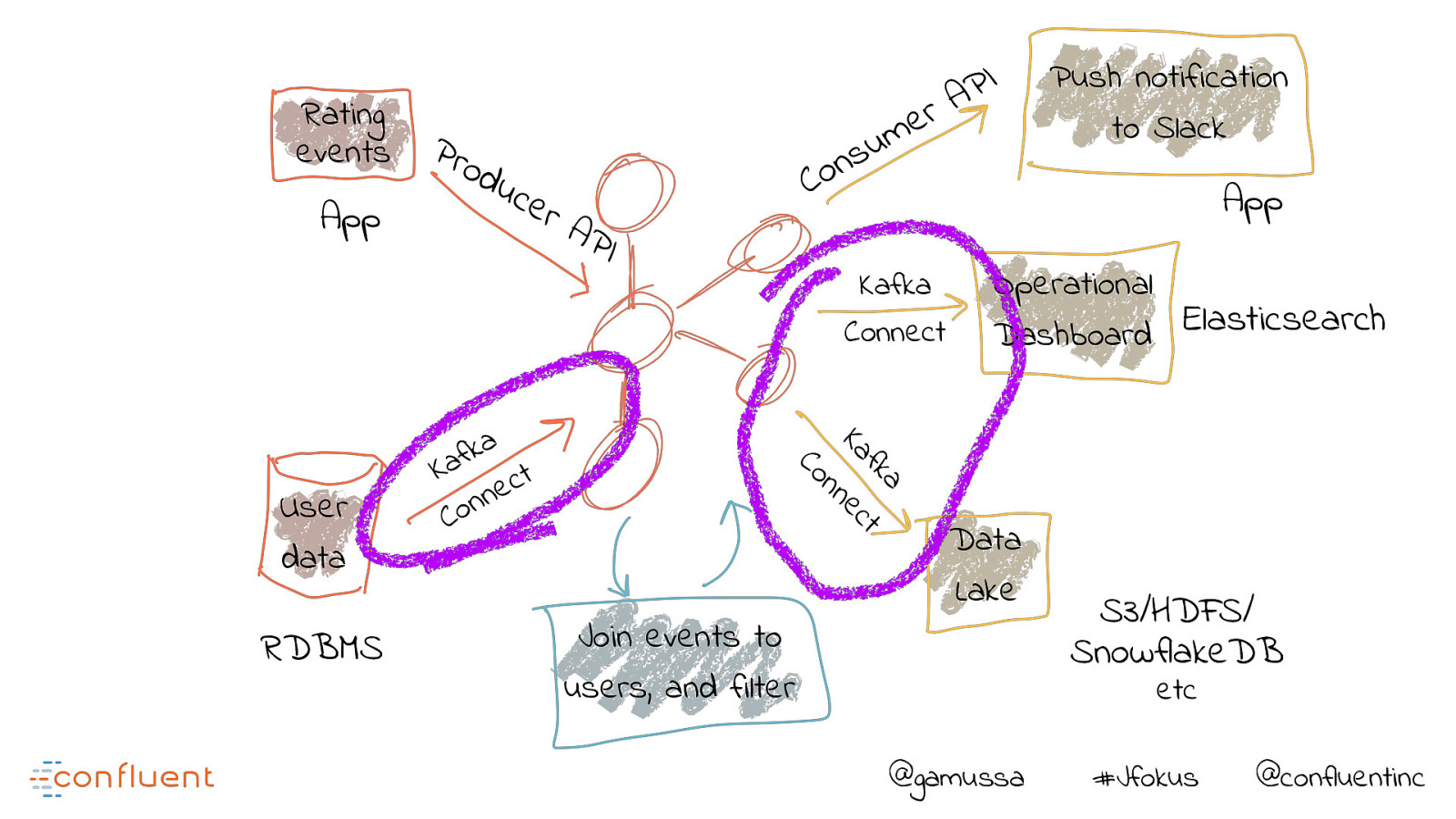

Rating events App uc e rA PI a k f a K t c e n n o C App Kafka Connect a fk t Ka ec n RDBMS u s n o C Push notification to Slack Operational Elasticsearch Dashboard n Co User data Pro d I P A r e m Join events to users, and filter Data Lake S3/HDFS/ SnowflakeDB etc @gamussa #Jfokus @confluentinc

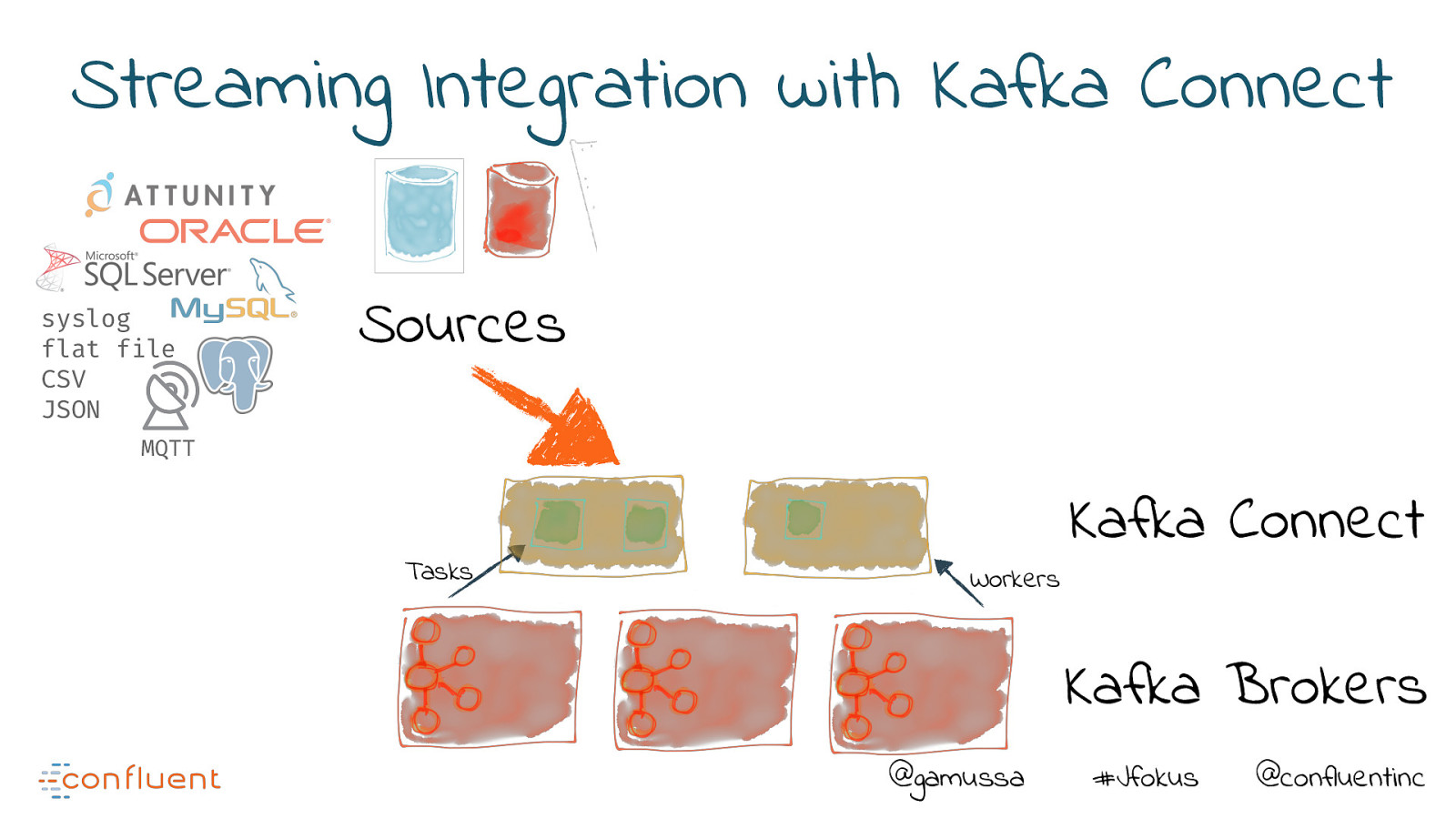

Streaming Integration with Kafka Connect syslog flat file CSV JSON Sources MQTT Tasks Workers Kafka Connect Kafka Brokers @gamussa #Jfokus @confluentinc

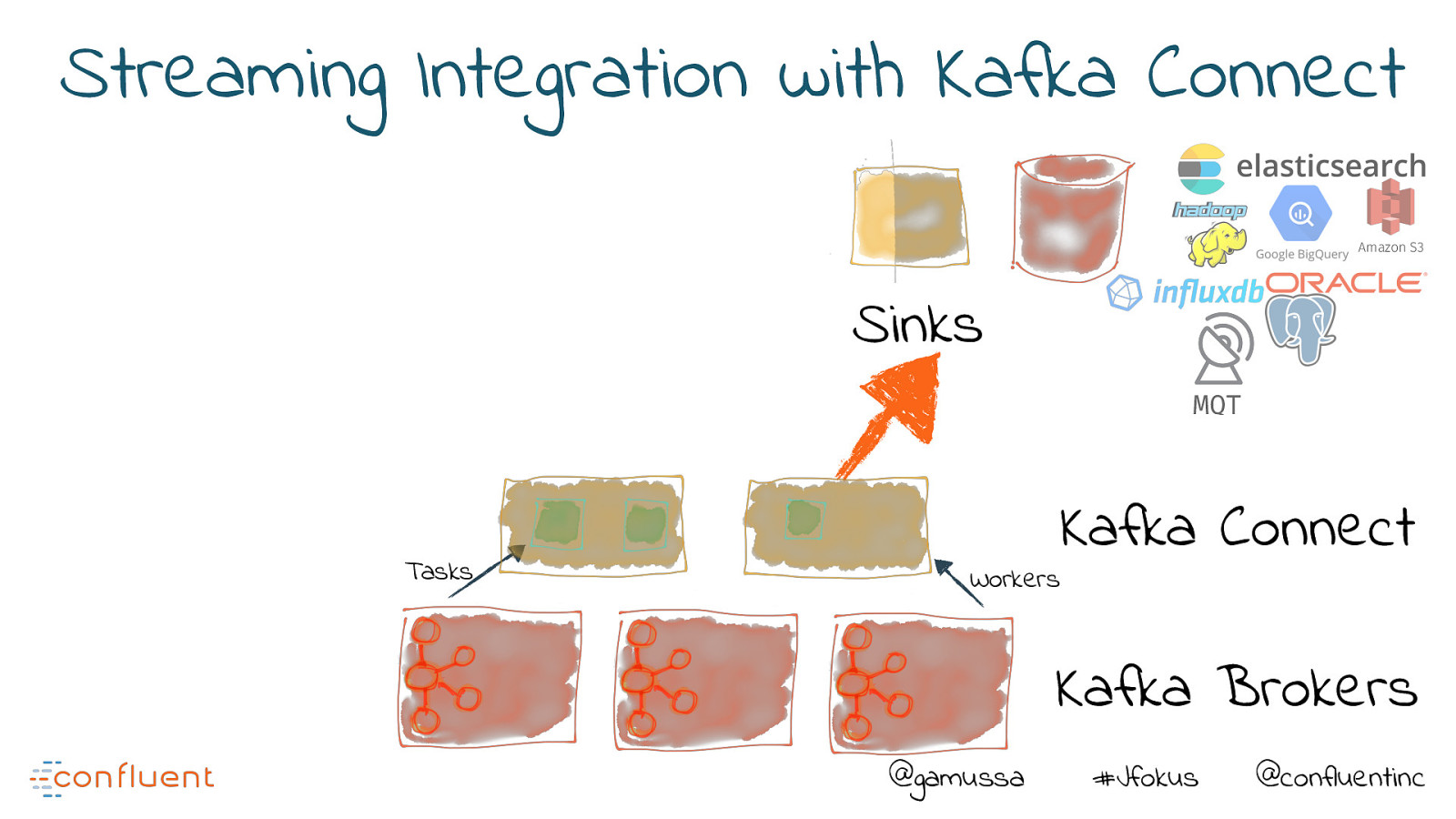

Streaming Integration with Kafka Connect Amazon S3 Sinks MQT Tasks Kafka Connect Workers Kafka Brokers @gamussa #Jfokus @confluentinc

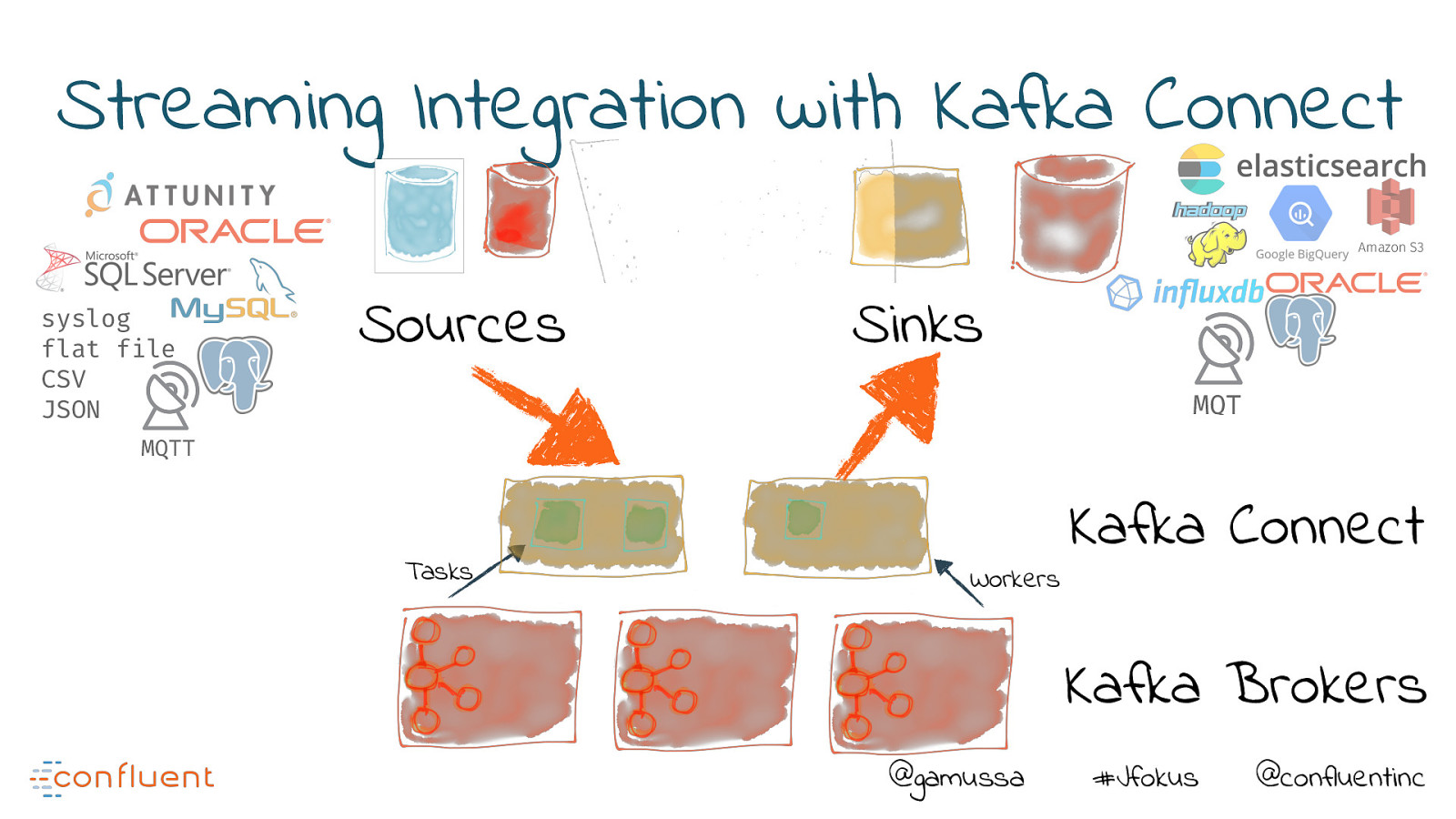

Streaming Integration with Kafka Connect Amazon S3 syslog flat file CSV JSON Sources Sinks MQT MQTT Tasks Workers Kafka Connect Kafka Brokers @gamussa #Jfokus @confluentinc

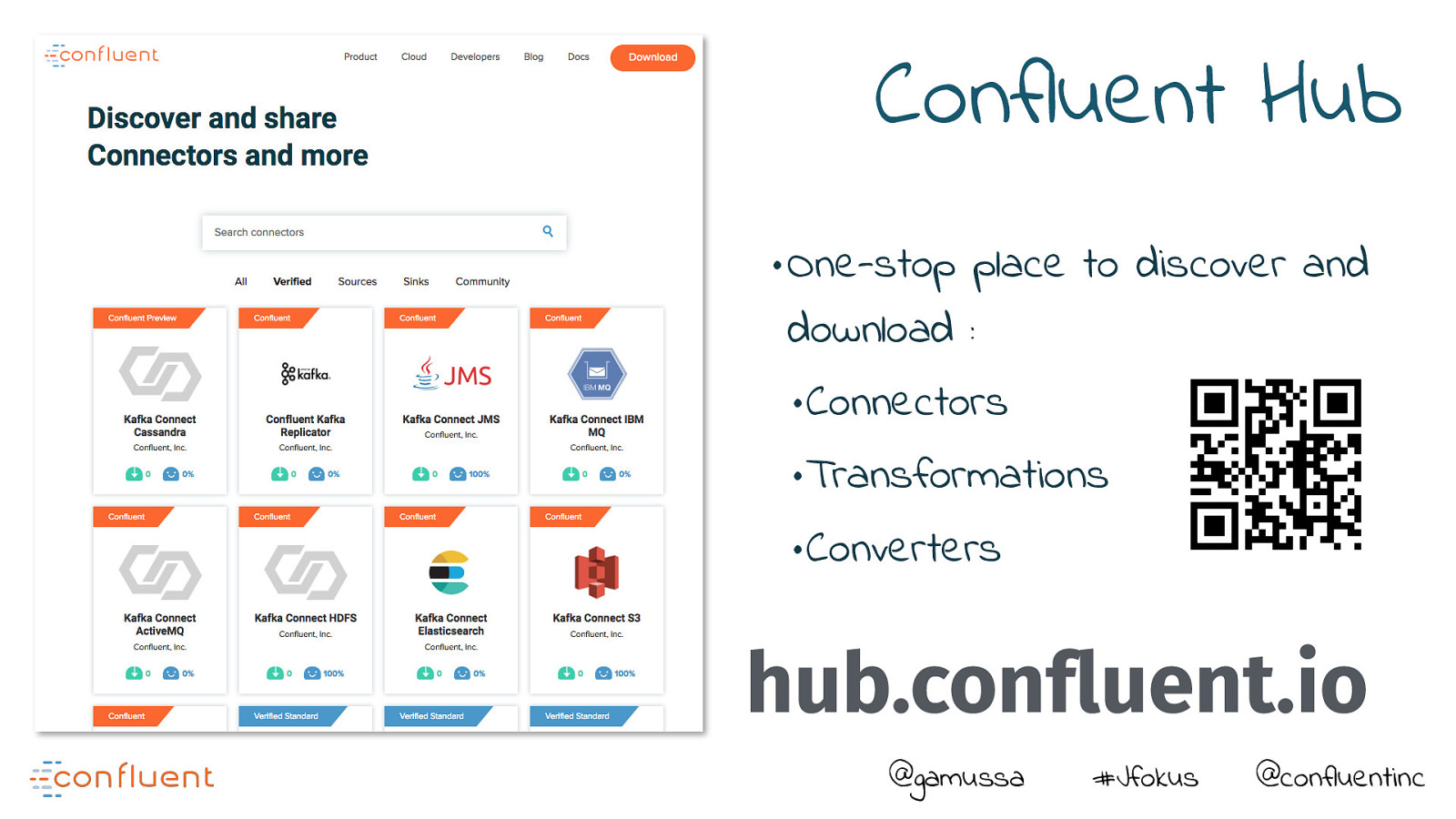

Confluent Hub •One-stop place to discover and download : •Connectors •Transformations •Converters hub.confluent.io @gamussa #Jfokus @confluentinc

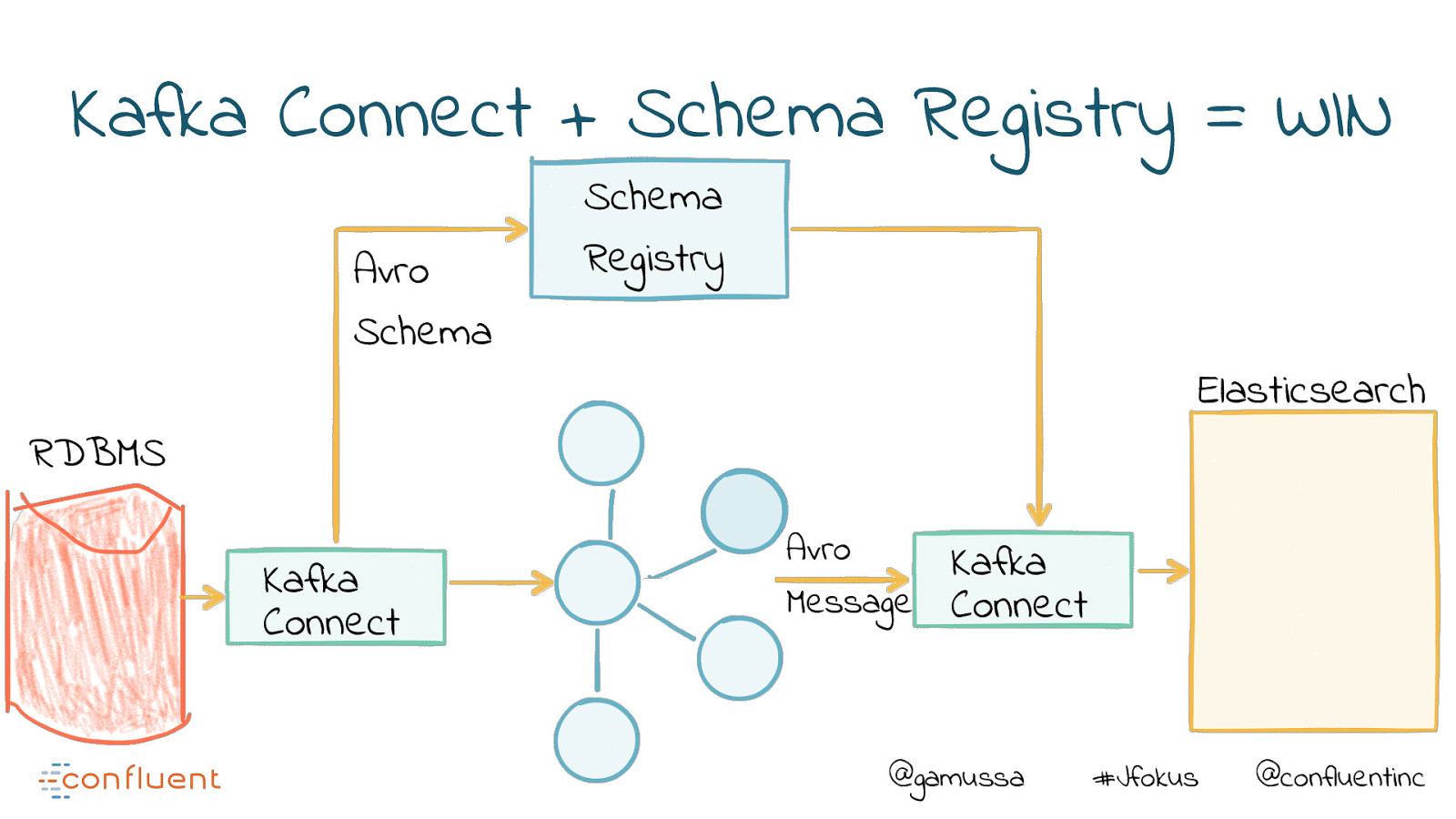

Kafka Connect + Schema Registry = WIN Avro Schema Schema Registry Elasticsearch RDBMS Kafka Connect Avro Message Kafka Connect @gamussa #Jfokus @confluentinc

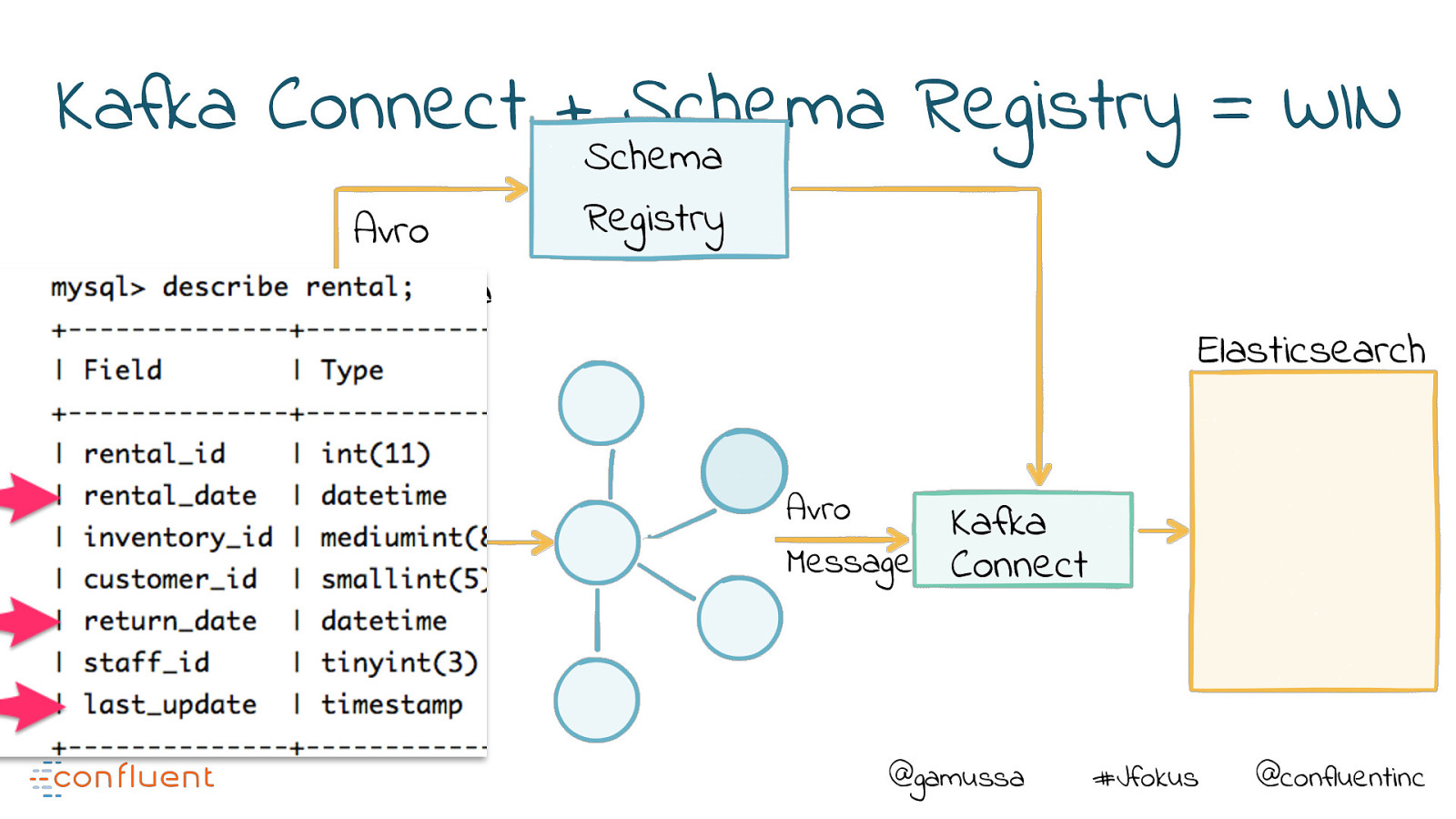

Kafka Connect +Schema Schema Registry = WIN Avro Schema Registry Elasticsearch RDBMS Kafka Connect Avro Message Kafka Connect @gamussa #Jfokus @confluentinc

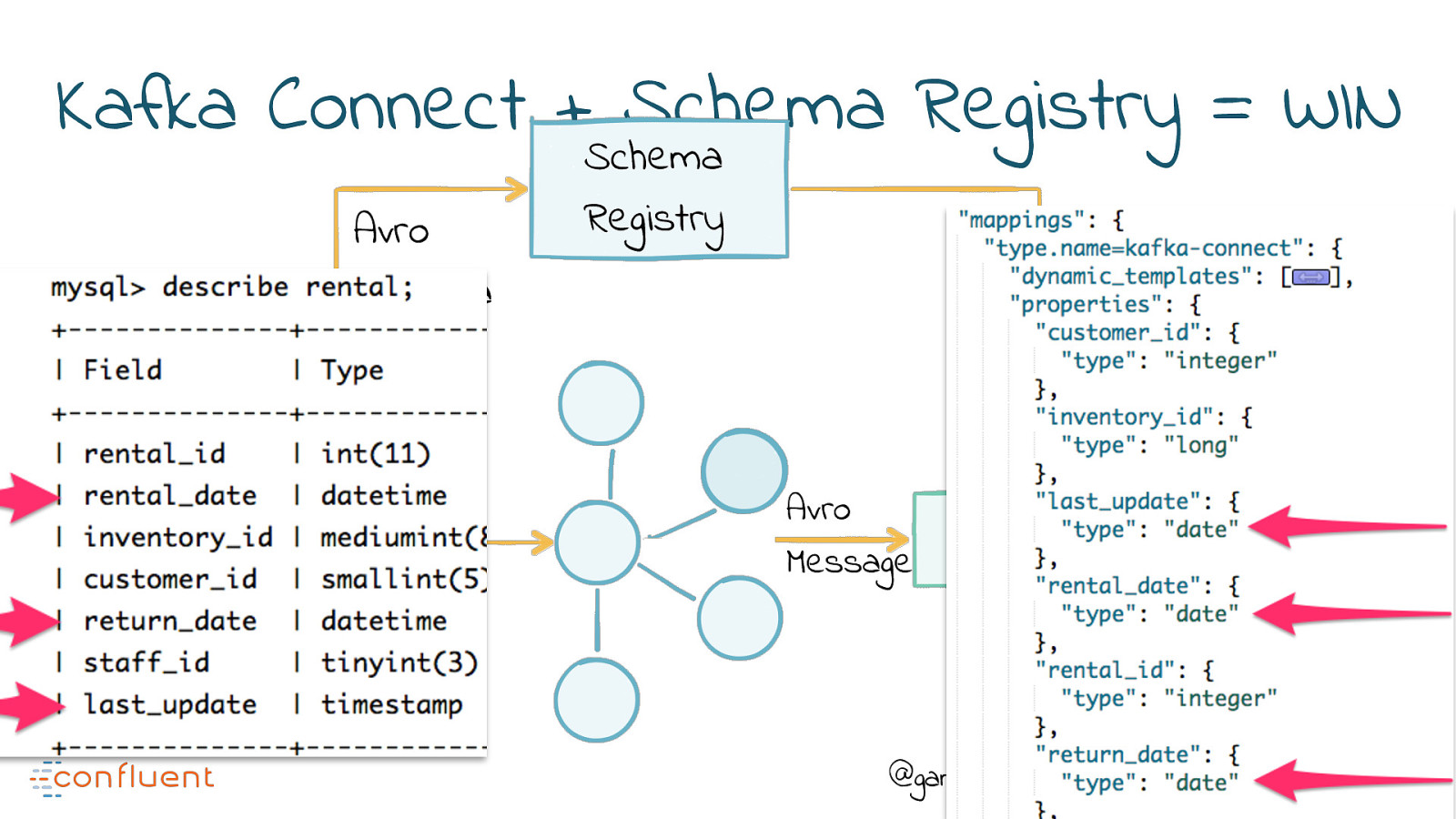

Kafka Connect +Schema Schema Registry = WIN Avro Schema Registry Elasticsearch RDBMS Kafka Connect Avro Message Kafka Connect @gamussa #Jfokus @confluentinc

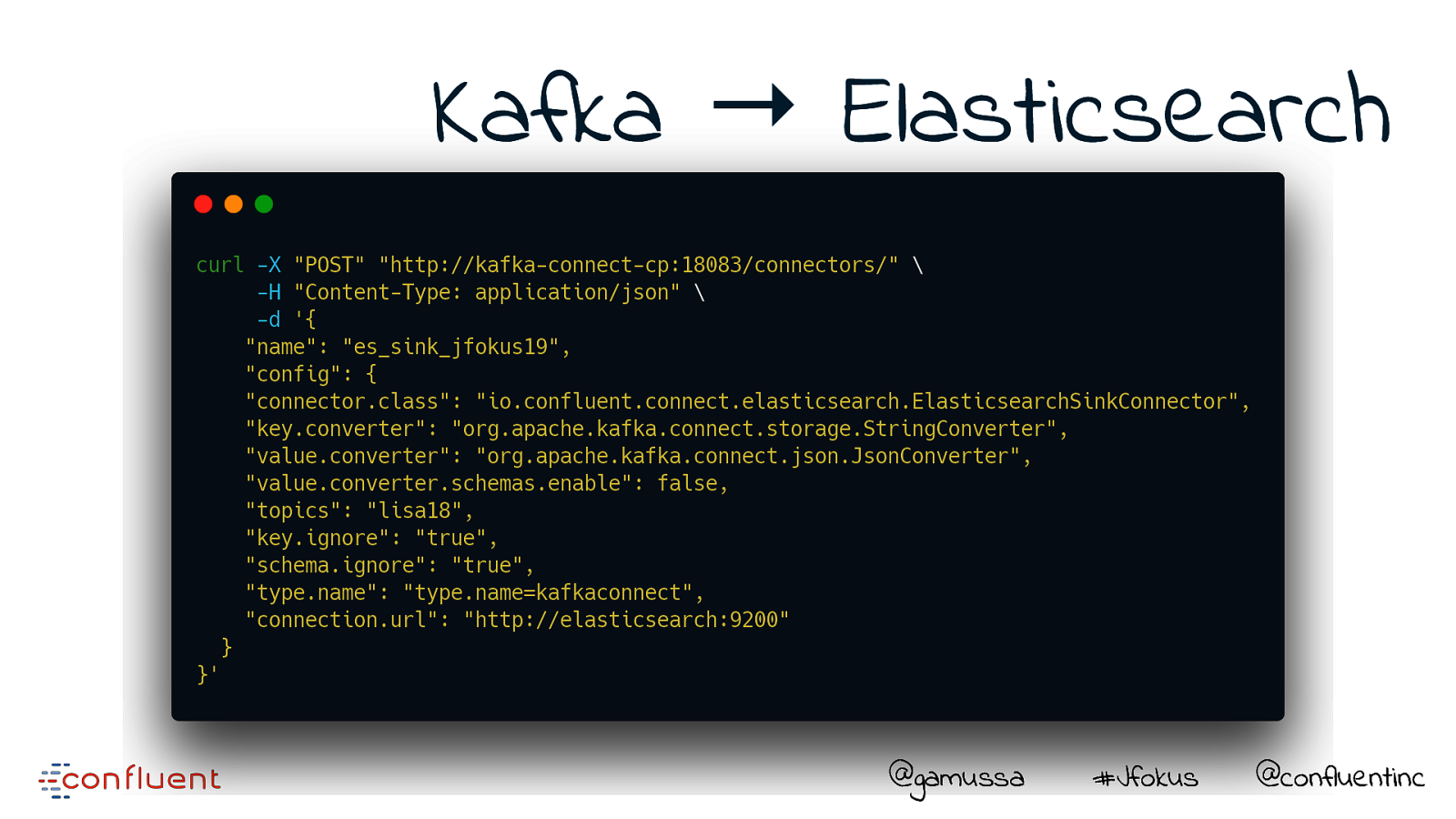

Kafka → Elasticsearch @gamussa #Jfokus @confluentinc
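As a rough illustration of the Kafka → Elasticsearch step, the sketch below builds the kind of JSON body that Kafka Connect's REST API accepts on `POST /connectors`. The connector name, topic, and Elasticsearch URL are hypothetical placeholders, not values from the talk, and the exact set of required settings depends on the connector version (for example, `type.name` is only needed by older releases).

```python
import json


def elasticsearch_sink_config(name, topic, es_url):
    """Build a request body for Kafka Connect's POST /connectors endpoint.

    All argument values are supplied by the caller; nothing here is taken
    from the presentation itself.
    """
    return {
        "name": name,
        "config": {
            # Confluent's Elasticsearch sink connector class.
            "connector.class": "io.confluent.connect.elasticsearch.ElasticsearchSinkConnector",
            "topics": topic,
            "connection.url": es_url,
            # Assumption: needed by older connector versions only.
            "type.name": "_doc",
            # Let the connector derive document IDs from topic/partition/offset.
            "key.ignore": "true",
        },
    }


# Hypothetical values for illustration.
payload = elasticsearch_sink_config("es-sink-ratings", "ratings", "http://localhost:9200")
print(json.dumps(payload, indent=2))
```

The printed JSON could then be sent to a Connect worker (by default on port 8083) with any HTTP client.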

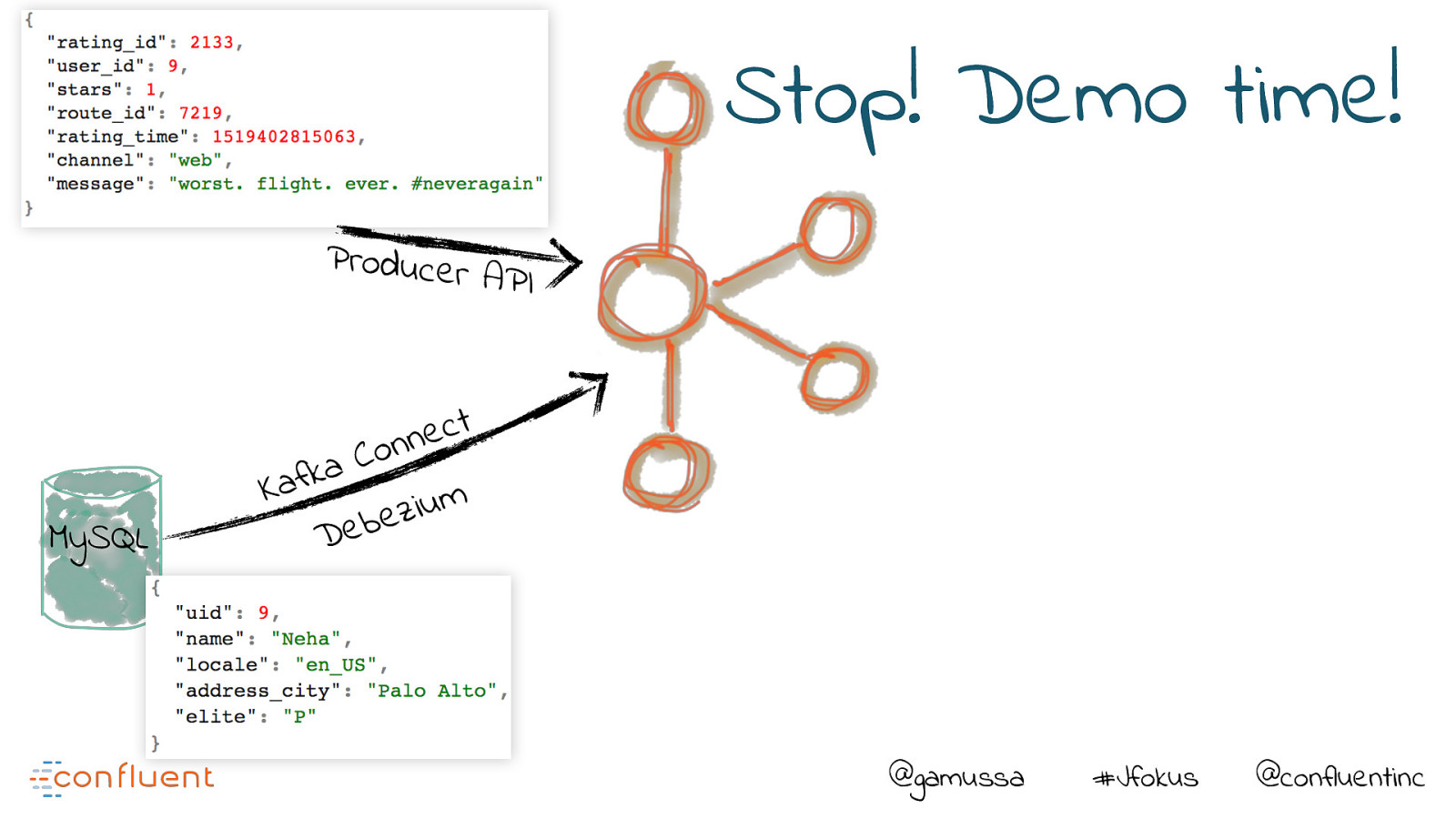

Stop! Demo time! Producer API, MySQL, Kafka Connect, Debezium

Rating events: App, Producer API. User data: RDBMS, Kafka Connect. KSQL: Join events to users, and filter. Push notification to Slack: Consumer API, App. Operational Dashboard: Elasticsearch, Kafka Connect. Data Lake: S3/HDFS/SnowflakeDB etc., Kafka Connect.

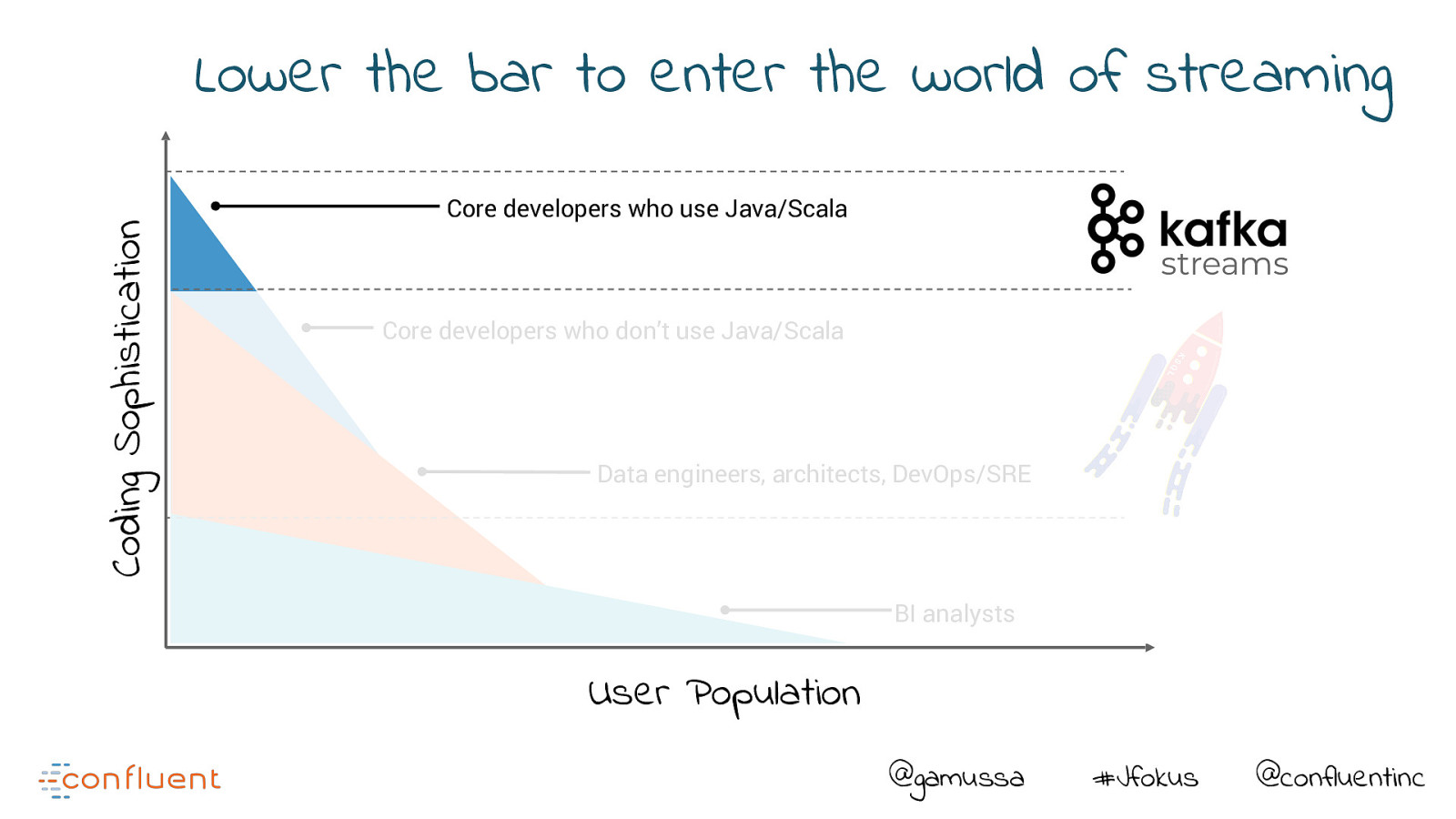

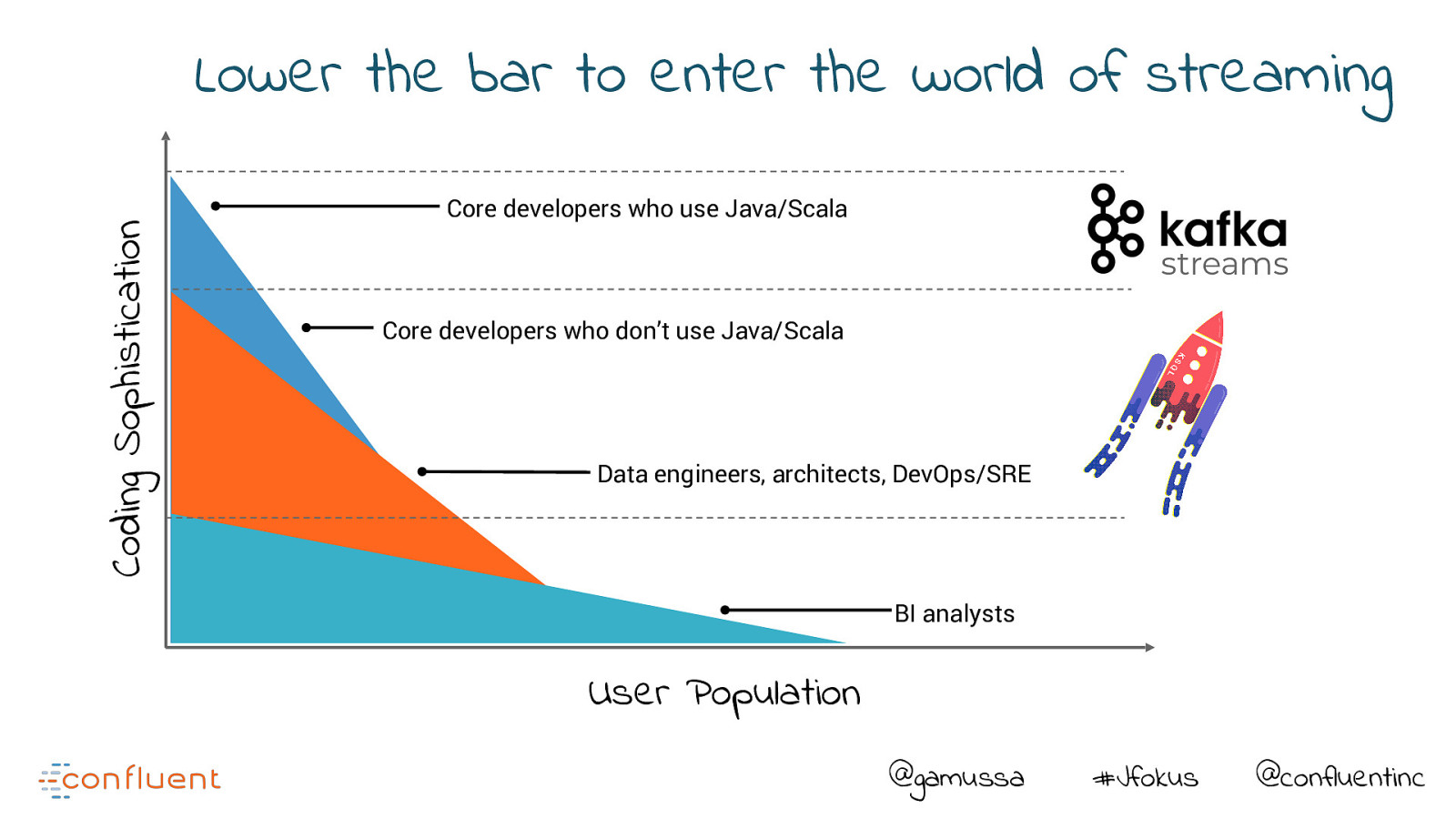

Lower the bar to enter the world of streaming. Axes: Coding Sophistication, User Population. Groups: Core developers who use Java/Scala streams; Core developers who don’t use Java/Scala; Data engineers, architects, DevOps/SRE; BI analysts

KSQL #FTW @gamussa #Jfokus @confluentinc

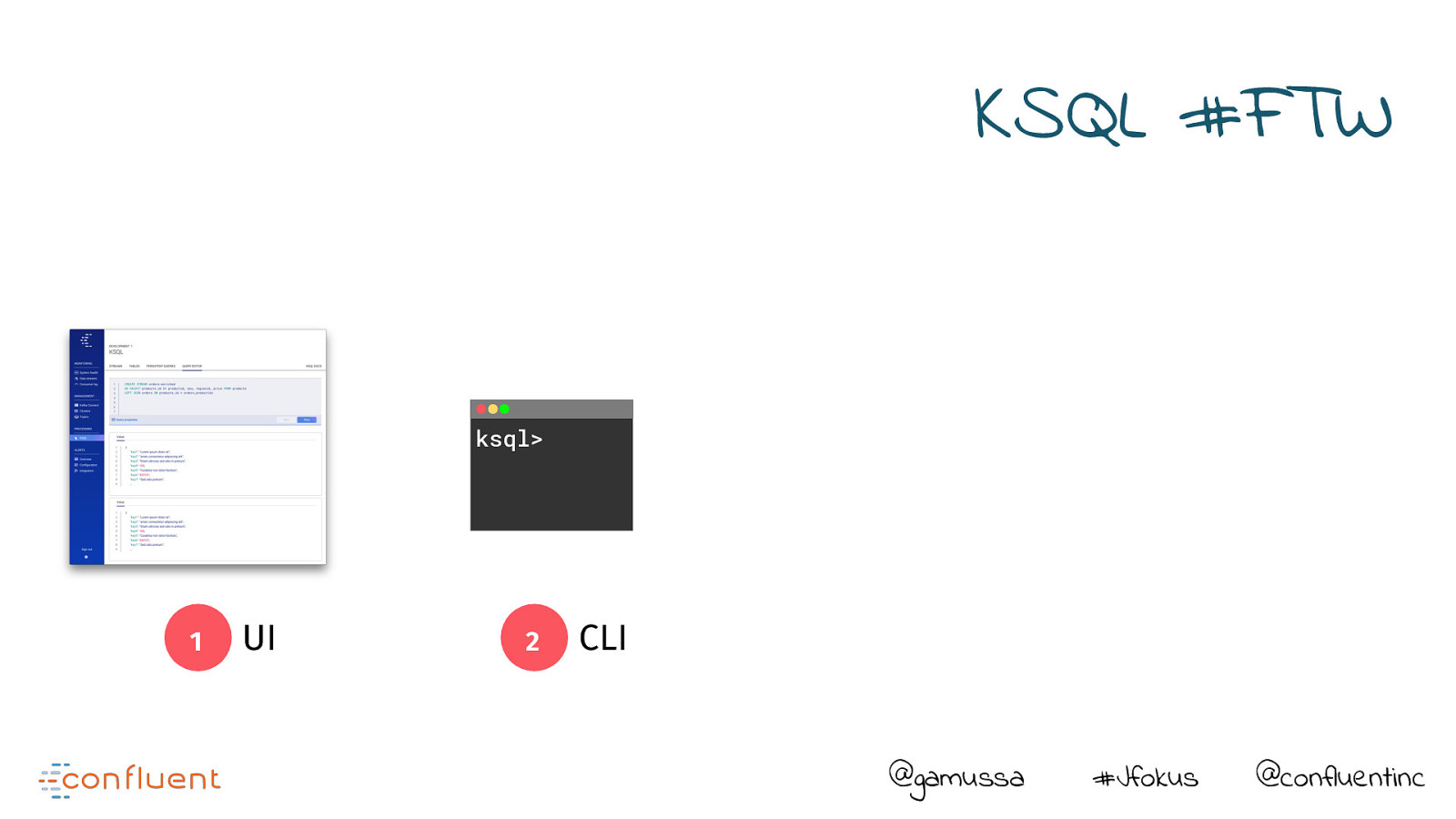

KSQL #FTW 1 UI @gamussa #Jfokus @confluentinc

KSQL #FTW ksql> 1 UI 2 CLI @gamussa #Jfokus @confluentinc

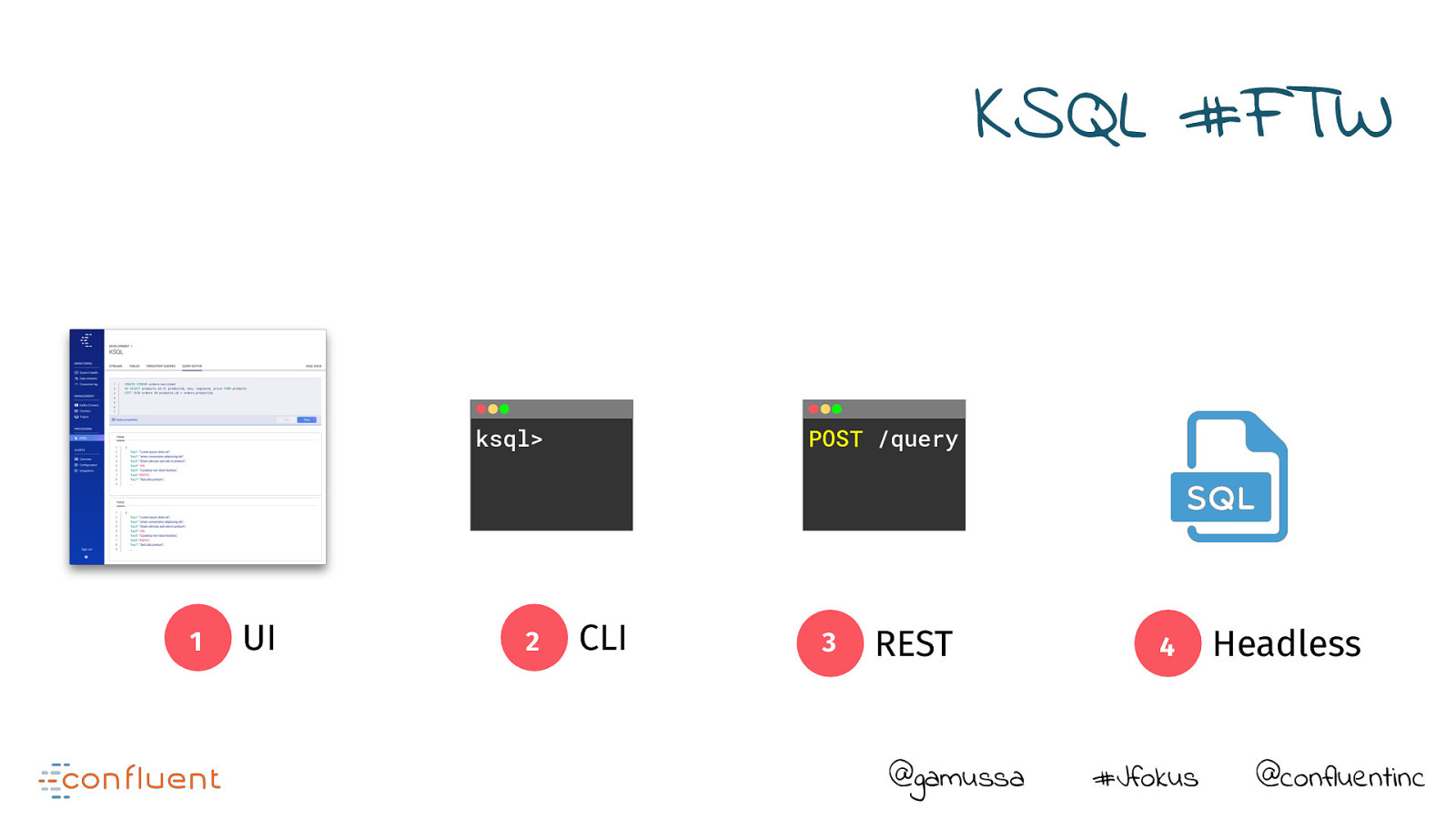

KSQL #FTW ksql> 1 UI 2 POST /query CLI 3 REST @gamussa #Jfokus @confluentinc

KSQL #FTW ksql> 1 UI 2 POST /query CLI 3 REST @gamussa 4 #Jfokus Headless @confluentinc
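To make the REST option concrete, here is a minimal sketch that builds (but does not send) a `POST /query` request for a KSQL server using only the Python standard library. The server address and the `RATINGS` stream name are assumptions for illustration, and the accepted statement syntax depends on the KSQL version in use.

```python
import json
import urllib.request


def build_query_request(server_url, ksql_statement):
    """Build a POST /query request for a KSQL server without sending it."""
    body = json.dumps({
        "ksql": ksql_statement,
        # Optional per-query overrides; left empty in this sketch.
        "streamsProperties": {},
    }).encode("utf-8")
    return urllib.request.Request(
        url=server_url.rstrip("/") + "/query",
        data=body,
        headers={"Content-Type": "application/vnd.ksql.v1+json; charset=utf-8"},
        method="POST",
    )


# Hypothetical server address and stream name.
req = build_query_request("http://localhost:8088", "SELECT * FROM RATINGS;")
print(req.get_method(), req.full_url)
```

Passing `req` to `urllib.request.urlopen` would send it; the response to a query is streamed, so a real client would read it incrementally.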

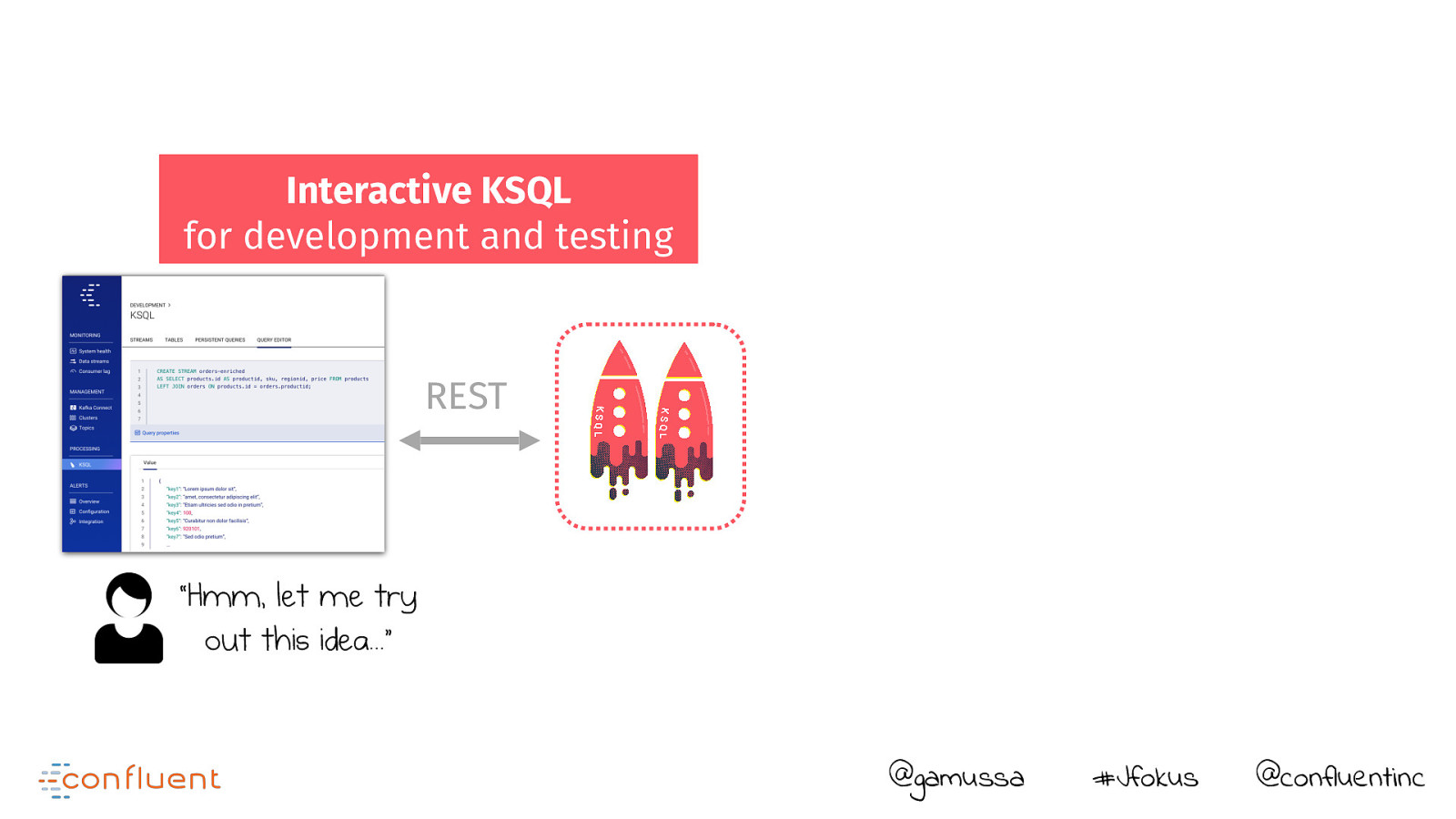

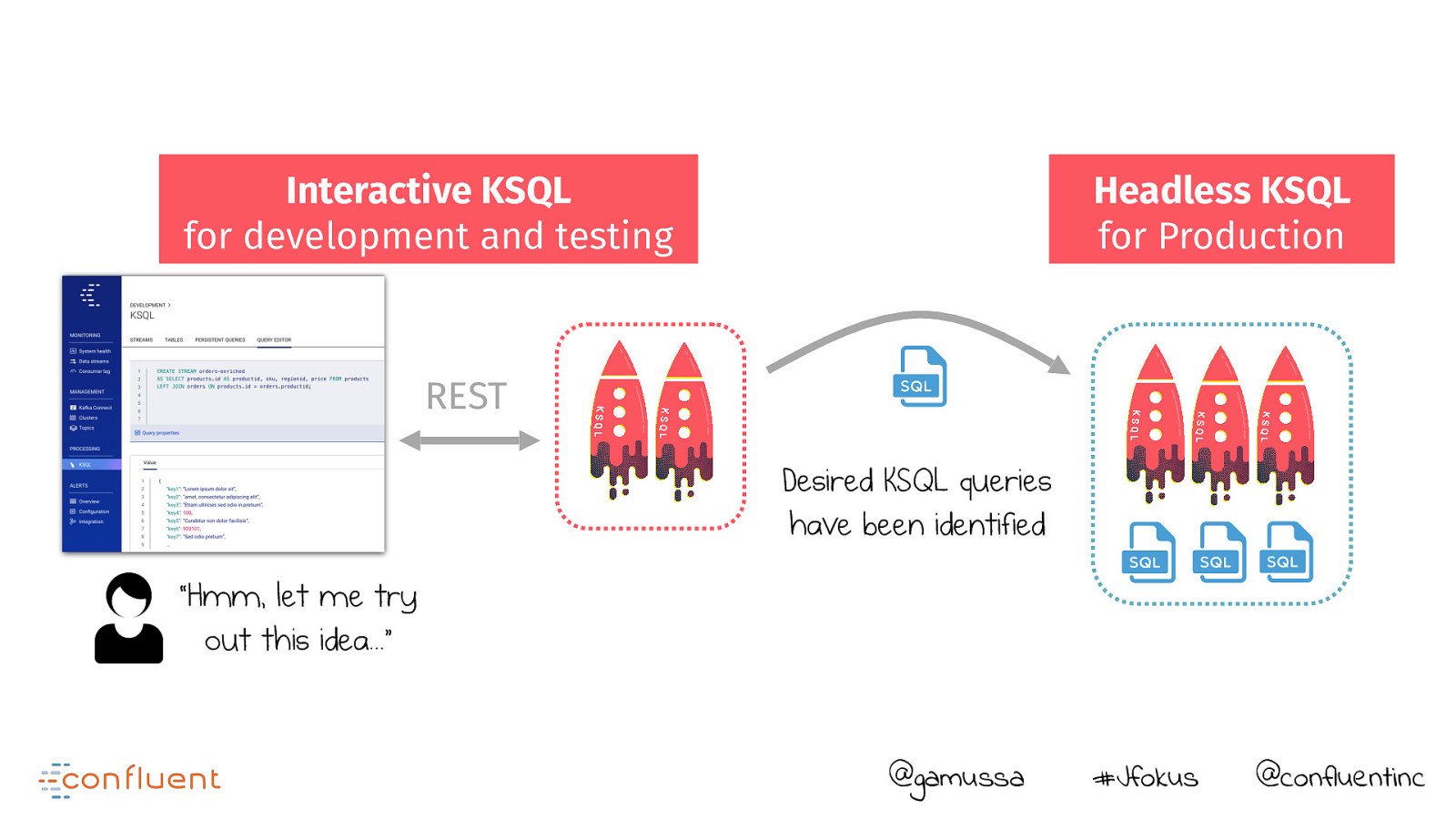

Interactive KSQL for development and testing REST “Hmm, let me try out this idea…” @gamussa #Jfokus @confluentinc

Interactive KSQL for development and testing Headless KSQL for Production REST Desired KSQL queries have been identified “Hmm, let me try out this idea…” @gamussa #Jfokus @confluentinc

Interaction with Kafka Kafka (data) @gamussa #Jfokus @confluentinc

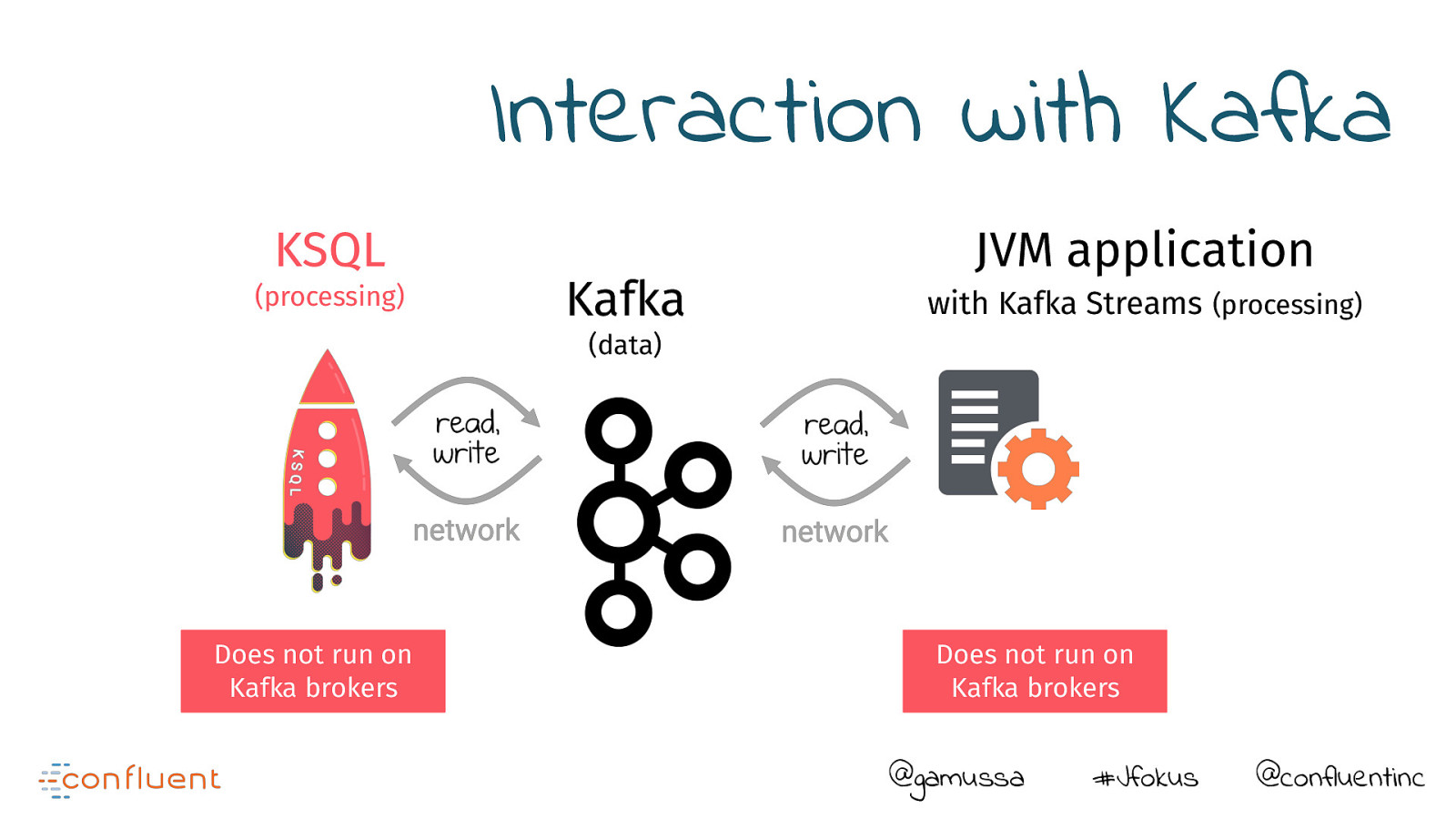

Interaction with Kafka KSQL (processing) Kafka JVM application with Kafka Streams (processing) (data) Does not run on Kafka brokers Does not run on Kafka brokers @gamussa #Jfokus @confluentinc

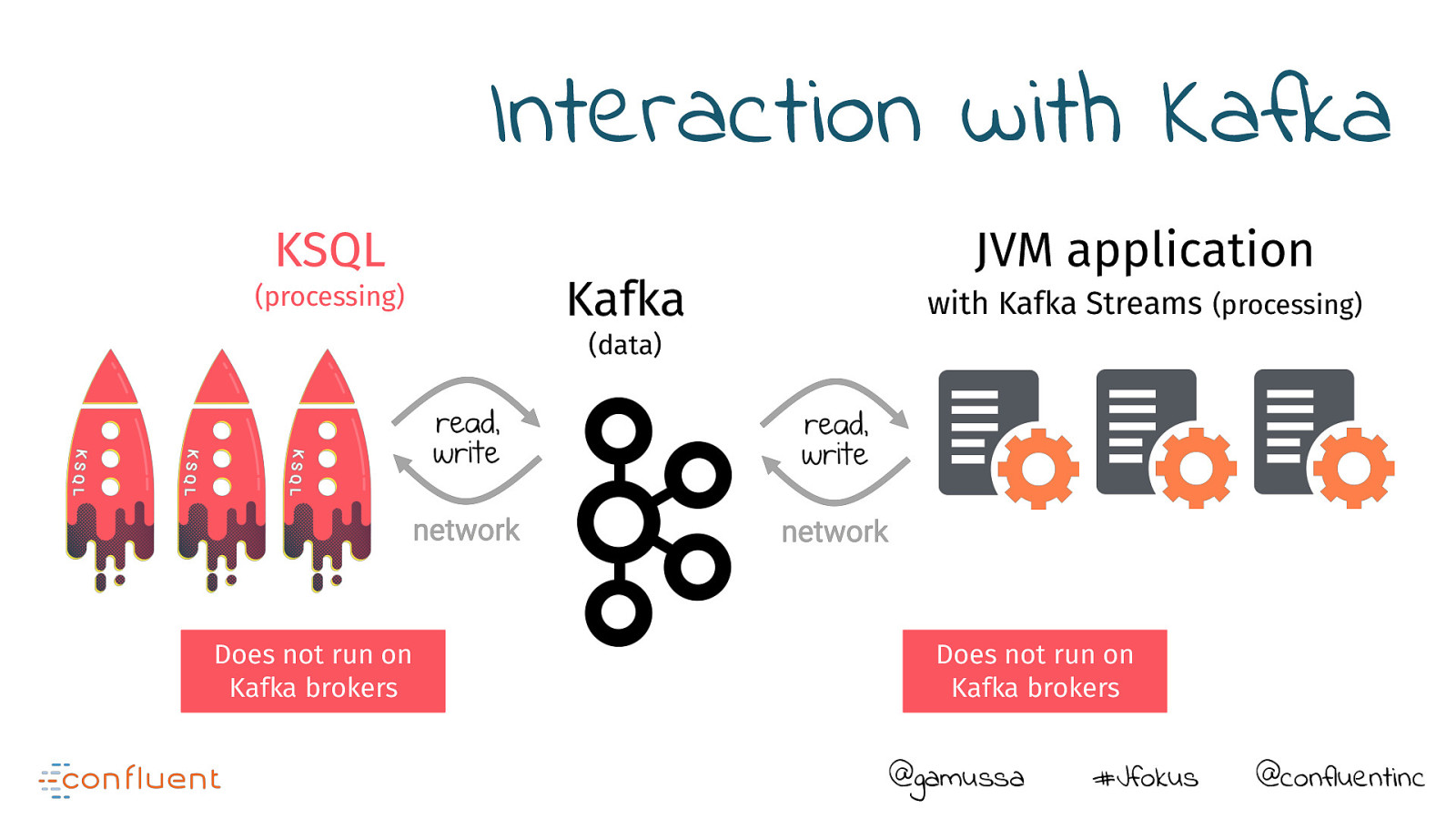

Interaction with Kafka KSQL (processing) Kafka JVM application with Kafka Streams (processing) (data) Does not run on Kafka brokers Does not run on Kafka brokers @gamussa #Jfokus @confluentinc

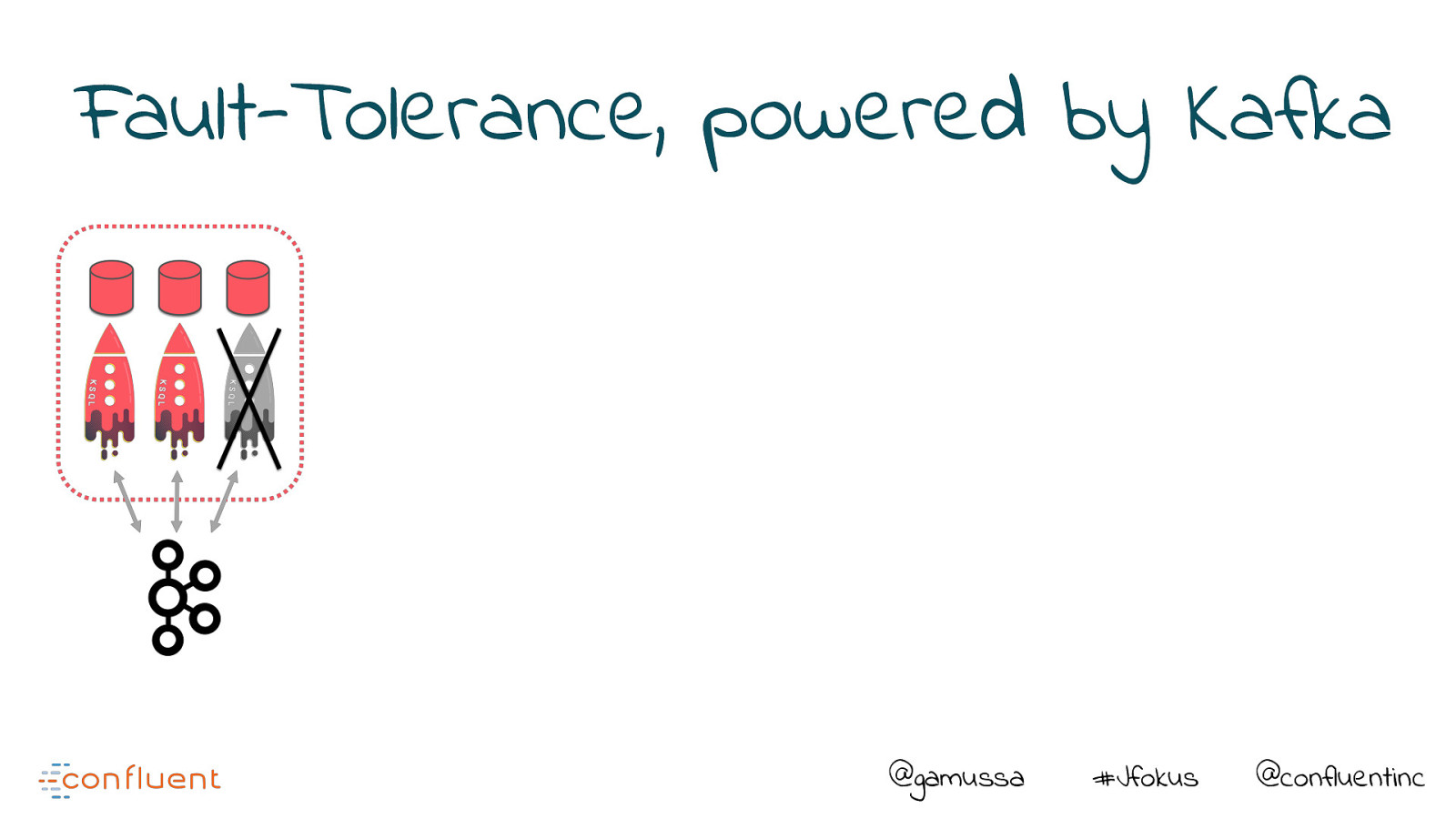

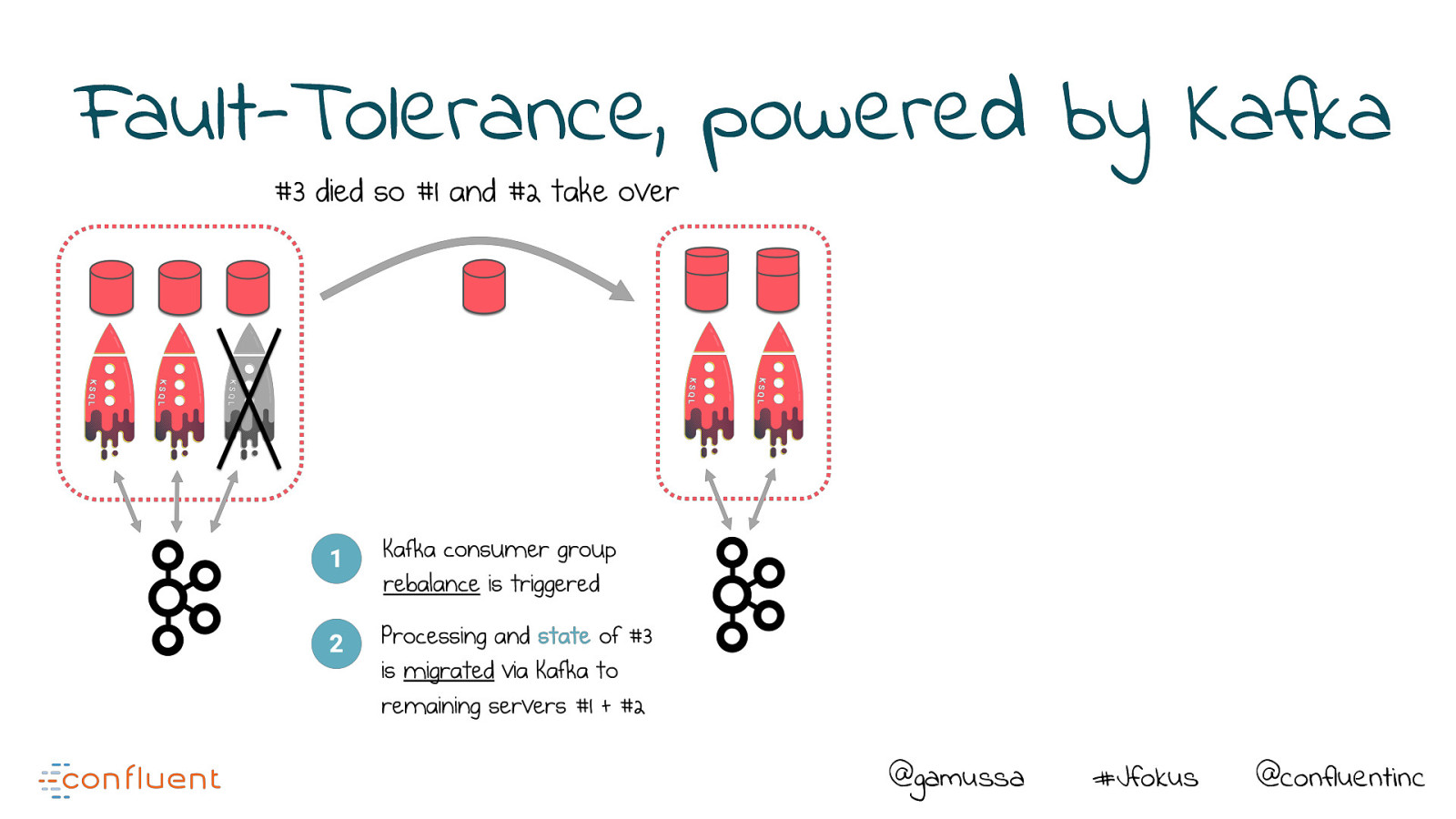

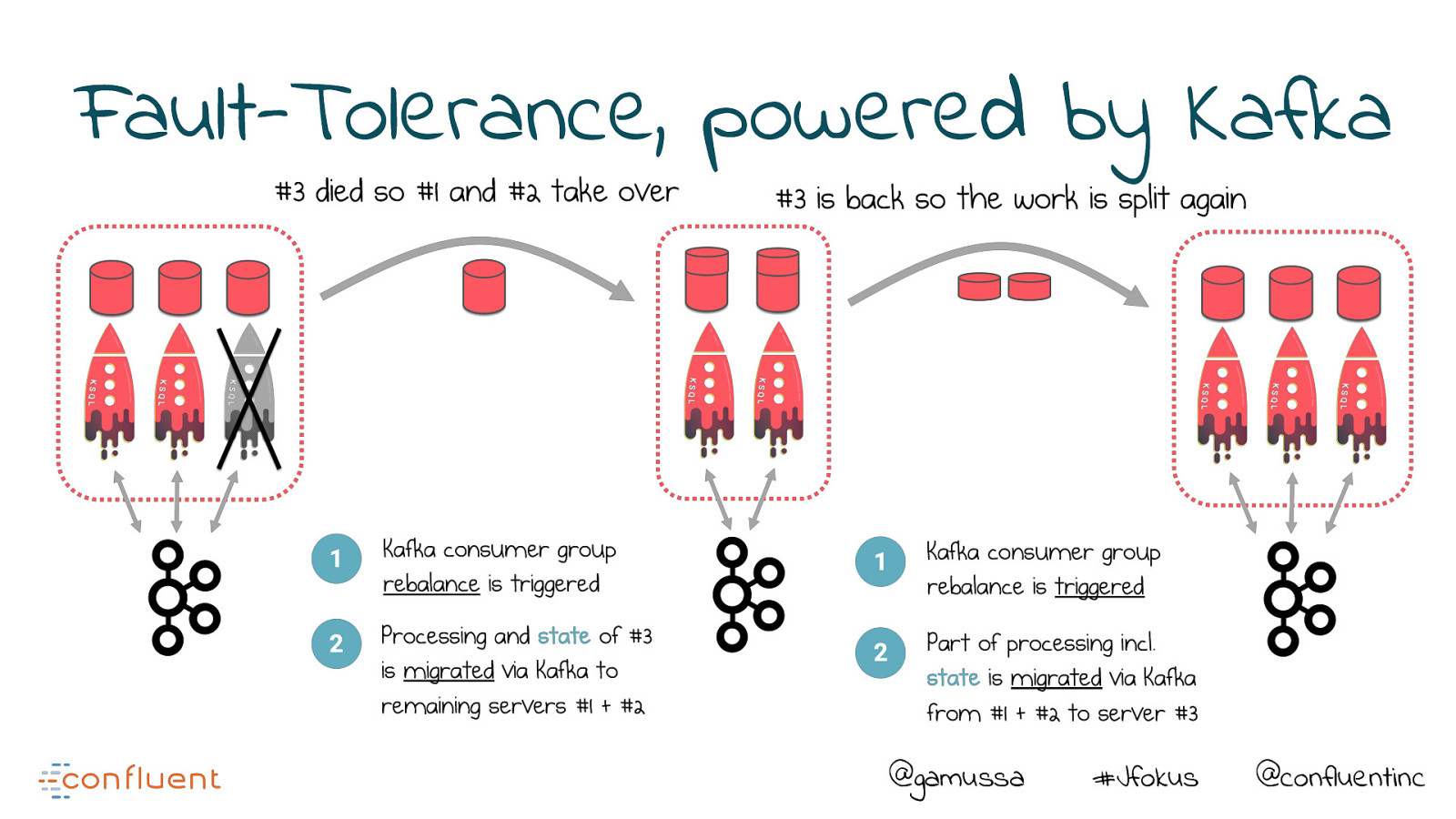

Fault-Tolerance, powered by Kafka

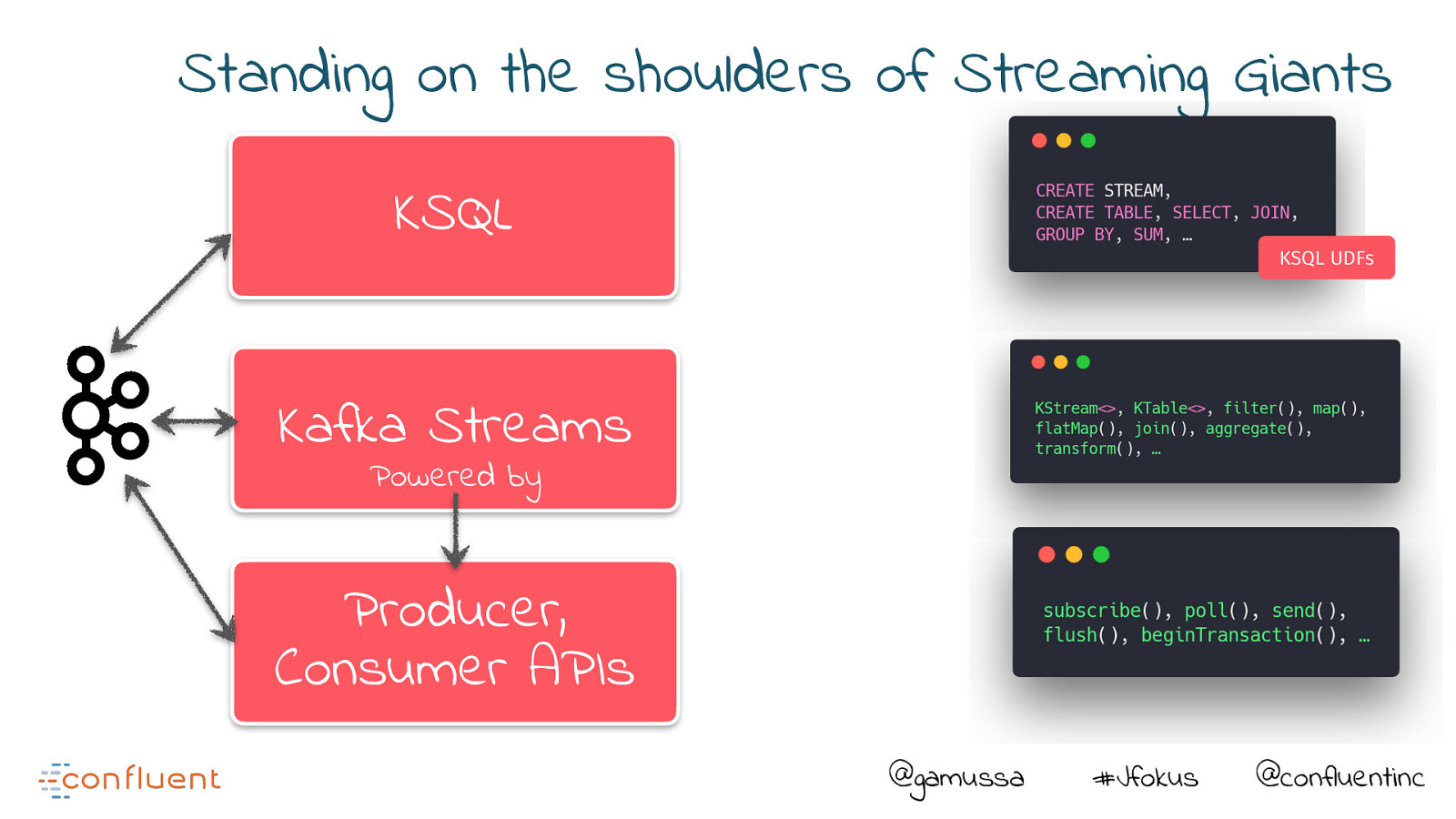

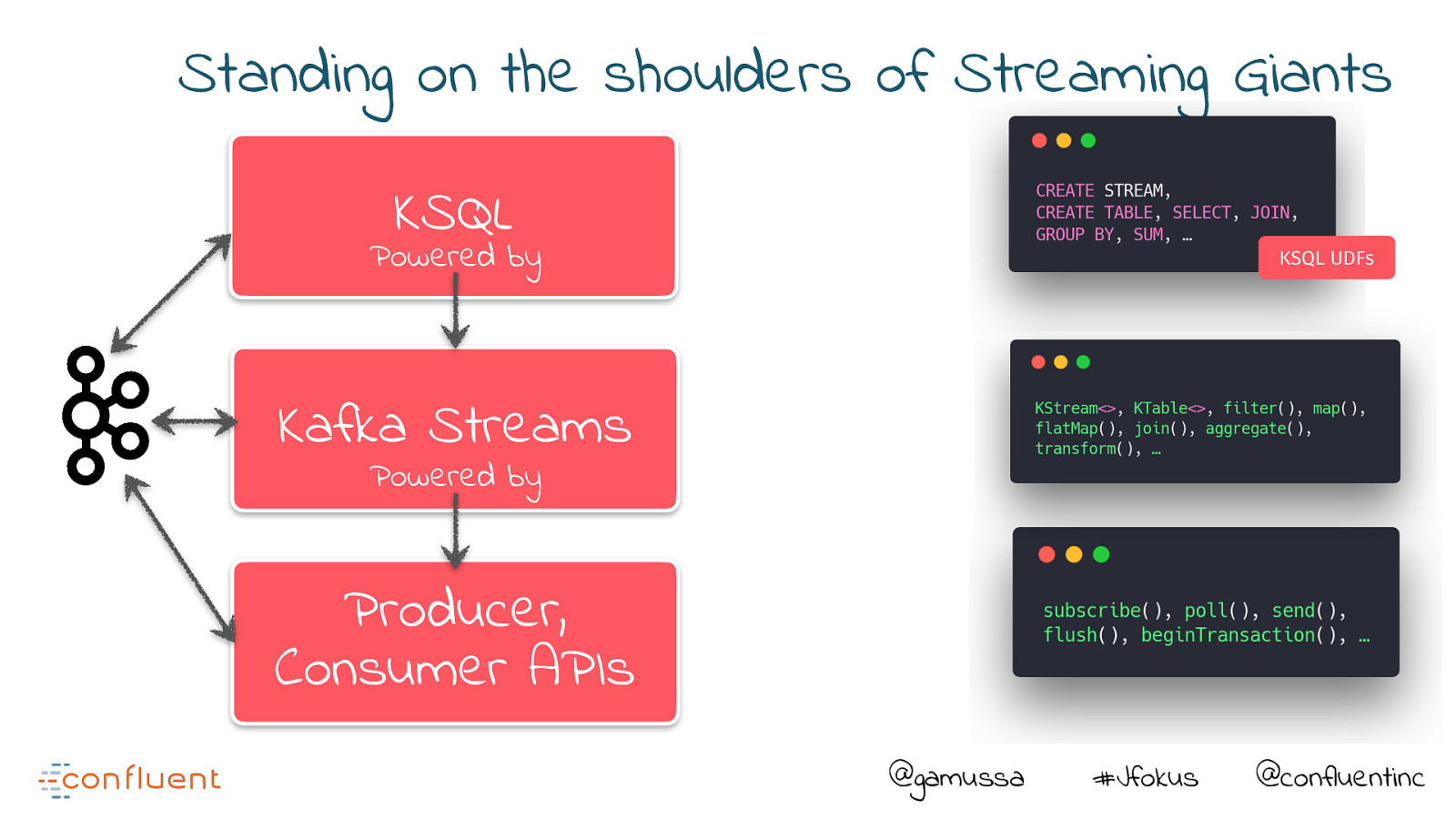

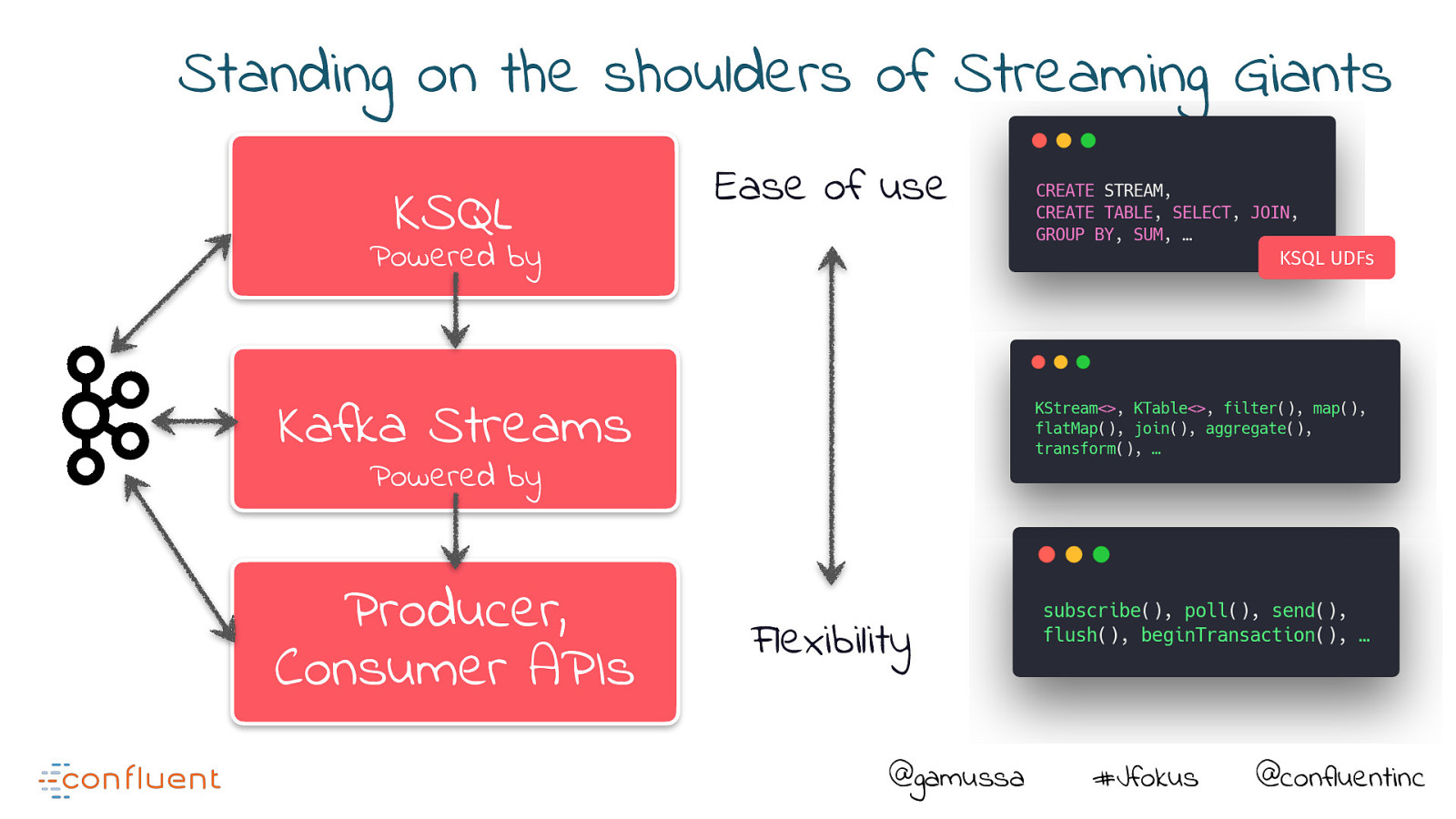

Standing on the shoulders of Streaming Giants @gamussa #Jfokus @confluentinc

Standing on the shoulders of Streaming Giants @gamussa #Jfokus @confluentinc

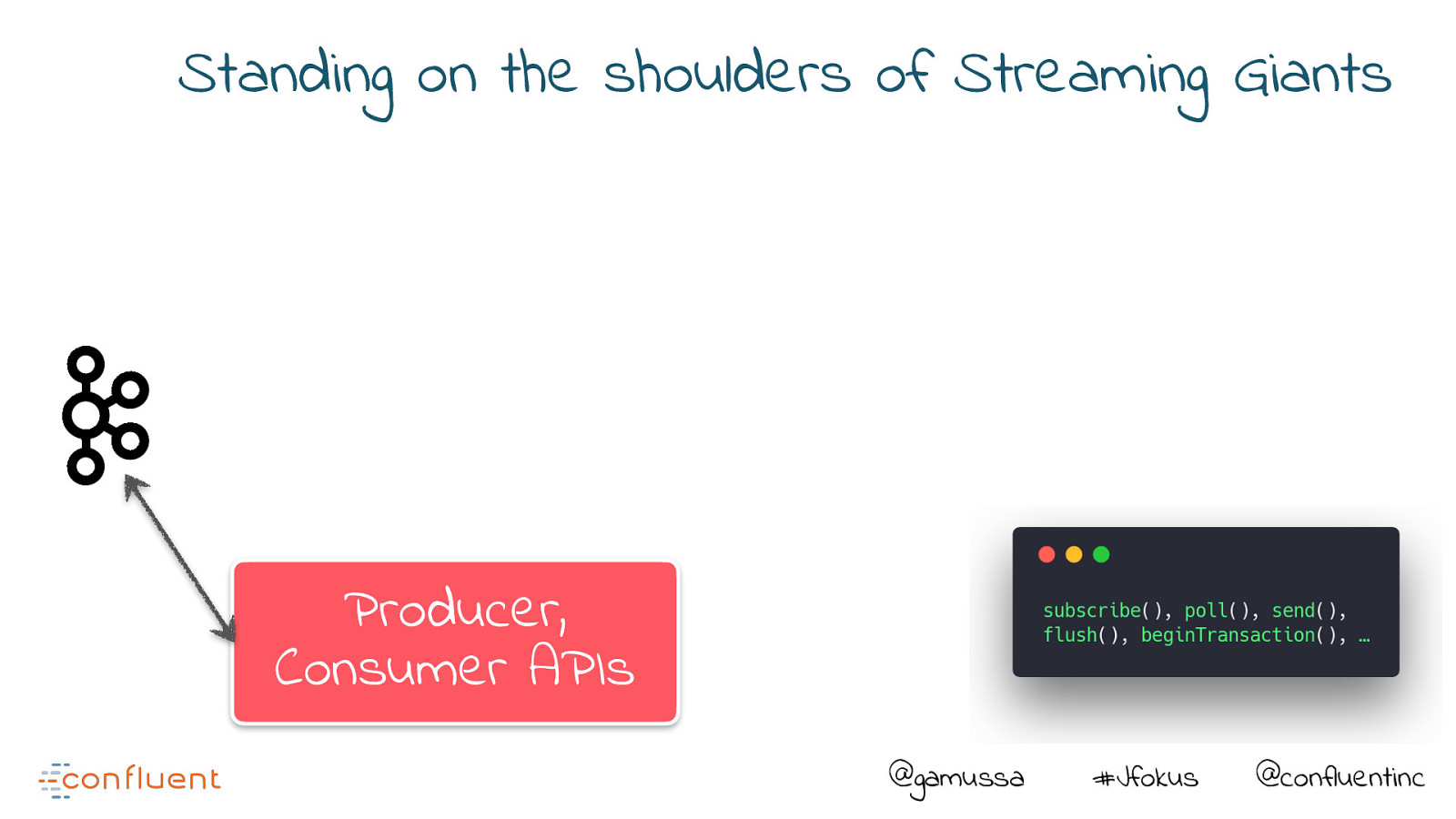

Standing on the shoulders of Streaming Giants Producer, Consumer APIs @gamussa #Jfokus @confluentinc

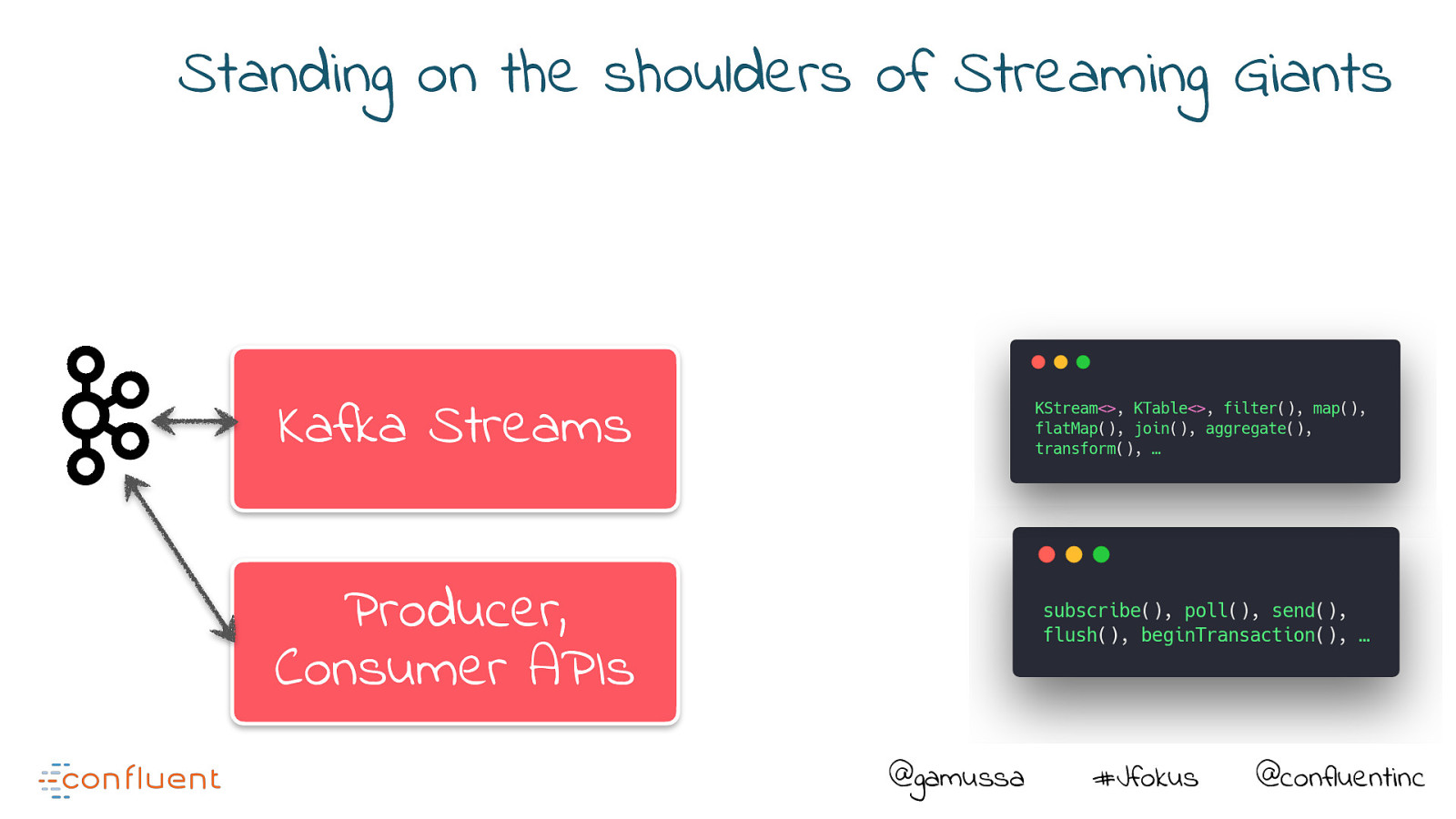

Standing on the shoulders of Streaming Giants Kafka Streams Producer, Consumer APIs @gamussa #Jfokus @confluentinc

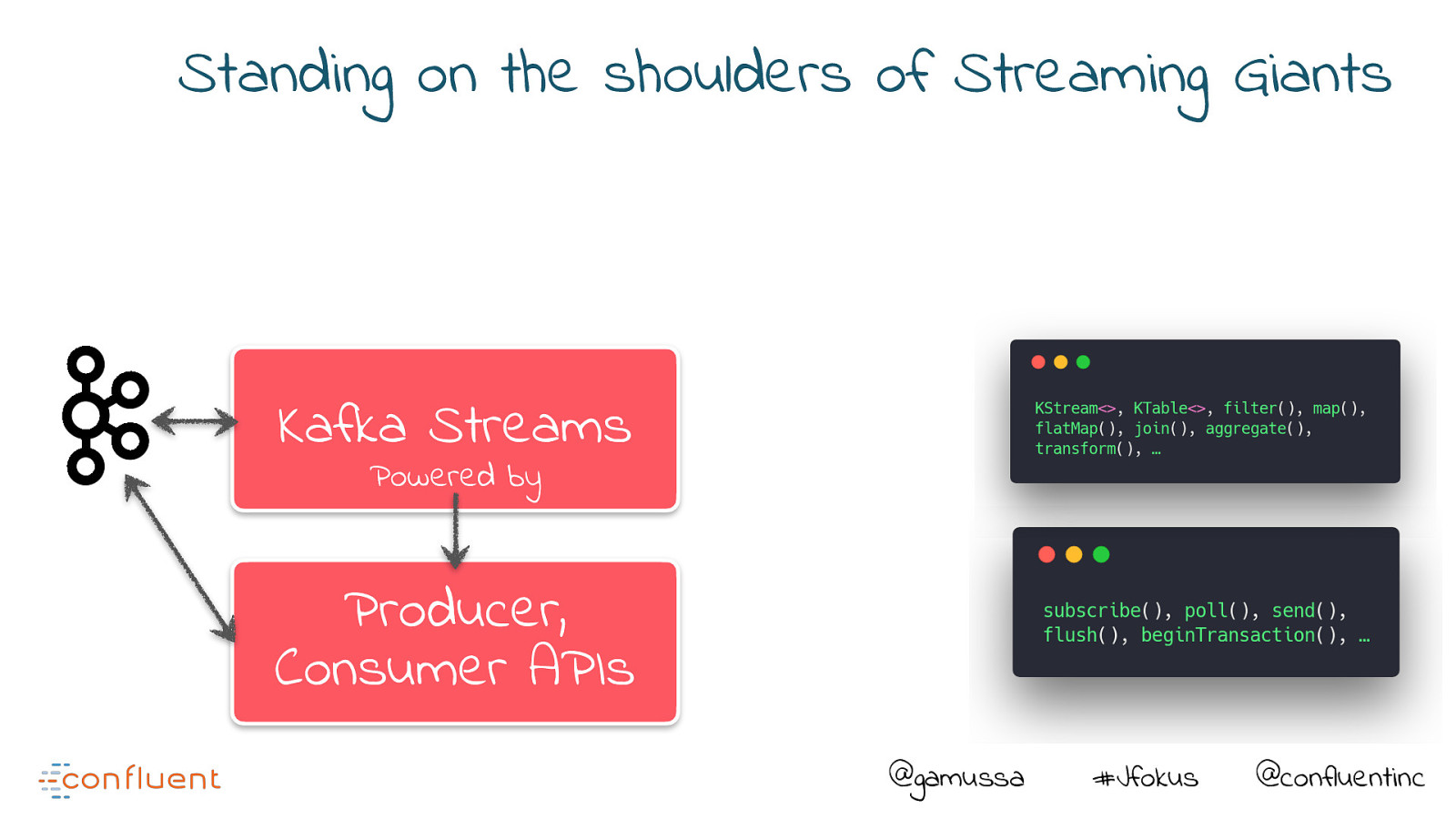

Standing on the shoulders of Streaming Giants Kafka Streams Powered by Producer, Consumer APIs @gamussa #Jfokus @confluentinc

Standing on the shoulders of Streaming Giants KSQL KSQL UDFs Kafka Streams Powered by Producer, Consumer APIs @gamussa #Jfokus @confluentinc

Standing on the shoulders of Streaming Giants KSQL Powered by KSQL UDFs Kafka Streams Powered by Producer, Consumer APIs @gamussa #Jfokus @confluentinc

Standing on the shoulders of Streaming Giants KSQL Ease of use Powered by KSQL UDFs Kafka Streams Powered by Producer, Consumer APIs Flexibility @gamussa #Jfokus @confluentinc

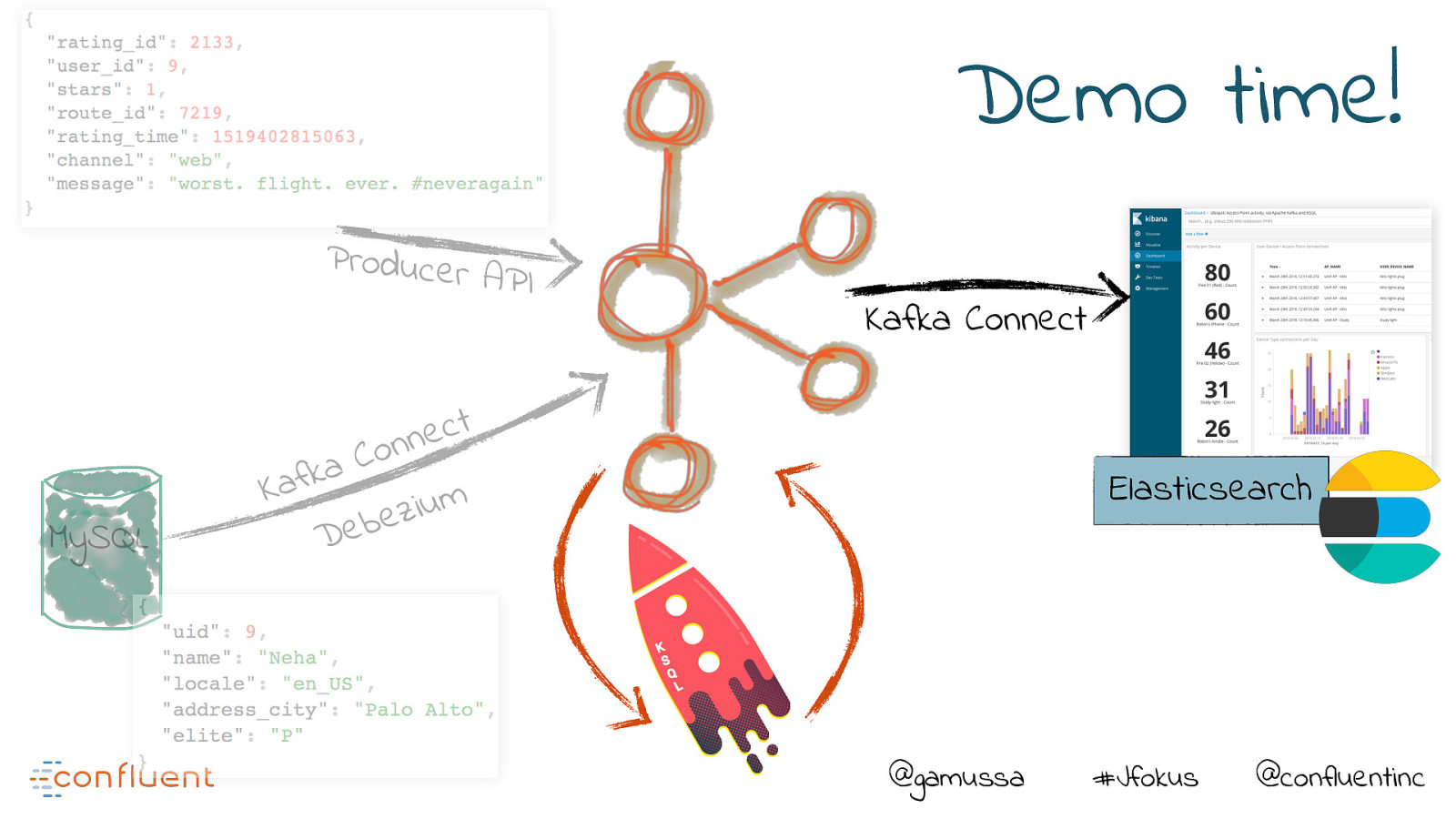

Demo time! Producer API, MySQL, Kafka Connect, Debezium, Kafka Connect, Elasticsearch

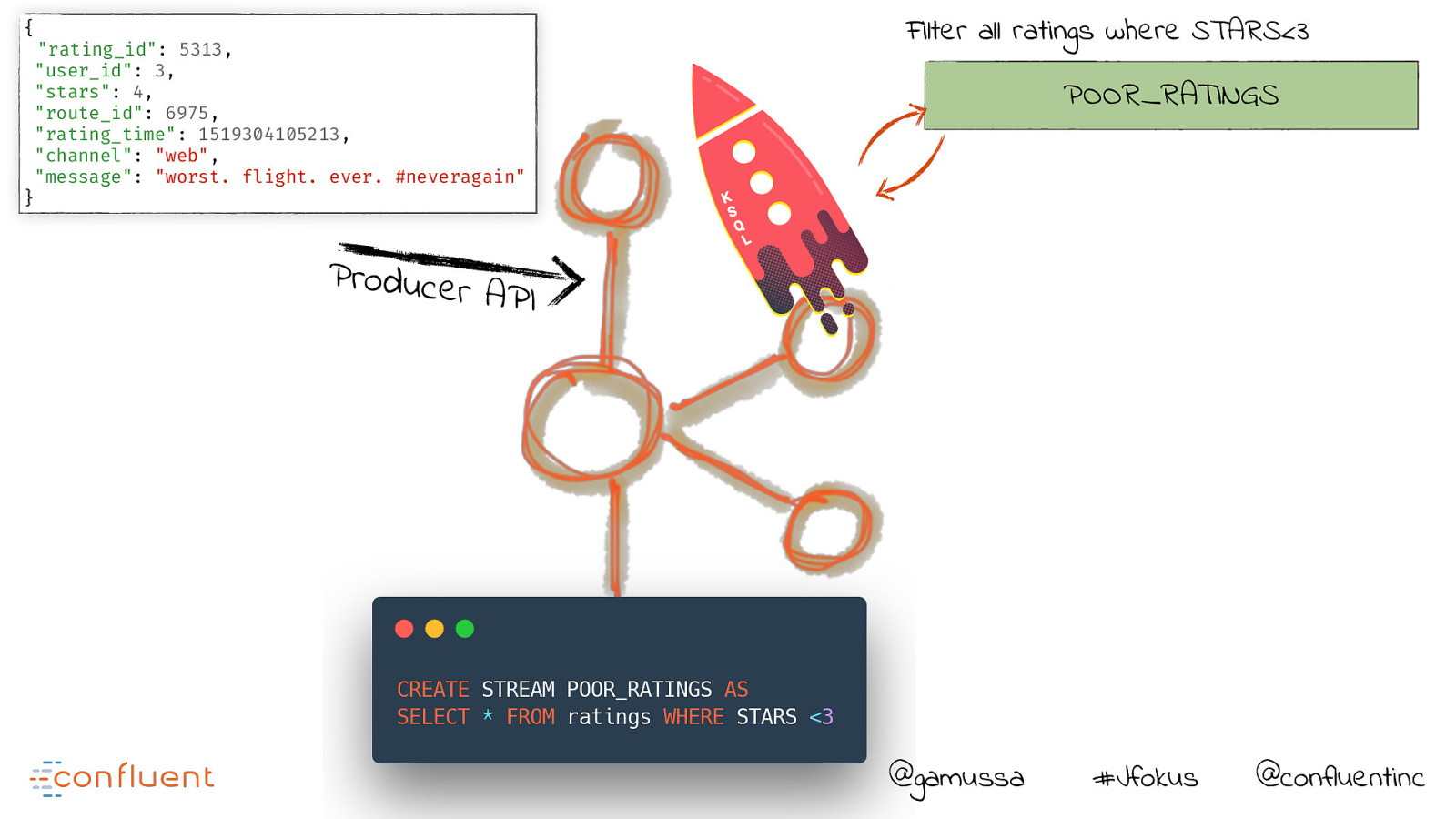

Producer API: { "rating_id": 5313, "user_id": 3, "stars": 4, "route_id": 6975, "rating_time": 1519304105213, "channel": "web", "message": "worst. flight. ever. #neveragain" } Filter all ratings where STARS<3: POOR_RATINGS
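The filter step can be modelled in a few lines of plain Python. This is only a sketch of the semantics (keep events whose `stars` value is below 3), not how KSQL runs it; the second sample rating is hypothetical and added so that something passes the filter.

```python
def poor_ratings(ratings, threshold=3):
    """Yield only the ratings whose star count is below the threshold."""
    for rating in ratings:
        if rating["stars"] < threshold:
            yield rating


ratings = [
    # Sample event from the slide.
    {"rating_id": 5313, "user_id": 3, "stars": 4, "route_id": 6975,
     "rating_time": 1519304105213, "channel": "web",
     "message": "worst. flight. ever. #neveragain"},
    # Hypothetical event, not from the talk.
    {"rating_id": 5314, "user_id": 3, "stars": 1, "route_id": 6975,
     "rating_time": 1519304115213, "channel": "web",
     "message": "hypothetical low rating"},
]

poor = list(poor_ratings(ratings))
print([r["rating_id"] for r in poor])
```

Because the function is a generator, it processes one event at a time, which loosely mirrors how a continuous query handles an unbounded stream.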

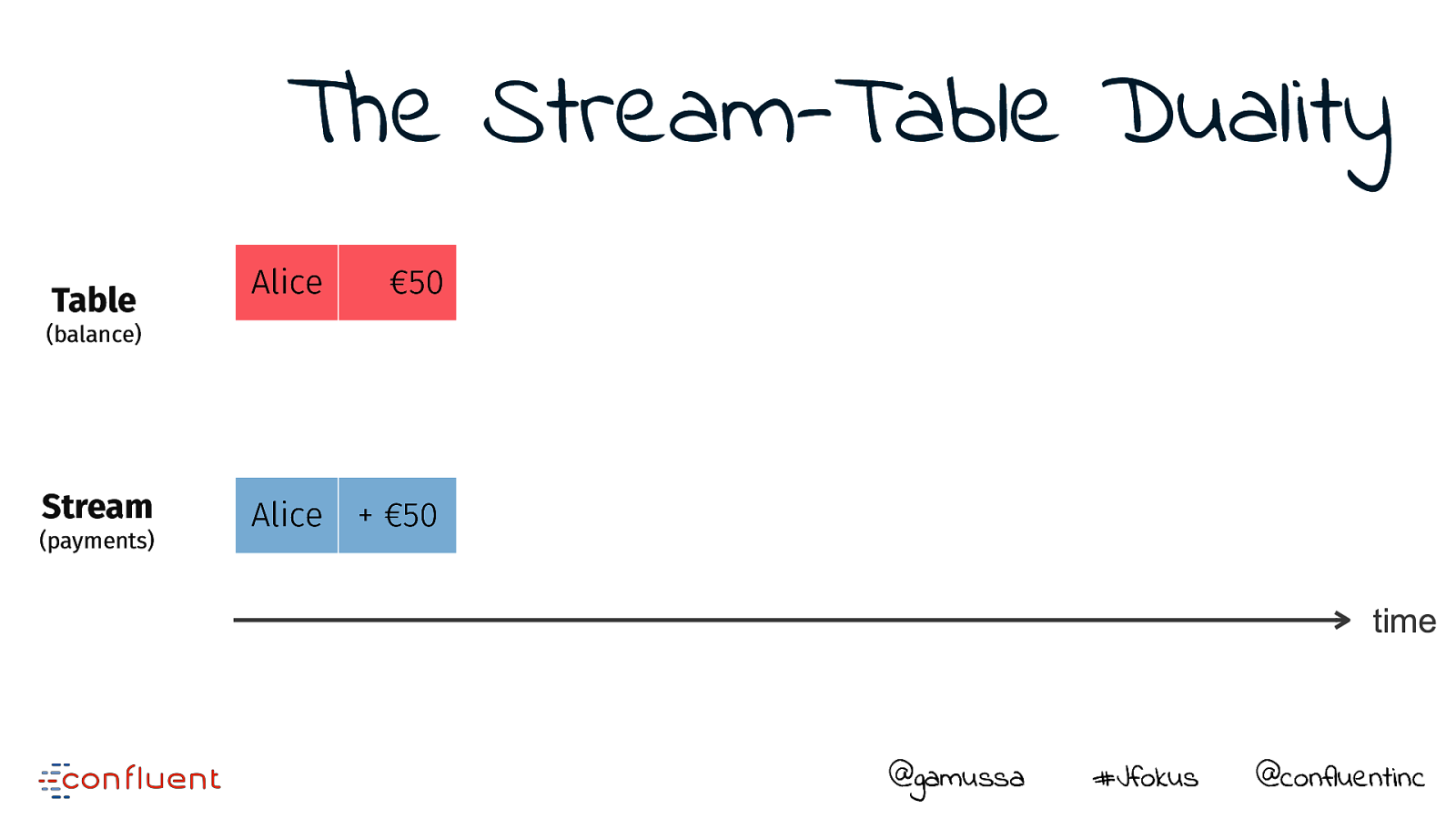

Do you think that’s a table you are querying?

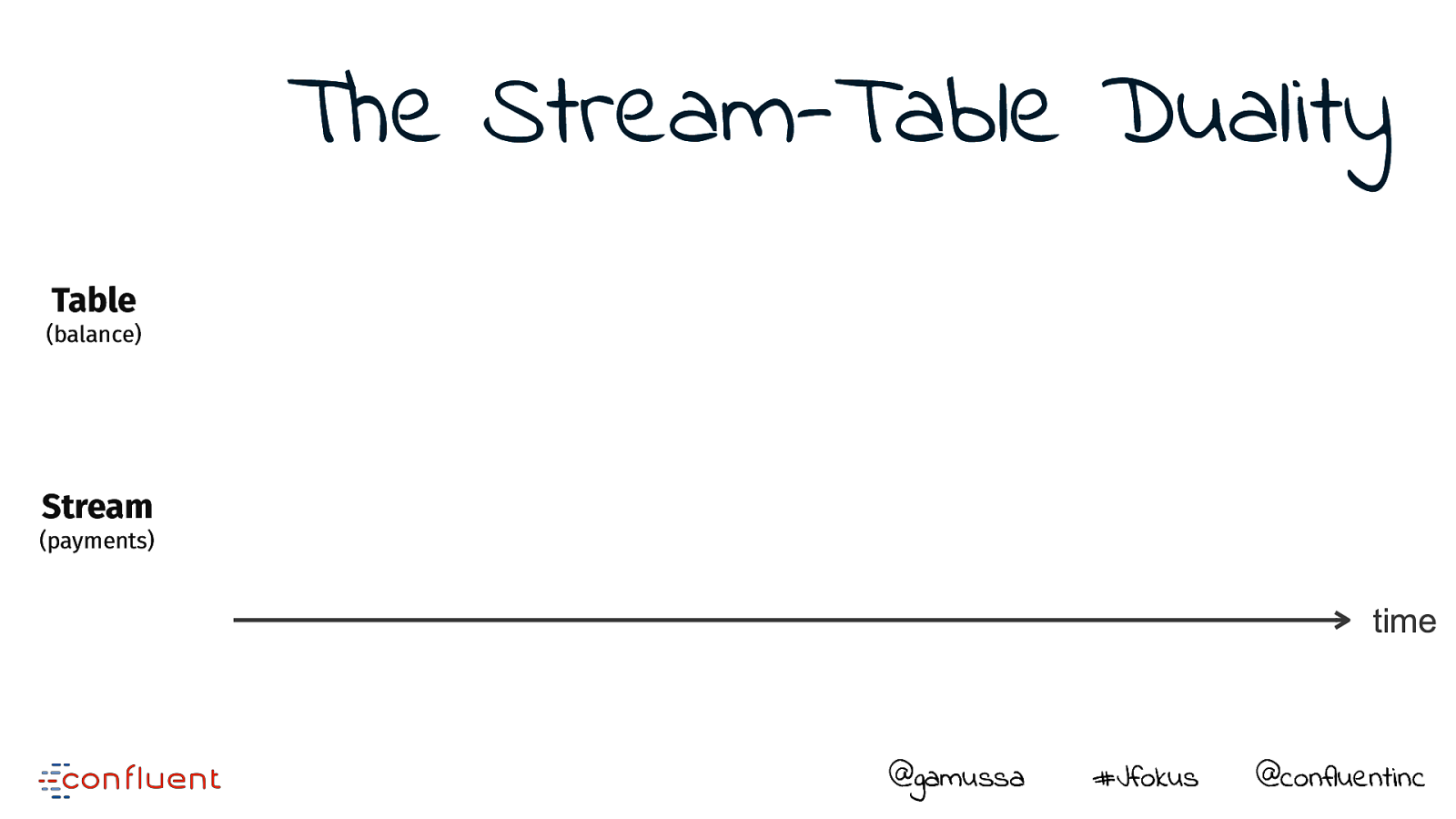

The Stream-Table Duality Table (balance) Stream (payments) time @gamussa #Jfokus @confluentinc

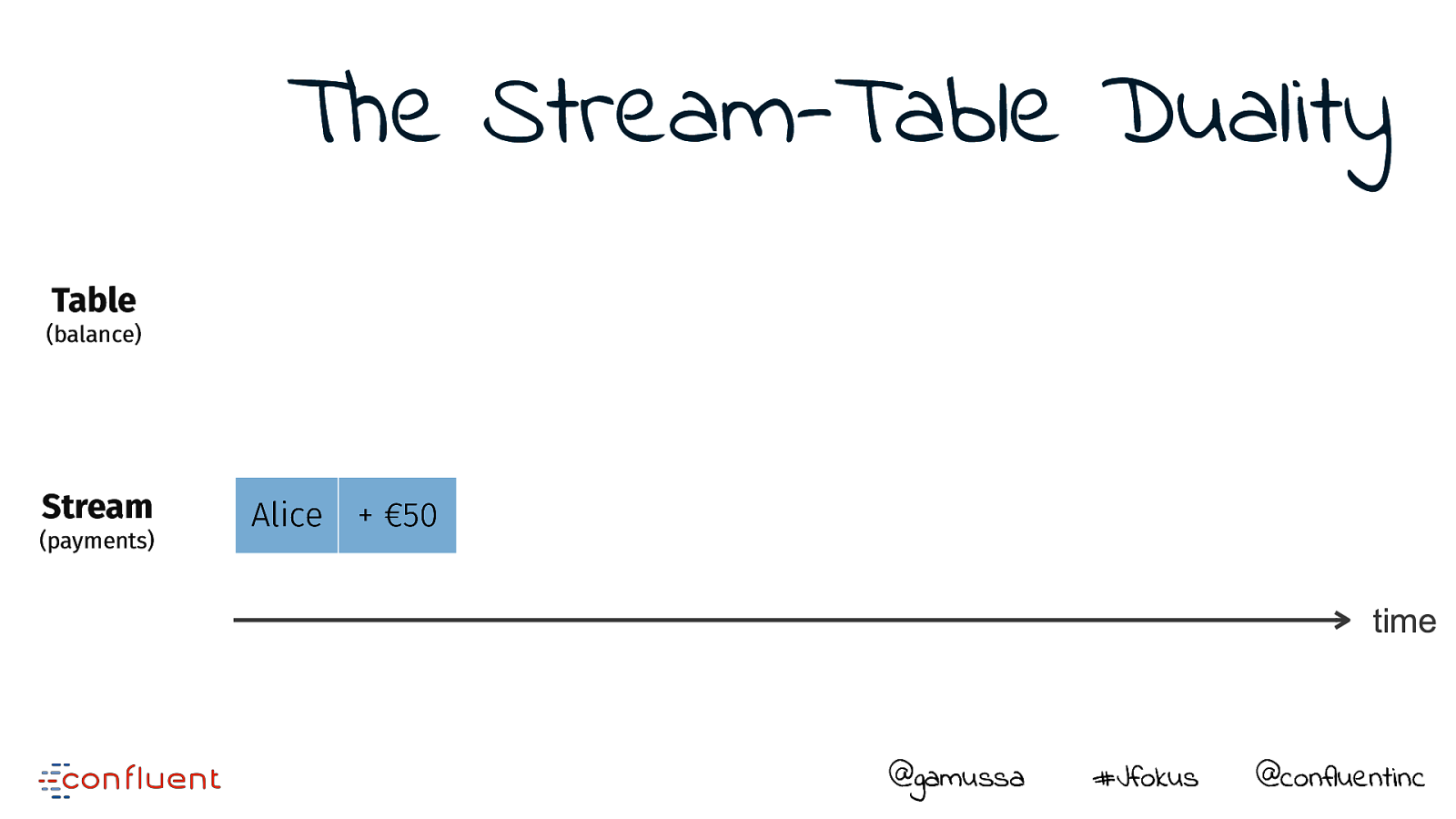

The Stream-Table Duality Table (balance) Stream (payments) Alice

- €50 time @gamussa #Jfokus @confluentinc

The Stream-Table Duality Table Alice €50 Alice

- €50 (balance) Stream (payments) time @gamussa #Jfokus @confluentinc

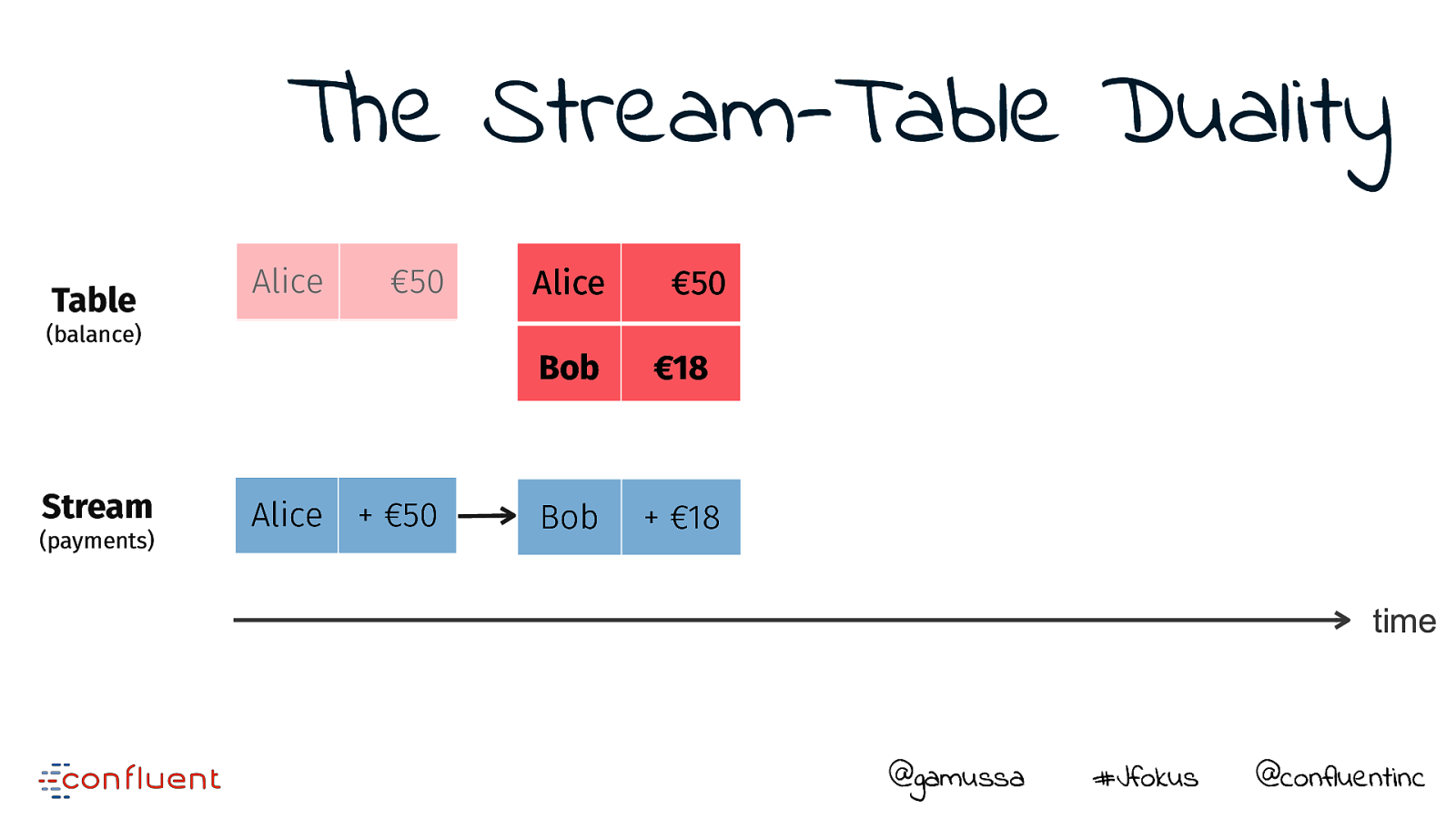

The Stream-Table Duality Table Alice €50 (balance) Stream (payments) Alice

- €50 Alice €50 Bob €18 Bob

- €18 time @gamussa #Jfokus @confluentinc

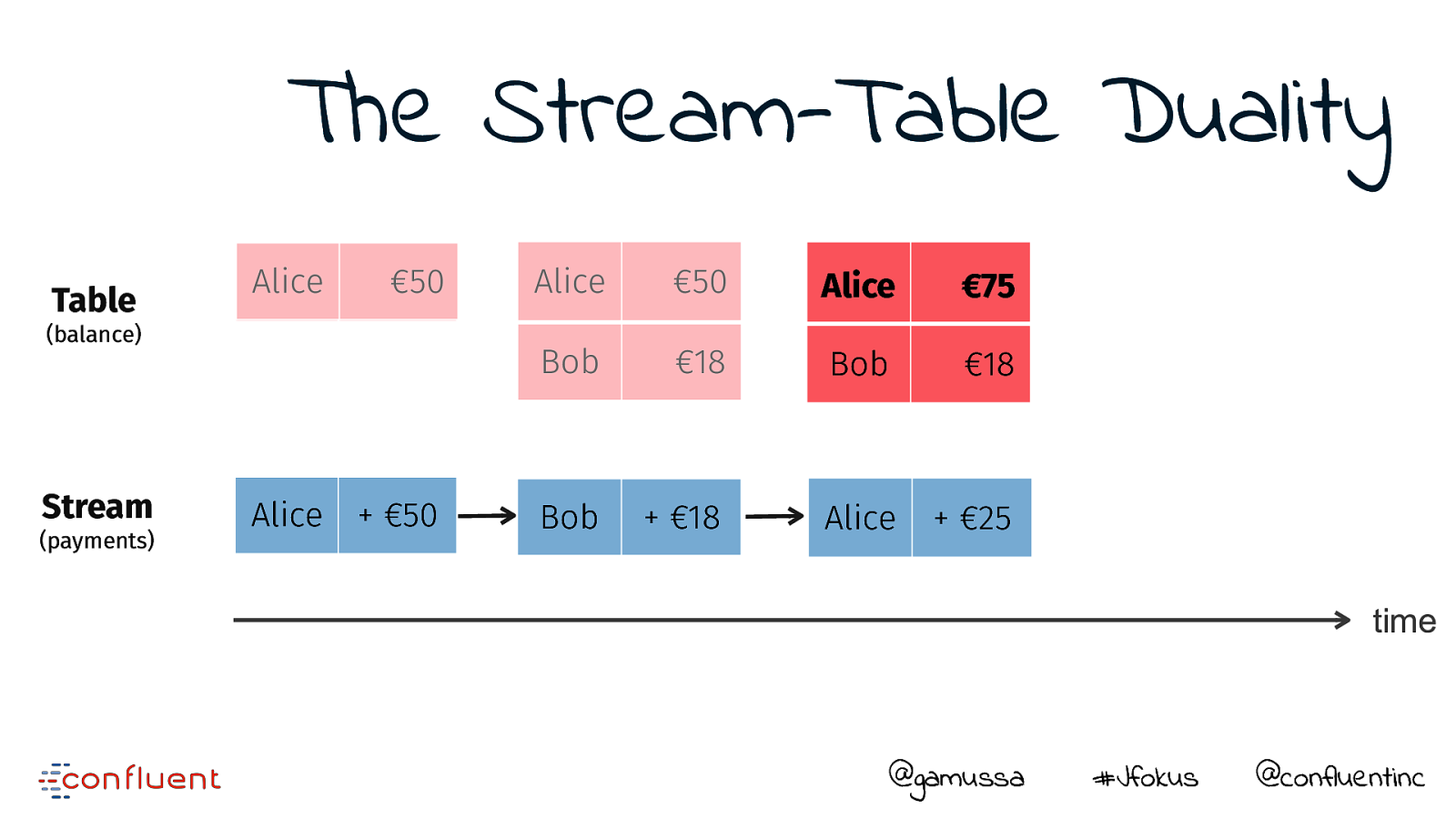

The Stream-Table Duality Table Alice €50 (balance) Stream (payments) Alice

- €50 Alice €50 Alice €75 Bob €18 €18 Bob €18 Bob

- €18 Alice

- €25 time @gamussa #Jfokus @confluentinc

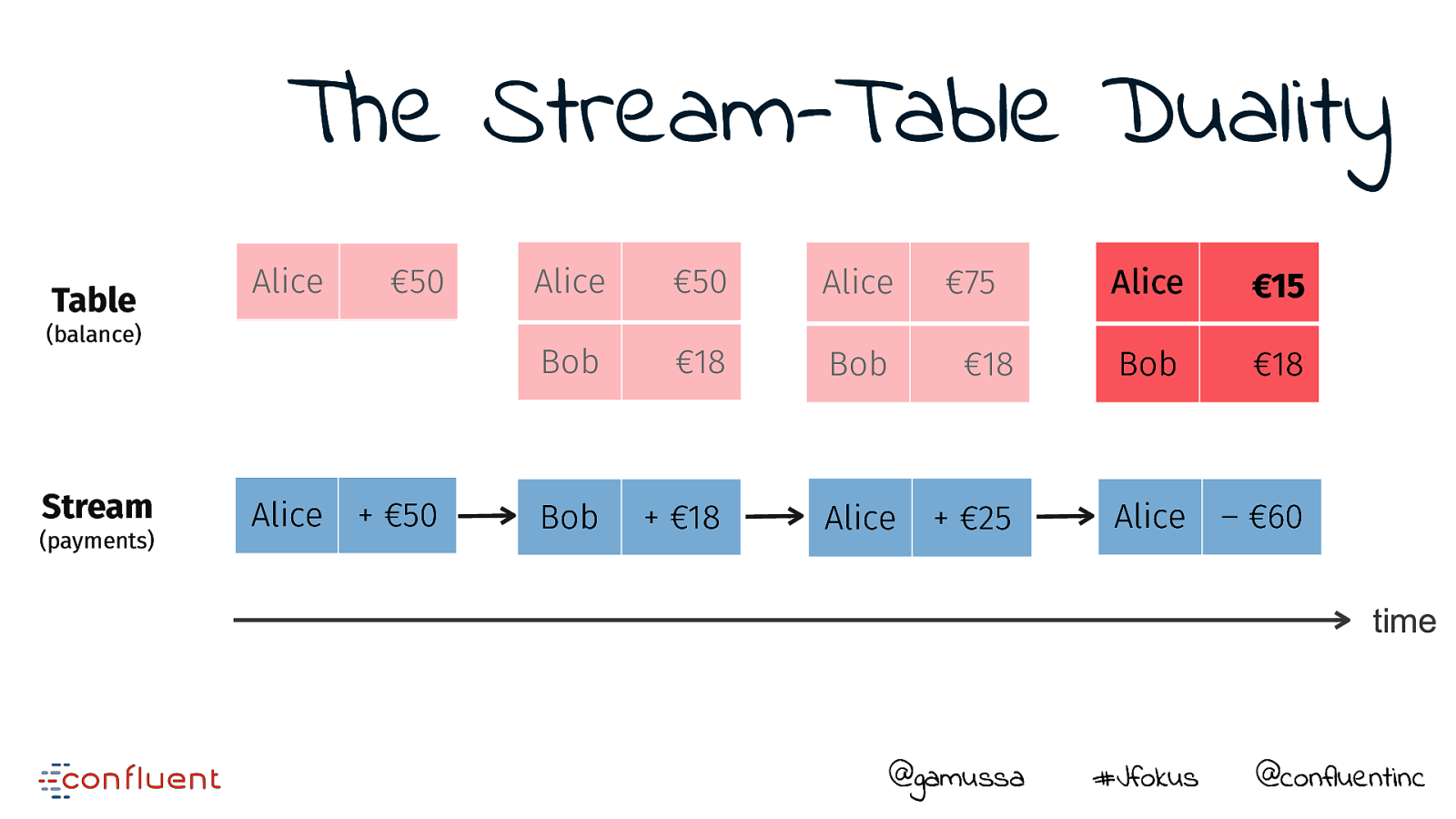

The Stream-Table Duality Table Alice €50 (balance) Stream (payments) Alice

- €50 Alice €50 Alice €75 €75 Alice €15 Bob €18 €18 Bob €18 Bob €18 Bob

- €18 Alice

- €25 Alice – €60 time @gamussa #Jfokus @confluentinc
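The duality can be sketched as a fold: replaying the stream of payments, in order, yields the table of balances at each point in time. The sketch assumes the payments on the slides are +€50 and +€25 and −€60 for Alice and +€18 for Bob, which is what the balances shown (€50, €75, €15 and €18) imply.

```python
from collections import defaultdict

# Payments stream, in arrival order (amounts in euros).
payments = [("Alice", 50), ("Bob", 18), ("Alice", 25), ("Alice", -60)]


def table_snapshots(stream):
    """Yield the balance table after each payment event is applied."""
    balances = defaultdict(int)
    for user, amount in stream:
        balances[user] += amount
        yield dict(balances)  # copy, so earlier snapshots stay unchanged


snapshots = list(table_snapshots(payments))
for snapshot in snapshots:
    print(snapshot)
```

Going the other way, the sequence of changes between consecutive snapshots is itself a stream, which is the second half of the duality.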

@gamussa #Jfokus @confluentinc

The truth is the log. The database is a cache of a subset of the log. @gamussa #Jfokus @confluentinc

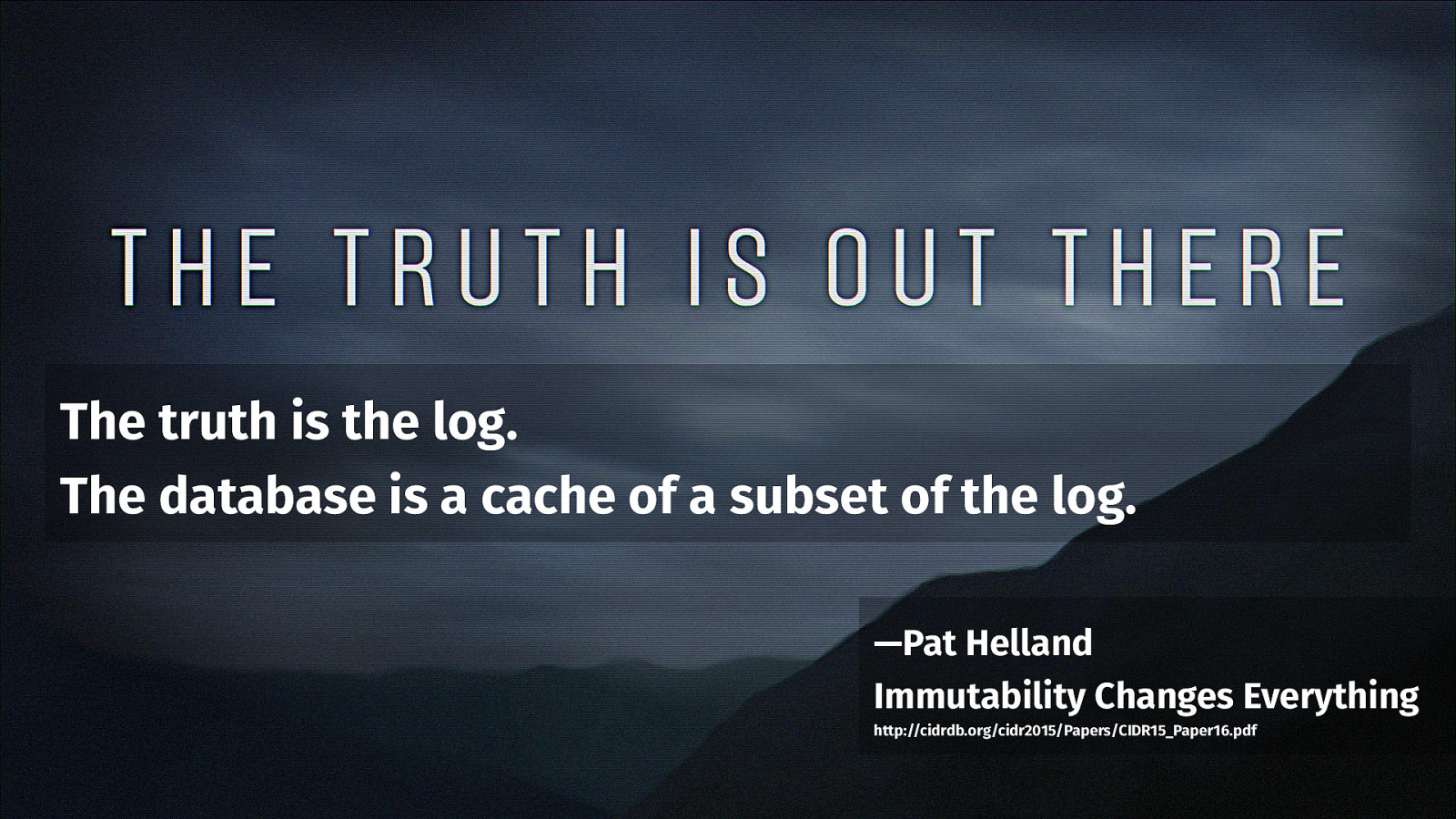

The truth is the log. The database is a cache of a subset of the log. —Pat Helland Immutability Changes Everything http://cidrdb.org/cidr2015/Papers/CIDR15_Paper16.pdf @gamussa #Jfokus @confluentinc

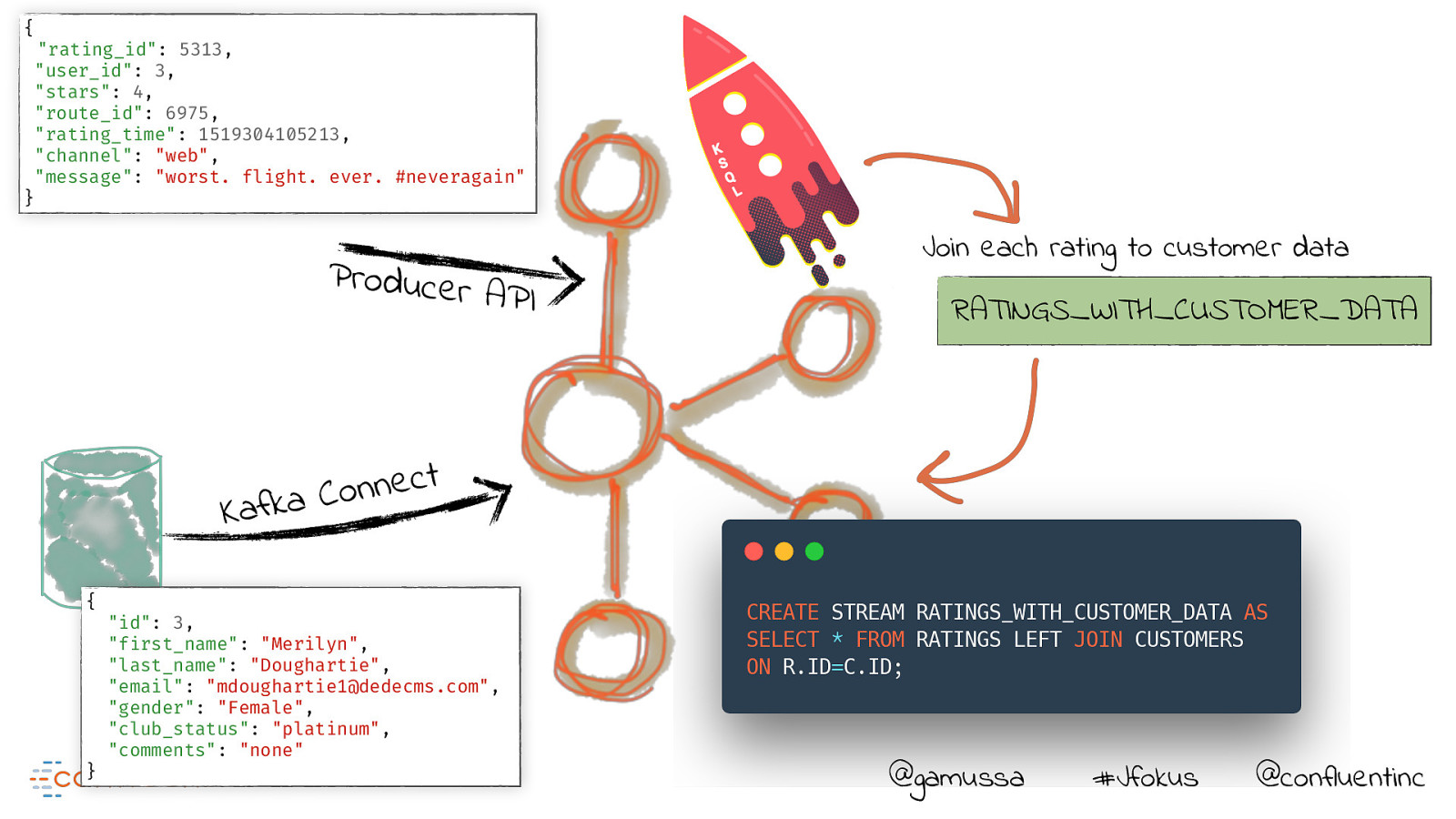

{ “rating_id”: 5313, “user_id”: 3, “stars”: 4, “route_id”: 6975, “rating_time”: 1519304105213, “channel”: “web”, “message”: “worst. flight. ever. #neveragain” } Producer API Join each rating to customer data RATINGS_WITH_CUSTOMER_DATA t c e n n o C a k f a K { } “id”: 3, “first_name”: “Merilyn”, “last_name”: “Doughartie”, “email”: “mdoughartie1@dedecms.com”, “gender”: “Female”, “club_status”: “platinum”, “comments”: “none” @gamussa #Jfokus @confluentinc

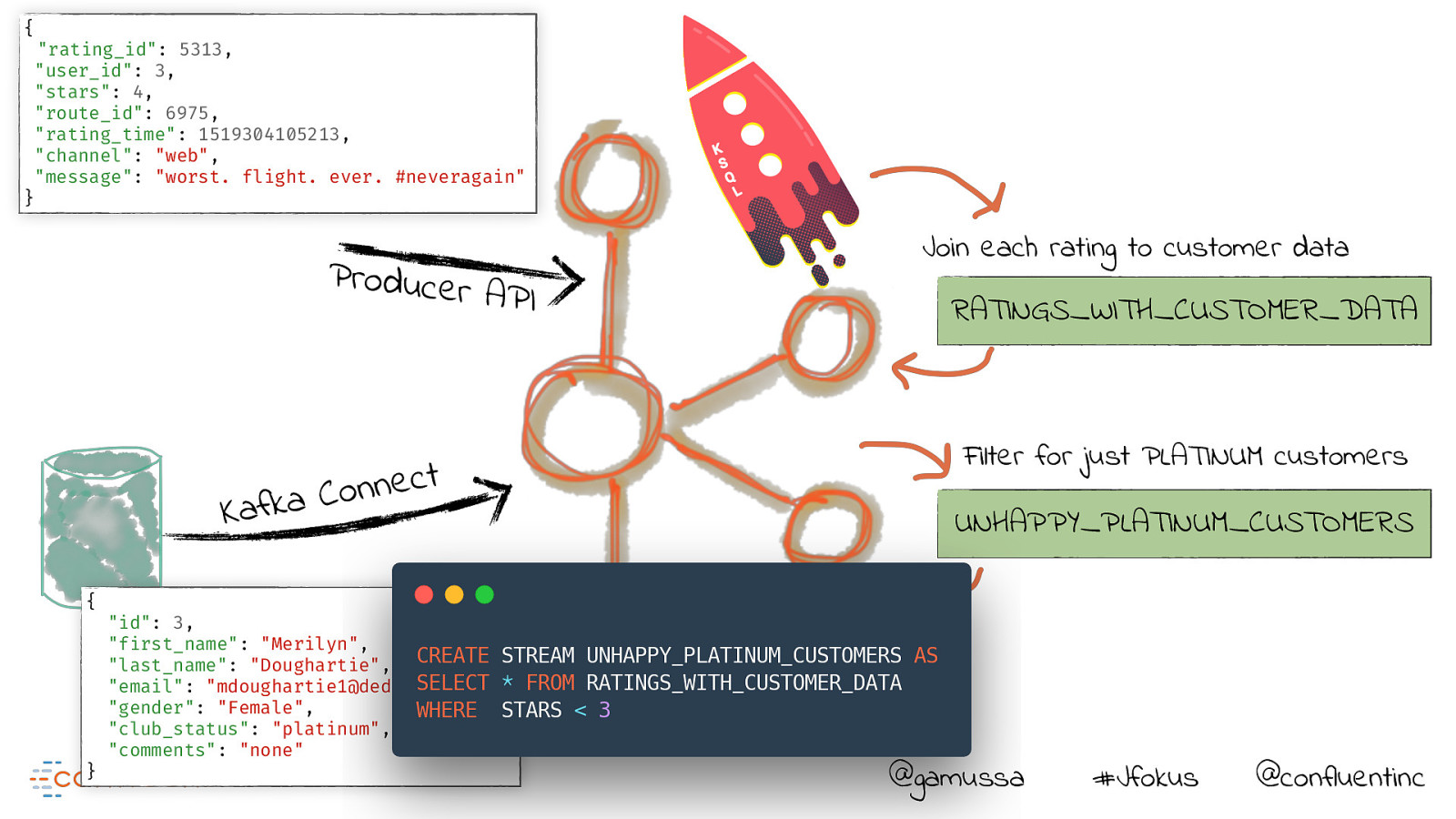

{ “rating_id”: 5313, “user_id”: 3, “stars”: 4, “route_id”: 6975, “rating_time”: 1519304105213, “channel”: “web”, “message”: “worst. flight. ever. #neveragain” } Producer API t c e n n o C a k f a K { } “id”: 3, “first_name”: “Merilyn”, “last_name”: “Doughartie”, “email”: “mdoughartie1@dedecms.com”, “gender”: “Female”, “club_status”: “platinum”, “comments”: “none” Join each rating to customer data RATINGS_WITH_CUSTOMER_DATA Filter for just PLATINUM customers UNHAPPY_PLATINUM_CUSTOMERS @gamussa #Jfokus @confluentinc
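A plain-Python model of the two steps: each rating is enriched by looking up its `user_id` in a customer table keyed by `id`, then the result is filtered. The `stars < 3` condition in the second step is an assumption suggested by the name UNHAPPY_PLATINUM_CUSTOMERS, and the second rating is hypothetical.

```python
# Customer table keyed by id (sample record from the slide).
customers = {
    3: {"id": 3, "first_name": "Merilyn", "last_name": "Doughartie",
        "email": "mdoughartie1@dedecms.com", "gender": "Female",
        "club_status": "platinum", "comments": "none"},
}

ratings = [
    {"rating_id": 5313, "user_id": 3, "stars": 4, "channel": "web",
     "message": "worst. flight. ever. #neveragain"},
    # Hypothetical event, not from the talk.
    {"rating_id": 5314, "user_id": 3, "stars": 1, "channel": "web",
     "message": "hypothetical low rating"},
]


def join_ratings_to_customers(rating_stream, customers_by_id):
    """Enrich each rating with customer fields; skip unknown users."""
    for rating in rating_stream:
        customer = customers_by_id.get(rating["user_id"])
        if customer is None:
            continue  # inner-join behaviour; a left join would keep the rating
        extra = {k: customer[k] for k in ("first_name", "last_name", "email", "club_status")}
        yield {**rating, **extra}


def unhappy_platinum(joined_stream, threshold=3):
    """Keep low ratings (assumed: stars < 3) from platinum customers."""
    return [j for j in joined_stream
            if j["club_status"] == "platinum" and j["stars"] < threshold]


joined = list(join_ratings_to_customers(ratings, customers))
unhappy = unhappy_platinum(joined)
print([u["rating_id"] for u in unhappy])
```

The lookup against a dictionary stands in for a stream-table join: the customer table holds the latest state per key, and each arriving rating reads from it.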

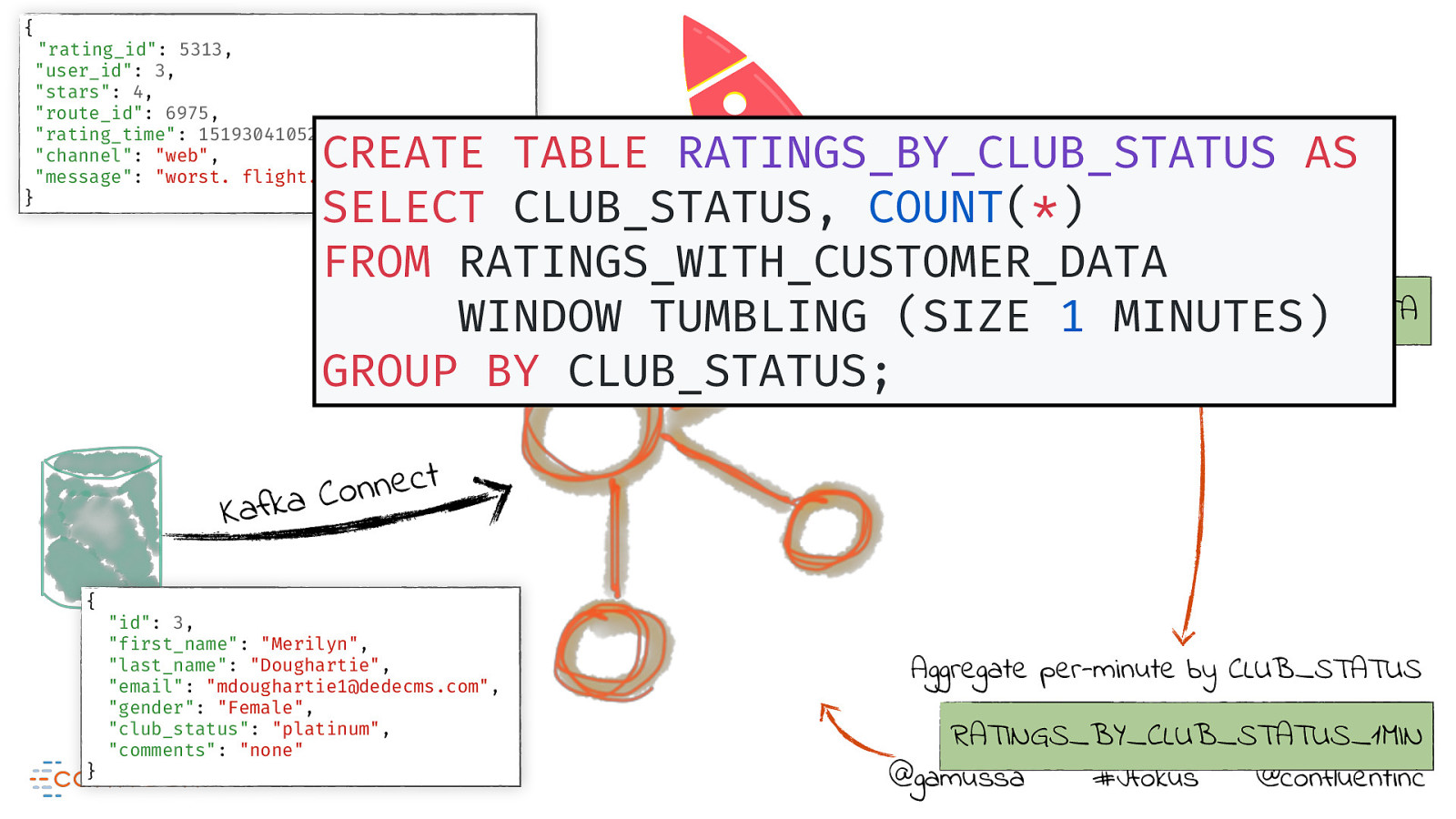

Producer API: { "rating_id": 5313, "user_id": 3, "stars": 4, "route_id": 6975, "rating_time": 1519304105213, "channel": "web", "message": "worst. flight. ever. #neveragain" } Kafka Connect: { "id": 3, "first_name": "Merilyn", "last_name": "Doughartie", "email": "mdoughartie1@dedecms.com", "gender": "Female", "club_status": "platinum", "comments": "none" } Join each rating to customer data: RATINGS_WITH_CUSTOMER_DATA. Aggregate per-minute by CLUB_STATUS: RATINGS_BY_CLUB_STATUS_1MIN

CREATE TABLE RATINGS_BY_CLUB_STATUS AS SELECT CLUB_STATUS, COUNT(*) FROM RATINGS_WITH_CUSTOMER_DATA WINDOW TUMBLING (SIZE 1 MINUTES) GROUP BY CLUB_STATUS;
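What a one-minute tumbling window does can be sketched in plain Python: each event is assigned to the fixed, non-overlapping window containing its timestamp, and events are counted per window and `club_status`. Using `rating_time` as the event timestamp is an assumption made for this sketch, and every event other than the slide's timestamp is hypothetical.

```python
from collections import Counter

WINDOW_MS = 60_000  # one minute, matching SIZE 1 MINUTES


def count_by_club_status(events, window_ms=WINDOW_MS):
    """Count events per (window start, club_status) in tumbling windows."""
    counts = Counter()
    for event in events:
        # Round the timestamp down to the start of its window.
        window_start = event["rating_time"] - event["rating_time"] % window_ms
        counts[(window_start, event["club_status"])] += 1
    return dict(counts)


events = [
    {"rating_time": 1519304105213, "club_status": "platinum"},  # timestamp from the slide
    {"rating_time": 1519304115213, "club_status": "platinum"},  # hypothetical, 10 s later
    {"rating_time": 1519304165213, "club_status": "platinum"},  # hypothetical, 60 s later
    {"rating_time": 1519304120000, "club_status": "bronze"},    # hypothetical
]

counts = count_by_club_status(events)
for (window_start, status), n in sorted(counts.items()):
    print(window_start, status, n)
```

The result is keyed by window and group, which is why the KSQL statement creates a table rather than a stream: each key holds a count that is updated as more events arrive.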

Resources and Next Steps: https://github.com/confluentinc/demo-scene, http://confluent.io, https://slackpass.io/confluentcommunity (#ksql, #connect)

Free Books! https://www.confluent.io/apache-kafka-stream-processing-book-bundle

Thanks! @gamussa viktor@confluent.io

One last thing…

https://kafka-summit.org Gamov30

Have you ever thought that you needed to be a programmer to do stream processing? Think again! Apache Kafka provides low-latency pub-sub messaging coupled with native storage and stream processing capabilities. Integrating Kafka with RDBMS and other data stores is simple with Kafka Connect. KSQL is the open-source SQL streaming engine for Apache Kafka, and makes it possible to build stream processing applications at scale, written using a familiar SQL interface. In this talk we’ll explain the architectural reasoning for Apache Kafka and the benefits of real-time integration, and we’ll build a streaming data pipeline using nothing but our bare hands, Kafka Connect, and KSQL. You’ll learn how to use SQL statements to filter streams of events in Kafka, join events to data in RDBMS, and more.

Resources

The following resources were mentioned during the presentation or are useful additional information.

Code

The following code examples from the presentation can be tried out live.

- Demo on GH